May 1, 2011 - Depth Seekers (DeepSeek) Yesterday (April 30) at AI A new model called DeepSeek-Prover-V2-671B was released on the open-source community Hugging Face, followed by the publication of the paper's information on GitHub and other platforms.

1AI cites a paper describing DeepSeek-Prover-V2 as a formalization-focusedmathematical reasoningofOpen Source Large Language Model, based on DeepSeek-V3-0324, proves pipeline generation of initial data by recursion theorem.

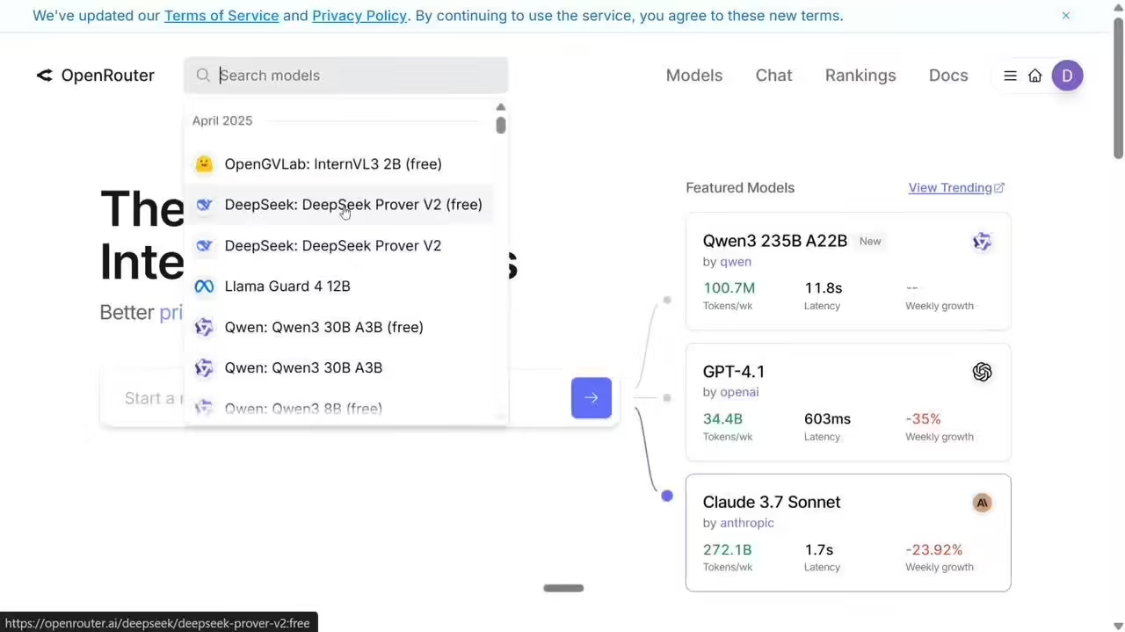

Deepseek has launched DeepSeek-Prover-V2-671B(in conjunction with the V3 Base Big Model),DeepSeek-Prover-V2-7B(augmented model) two models, and DeepSeek-ProverBench Dataset.

DeepSeek-Prover-V2-671B uses the same architecture as DeepSeek V3-0324, not for regular conversations or reasoning, but for formal theorem proving, a model that specifically enhances mathematical capabilities.

The DeepSeek team first guided the DeepSeek-V3 model to decompose the complex theorem into a series of subgoals, integrating both non-formal and formal mathematical reasoning, and formalizing the proof steps on the Lean 4 platform.

Next, a smaller 7B parametric model is used to handle the proof search for subgoals, reducing the computational burden. Finally, the complete step-by-step proofs are combined with DeepSeek-V3's chain-of-thought to form "cold-start" data for reinforcement learning.

During training, the team filtered out a group of puzzles that could not be solved directly by the 7B model but the subgoals had been proved. By integrating the subgoal proofs, a complete formal proof is formed and interfaced with DeepSeek-V3's inference process to generate synthetic data.

Subsequently, the model fine-tunes this data and further improves its capabilities through reinforcement learning, using binary feedback (correct or incorrect) as a reward mechanism. In the end, DeepSeek-Prover-V2-671B set a new high in the field of neural theorem proving, theThe pass rate on the MiniF2F-test dataset was 88.9%In the PutnamBench dataset, 49 of the 658 problems were solved.

The team also released the ProverBench benchmark dataset, containing 325 formal math problems. Of these, 15 problems were derived from recent AIME competitions (AIME 24 and 25) in number theory and algebra and represent high school competition difficulty.

The remaining 310 problems are drawn from selected textbooks and pedagogical content, covering a wide range of areas including linear algebra, calculus, and probability. This dataset is intended to provide comprehensive assessment criteria for high school competitions and undergraduate mathematics, and to promote the testing and application of models in diverse scenarios.