-

Step-Star Open-Sources Image-to-Video Model Step-Video-TI2V: Controllable Motion Amplitude and Camera Motion

On March 20: in February this year, Step-Star open-sourced two Step-series multimodal models, the Step-Video-T2V video generation model and the Step-Audio speech model. Today Step-Star continues by open-sourcing the image-to-video model Step-Video-TI2V, which is trained on the 30B-parameter Step-Video-T2V. It supports generating 102-frame, 5-second videos at 540P resolution, with two core features: motion amplitude control and camera motion control ...

-

Tencent Hunyuan Releases and Open-Sources Image-to-Video Model: Generates 5-Second Short Videos with Automatic Background Sound Effects

On March 6, 1AI learned from Tencent Hunyuan's official account that Tencent Hunyuan has released and open-sourced an image-to-video model. At the same time, it launched lip-sync and motion-driven features, along with support for generating background sound effects and 2K high-quality video. With image-to-video, users only need to upload a picture and briefly describe how they want the scene to move and how the camera should move; Hunyuan then animates the picture accordingly and turns it into a 5-second short video, which can also be automatically matched with background sound effects. In addition, by uploading a picture of a character and entering the text or audio to be "lip-synced", users can make the character in the picture "talk"...

-

Tencent, Sun Yat-sen University and Hong Kong University of Science and Technology Jointly Launch Image-to-Video Model "Follow-Your-Pose-v2"

The Tencent Hunyuan team, Sun Yat-sen University and the Hong Kong University of Science and Technology have jointly launched a new image-to-video model, "Follow-Your-Pose-v2", and the related results have been published on arXiv (DOI: 10.48550/arXiv.2406.03035). According to reports, "Follow-Your-Pose-v2" needs only a picture of a person and a motion video as input, and the person in the picture will then follow the motion in the video. Generated videos can be up to 10 seconds long. Compared with the previously launched model, "Fol…

-

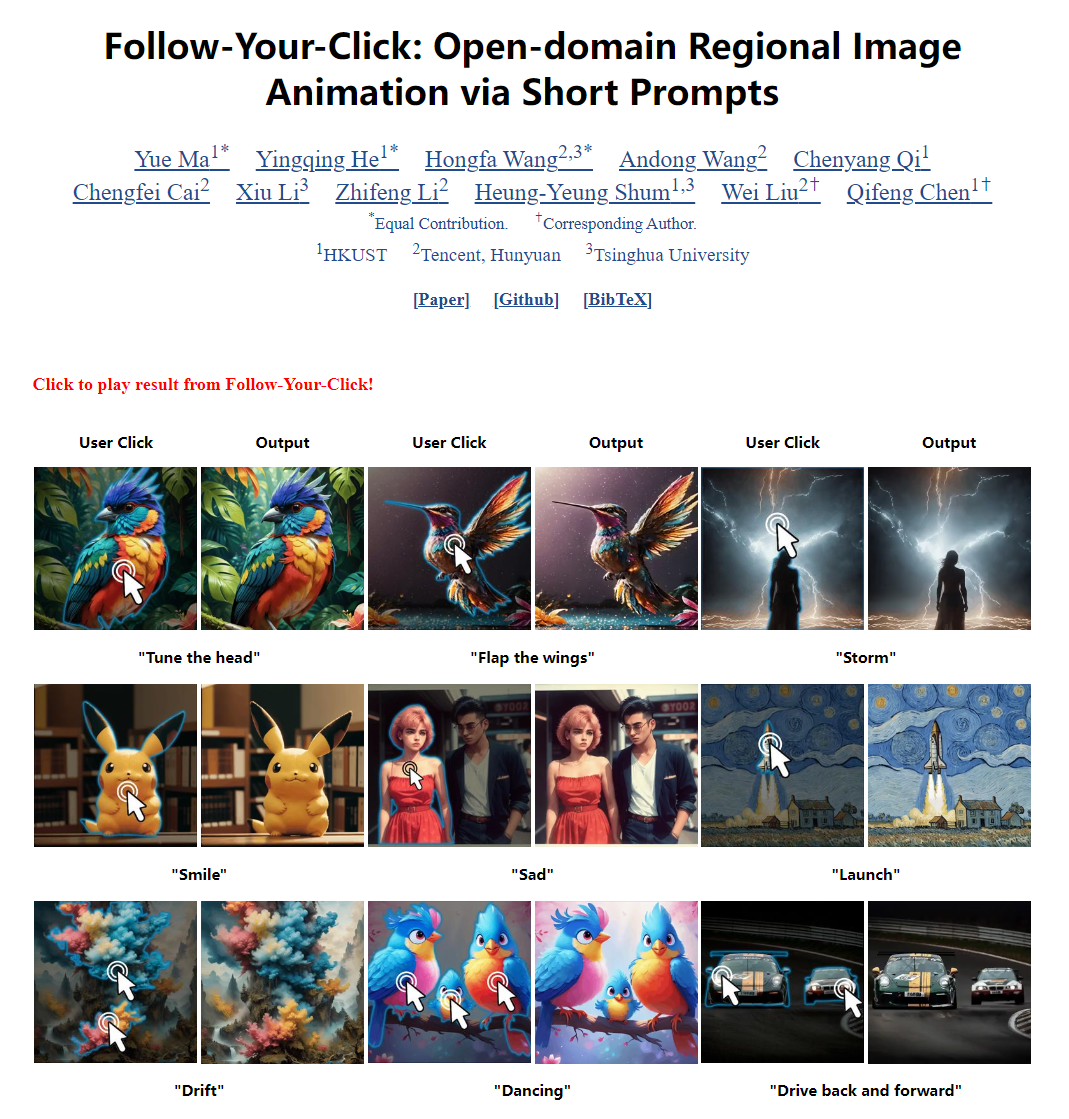

Tencent, Tsinghua University and Hong Kong University of Science and Technology Jointly Launch New Image-to-Video Model "Follow-Your-Click": Animate Wherever the User Clicks

Tencent, Tsinghua University, and the Hong Kong University of Science and Technology have jointly launched a new image-to-video model called "Follow-Your-Click". The project is now available on GitHub (the code is to be released in April), and a research paper has also been published (arXiv: 2403.08268). The model's main functions include local animation generation and multi-object animation, with support for a variety of motions such as head adjustment and wing flapping. According to reports, Follow-Your-Click can generate local image animations from user clicks and short motion prompts. User…
