-

Apple's FastVLM Model Open for Trial: Mac Users Enjoy Lightning-Fast Video Captioning 85x Faster Than Similar AIs

September 2, 2025 - Technology outlet 9to5Mac reported yesterday (September 1) that Apple has launched an in-browser trial of its FastVLM visual language model on the Hugging Face platform. FastVLM is known for its "lightning-fast" video captioning, and users with an Apple Silicon Mac can try it directly. The model's core strength is its speed and efficiency. The model...

-

Zhipu AI Open-Sources Next-Generation General-Purpose Visual Language Model

Yesterday, Zhipu AI officially released and open-sourced GLM-4.1V-Thinking, a new-generation general-purpose visual language model that it describes as "a key leap from perception to cognition for the GLM series of visual models". GLM-4.1V-Thinking is a general-purpose reasoning model that supports multimodal inputs such as images, videos, and documents, and is designed for complex cognitive tasks. Building on the GLM-4V architecture, it introduces chain-of-thought (CoT) reasoning and adopts Reinforcement Learning with Curriculum Sampling (RLCS), which is a system...

-

Zhipu AI Receives 1 Billion RMB Strategic Investment from Pudong Venture Capital Group and Zhangjiang Group, Open-Sources New-Generation General-Purpose Visual Language Model GLM-4.1V-Thinking

July 2 - This morning, Zhipu AI held its Open Platform Industry Ecosystem Conference at the Zhangjiang Science Hall in Pudong, Shanghai, where it open-sourced the new-generation general-purpose visual language model GLM-4.1V-Thinking. At the conference, Zhipu AI announced that Pudong Venture Capital Group and Zhangjiang Group have made a strategic investment totaling 1 billion yuan in the company, and that the first tranche was recently completed. The three parties also launched a partnership to jointly build new AI infrastructure. Zhipu AI today officially released and open-sourced the visual language model GLM-4.1V-Thinking...

-

Apple releases FastVLM visual language model, paving the way for new smart glasses and other wearables

May 13, 2025 - Apple's machine learning team released and open-sourced the FastVLM visual language model on GitHub last week, in 0.5B, 1.5B, and 7B versions. According to the introduction, the model is built on Apple's own MLX framework and trained with the LLaVA codebase, and is optimized for on-device AI on Apple Silicon devices. According to the technical document, FastVLM achieves near-real-time response for high-resolution image processing while maintaining accuracy, and the required computation...

-

Hugging Face's Smallest AI Visual Language Model: 256 Million Parameters for PCs with Less Than 1GB of RAM

January 24, 2025 - Technology outlet NeoWin reported yesterday (January 23) that Microsoft plans to roll out Copilot Chat for Outlook and Copilot Chat for Teams starting in February 2025, bringing a new Copilot Chat experience and easier access. Citing Microsoft's updated Microsoft 365 roadmap, 1AI attached the information as follows: Late January 2025...

-

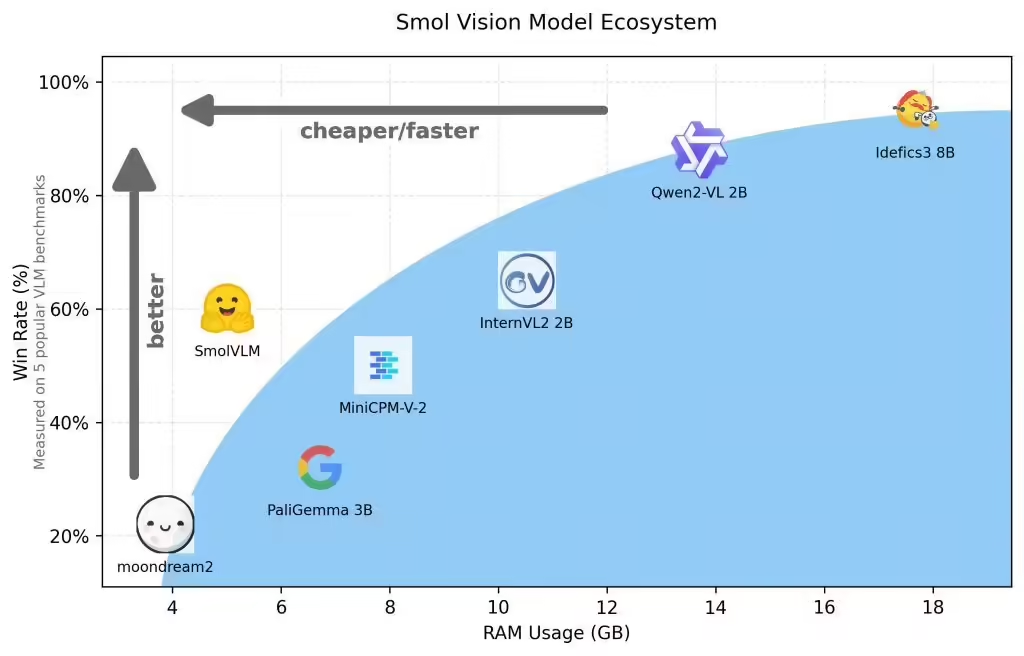

Hugging Face Releases SmolVLM Open-Source AI Model: 2 Billion Parameters for On-Device Inference, Small and Fast

Hugging Face published a blog post yesterday (November 26) announcing SmolVLM, a visual language model (VLM) with just 2 billion parameters for on-device inference, which stands out from its peers for its extremely low memory footprint. According to Hugging Face, SmolVLM is small, fast, memory efficient, and completely open source, with all model checkpoints, VLM datasets, training recipes, and tools released under the Apache 2.0 license. SmolVLM AI ...

-

Peking University, Tsinghua University and Others Jointly Release LLaVA-o1: The First Spontaneous Visual AI Model, a New Approach to Inference-Time Scaling

Nov. 19, 2024 - A team of researchers from Peking University, Tsinghua University, Pengcheng Lab, Alibaba DAMO Academy, and Lehigh University has introduced LLaVA-o1, the first visual language model capable of spontaneous (explained at the end of the article), systematic reasoning in the style of GPT-o1. LLaVA-o1 is a novel visual language model (VLM) designed for autonomous multi-stage reasoning. LLaVA-o1 has 1...
