-

Meituan open-sources virtual human video generation model LongCat-Video-Avatar: human-like even when "not talking"

On December 19, according to a post on the LongCat official account, Meituan's LongCat team officially released and open-sourced LongCat-Video-Avatar, a SOTA-level virtual human video generation model. Built on the LongCat-Video base model, it continues the core "One Model for Multitask" design and supports core functions such as Audio-Text-to-Video, Audio-Text-Image-to-Video, and video continuation, as well as..- 1.8k

-

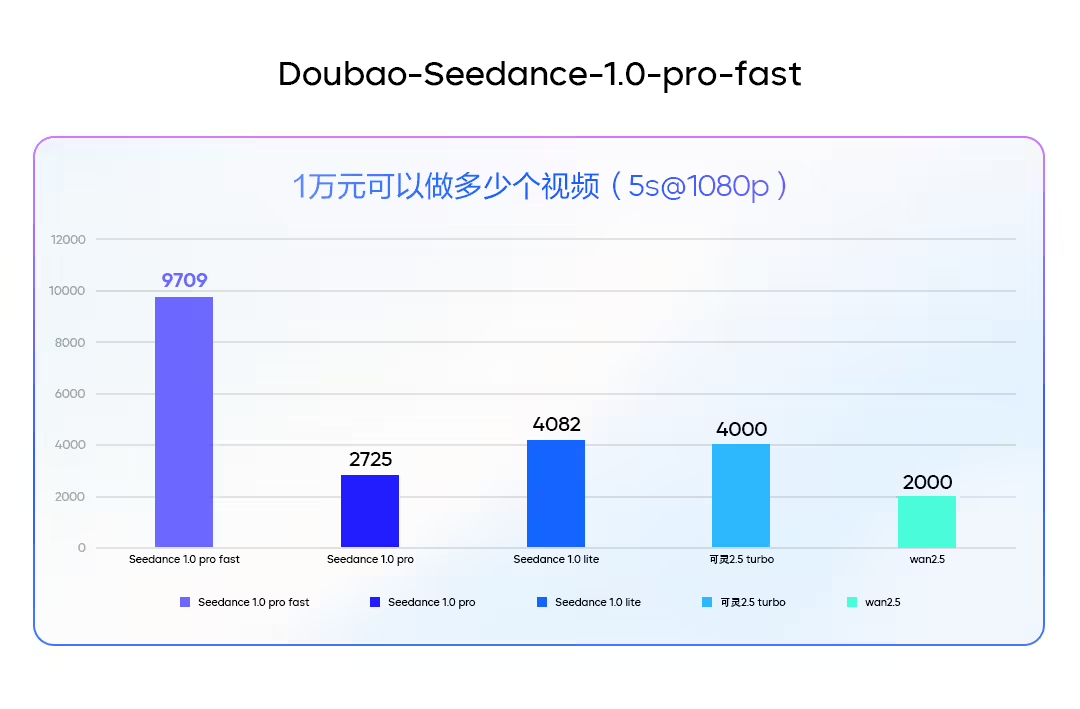

Volcano Engine launches Doubao video generation model 1.0 pro fast: 5-second 720P clip generated in 10 seconds

October 28 news: according to a post on the Volcano Engine official account, on October 24 Volcano Engine officially launched the Doubao video generation model 1.0 pro fast. Building on the core strengths of the Seedance 1.0 pro model, it achieves a significant efficiency breakthrough: generation speed rises by up to about three times, while the price falls by 72%. With Seedance 1.0 pro fast, a 5-second 720P video can be generated in only 10 seconds, faster than the pro version..- 1.6k

-

Meituan releases and open-sources the LongCat-Video video generation model, which stably generates 5-minute videos

October 27 news: this morning, the LongCat team released and open-sourced the LongCat-Video video generation model. According to the official introduction, it reaches open-source SOTA (state of the art) on the basic text-to-video and image-to-video tasks with a single unified model. Because it is natively pre-trained on the video continuation task, it can coherently generate minutes-long videos while keeping temporal consistency across frames and physically plausible motion, giving it a significant advantage in long video generation. According to the introduction, in recent years, the World Model has allowed artificial intelligence to truly understand..- 2.3k

-

Benchmarked against Sora 2: Google's Veo 3.1 video generation model gets a major upgrade

October 16 news: Google officially released its Veo 3.1 video generation model update today, with significant improvements at both the feature and model levels. On the feature side, Veo 3.1 adds audio support to the "Reflection Video" and "Suspend" functions for the first time, making the creation process more complete. Users can not only define characters and styles through multiple reference images, but can also use first-frame and last-frame images to generate seamless transitions, or extend clips to produce content longer than one minute. At the model level, Veo 3.1 has made significant progress in prompt understanding and audio-visual quality..- 2.2k

-

OpenAI rolls out Sora 2, the strongest video generation model in its family, with synchronized audio generation

October 1 news: early this morning Beijing time, OpenAI released its next-generation video generation model, Sora 2. The new model delivers significantly better visual quality and adds audio generation. It inherits and expands on earlier image generation techniques, and in the new app launched at the same time, users can verify their identity with a one-time recording of their own video and voice, and then have themselves or others make a "cameo" in the generated video. Like existing social media, Sora provides an algorithmically recommended feed and pushes personalized content based on the people users interact with and their interests..- 2.7k

-

A single picture can generate cinematic digital human video: Alibaba Cloud announces open-source Tongyi Wanxiang Wan2.2-S2V video generation model

August 27 news: yesterday evening, Alibaba Cloud announced that it had open-sourced Tongyi Wanxiang Wan2.2-S2V, a new multimodal video generation model. Given only a static picture and a piece of audio, it can generate a cinema-grade digital human video with natural facial expressions, consistent lip movements, and smooth body motion. According to reports, a single generated video can run up to the minute level, which significantly improves the efficiency of video creation in industries such as digital human live streaming, film and TV production, and AI education. Currently, Wan2.2-S2V can drive real people, cartoons, animals, digital humans, and other types of images, and supports portraits, half-body and full-body any...- 3.4k

-

Baidu Releases Self-Developed Video Generation Model MuseSteamer: Generate Cinematic HD Audio Video from a Single Image

July 2 news: Baidu's commercial R&D team released its self-developed video generation model "MuseSteamer" and the creation platform "Painted Thinking". MuseSteamer is the world's first video model to generate Chinese audio and video in an integrated way. The technology jointly creates the picture, sound effects, and character voice lines, breaking away from the fragmented "picture first, then dubbing" workflow of traditional AIGC video. It is reported that MuseSteamer ranks first worldwide on the authoritative VBench I2V leaderboard with a total score of 89.38%, and supports the generation of a 10-second 1...- 2.5k

-

Volcano Engine to Release New Doubao Video Generation Model Tomorrow, with Support for Seamless Multi-Shot Narrative

June 10 news: ByteDance's Volcano Engine announced on its official account today that it will release a new Doubao video generation model on June 11. According to the introduction, the new model has a number of "hard core capabilities", which 1AI lists as follows. It supports seamless multi-shot narrative: through an efficient model structure, multi-modal positional encoding, and unified multi-task modeling, the model can produce distinctive and stable multi-shot output. It supports multiple actions and free-form camera movement: after fully learning rich scenes, subjects, and actions, the model can respond more accurately to the user's fine-grained instructions, and smoothly generate multi-subject and multi-movement...- 1.5k

-

Google Veo 3: AI video generation model, the first to generate video background sound effects

Google DeepMind's Veo is an advanced video generation model with extreme realism and fidelity, support for 4K output, and improved prompt-following capabilities and creative control. Veo version 3 adds native audio generation, capable of generating sound effects, ambient soundscapes, and even dialog. Veo helps creatives produce high-quality videos more easily. It supports features such as native audio generation, style matching, and precise camera control for a variety of scenarios, from movie production to game development. v...- 6.4k

-

Google's Most Powerful Video Generation AI Model, Veo 3, Unveiled: Producing Background Sound, Character Dialogue, and More!

May 21 news: at this year's I/O developer conference, Google released Veo 3, a new generation of its video generation model and its first model that can generate background sound effects for video. It not only synthesizes the picture but also adds matching sound effects to scenes such as birdsong or street traffic, and can even generate character dialog. Google says Veo 3 also excels at physics simulation and lip syncing. Currently, the model is only available to Gemini Ultra users in the U.S., as well as enterprise users of Vertex AI, and it has also been integrated into Google's AI Shadow...- 1.4k

-

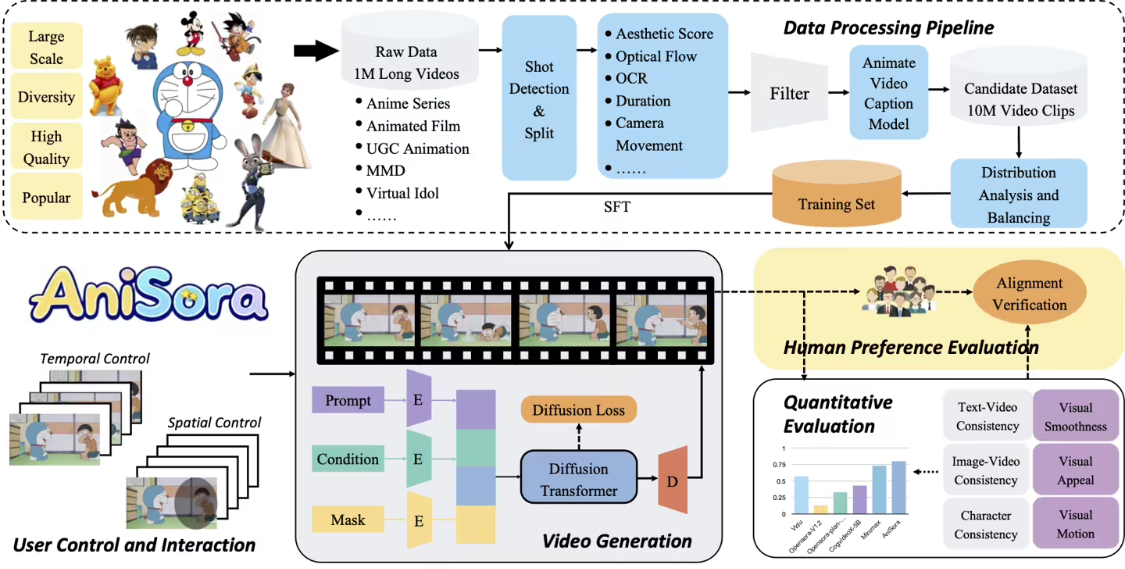

Bilibili team open-sources anime video generation model AniSora: one click to create clips in different styles

May 18 news: on May 12, the Bilibili team open-sourced the anime video generation model AniSora. AniSora can create anime video clips in a variety of styles with one click, including series episodes, Chinese original animation, manga adaptations, VTuber content, anime PVs, and guichu (remix) animation. The Bilibili team says that animated content is highly sought after in today's film and television industry. Although advanced models such as Sora, Kling, and CogVideoX perform well in natural video generation, they still fall short on anime video. Moreover, due to...- 1.8k

-

Volcano Engine Releases Seedance 1.0 lite, a Doubao Video Generation Model: Film- and TV-Grade Quality, Dramatic Speed Improvements

May 13 news: at the FORCE LINK AI Innovation Tour held in Shanghai today, Volcano Engine officially released a series of AI model upgrades, including the video generation model Seedance 1.0 lite, the Doubao 1.5 visual deep-thinking model, and an upgraded Doubao music model. With a more comprehensive model matrix and a richer set of agent tools, the company aims to help enterprises connect the application chain from business to agents. According to 1AI, this release of Seedance 1.0 lite...- 10.8k

-

Sand AI Releases Open-Source Video Generation Model MAGI-1: Video Generation AI from Tsinghua Special Prize Winner's Team Goes Viral Overnight

Another heavyweight open-source player has emerged in the video generation space. On April 21, 2025, Sand AI, the startup founded by Marr Prize and Tsinghua Special Prize winner Cao Yue, launched its own large model for video generation: MAGI-1. It is a world model that generates video by autoregressively predicting sequences of video chunks, with natural and smooth results, and multiple versions are available for download. According to the official introduction, video generated by MAGI-1 has the following characteristics: 1. High smoothness with no stutter, and it can be extended indefinitely. It can generate a continuous long visual in a single take...- 8.3k

-

Google's Veo 2 video generation model comes to Gemini, users can create 8-second 720p videos

April 16 news: Google has announced that it is bringing its Veo 2 video generation AI model to Gemini Advanced subscribers. The move is designed to counter competition from OpenAI's Sora video generation platform and gain a foothold in an increasingly competitive market. Just two weeks ago, Runway, a strong rival in the synthetic media space, released its fourth-generation video generator and raised more than $300 million (note: roughly 2.193 billion yuan at current exchange rates) in new funding. Starting this Tuesday, Gemi...- 1.6k

-

Kuaishou Releases Kling 2.0 Video Generation Model and Kolors 2.0 Image Generation Model

April 15 news: Kuaishou today held its "Inspiration Comes True" Kling AI 2.0 model launch event and announced another upgrade to its base models, officially releasing the Kling 2.0 video generation model and the Kolors 2.0 image generation model worldwide. According to the introduction, the Kling 2.0 model maintains a global lead in dimensions such as motion quality, semantic responsiveness, and visual aesthetics, while the Kolors 2.0 model significantly improves instruction following, cinematic texture, and artistic style. Gai Kun, senior vice president of Kuaishou and head of its Community Science line, revealed that since its release in June last year, Kling AI...- 2.4k

-

Runway Releases AI Video Generation Model Gen-4: Keeps Characters, Scenes Highly Consistent

April 1 news: Artificial intelligence (AI) startup Runway on Monday released its newly developed AI video generation model, Gen-4, which the company claims is one of the highest-fidelity AI-driven video generation tools to date. Gen-4 is now available to Runway's personal and enterprise customers. According to Runway, the model's core strengths are its ability to keep characters, locations, and objects highly consistent across video scenes, maintaining a "coherent world environment," and the ability to...- 2.5k

-

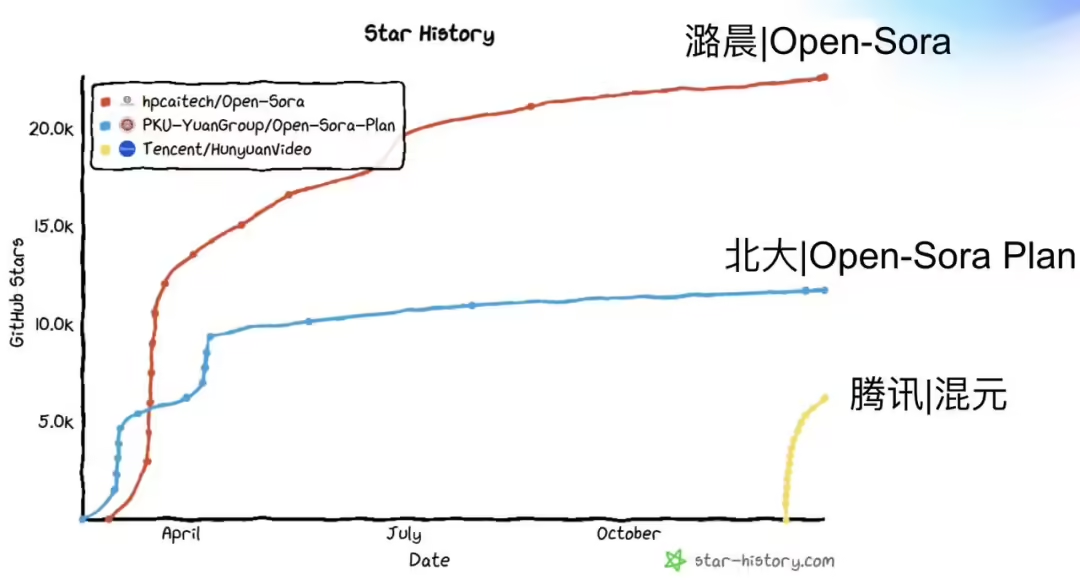

Luchen Technology Launches Open-Sora 2.0, an Open-Source Video Generation Model with Performance Close to OpenAI Sora

March 13 news: today, Luchen Technology announced the launch of Open-Sora 2.0 and fully open-sourced the model weights, inference code, and the entire distributed training pipeline. According to reports, this is a new open-source SOTA video generation model: with only 200,000 U.S. dollars (note: currently about 1.449 million yuan), that is, 224 GPUs, the team successfully trained a commercial-grade 11B-parameter large video generation model whose performance is on par with Tencent's Hunyuan and the 30B-parameter Step-Video. Luchen Technology said, from Open-Sora ...- 3.7k

-

The strongest open-source video model? Local Deployment of Alibaba's Wanxiang 2.1 (Wan2.1) Text-to-Video

Following Tencent's Hunyuan, Alibaba has also announced its open-source video generation model, Wanxiang 2.1 (Wan2.1), which it says has SOTA-level performance. Its highlights include: 1. It outperforms existing open-source models and can even be "comparable to some closed-source models". 2. It is the first video model that can generate both Chinese and English text. 3. It supports consumer-grade GPUs; the T2V-1.3B model requires only 8.19GB of video memory. At present, Wanxiang 2.1 can be deployed locally through ComfyUI, as follows: First, install the necessary tools. Please keep the "network open"...- 29.7k

-

Kunlun Wanwei Open-Sources SkyReels-V1, China's First Video Generation Model for AI Short Drama Creation

February 18 news: Kunlun Wanwei announced today that it has open-sourced SkyReels-V1, the first video generation model for AI short drama creation in China, and SkyReels-A1, the first SOTA-level expression and motion control algorithm in China built on a video base model. According to Kunlun Wanwei's official introduction, SkyReels-V1 annotates performance details, also handles emotions, scenes, and performance demands, and is trained and fine-tuned on "ten-million-scale, high-quality" Hollywood-level data. In addition, SkyReels-V1 can realize "movie and TV level characters...".- 7.2k

-

OpenAI Says It Has No Plans Yet to Launch an API for Its Video Generation Model Sora

Dec. 18 (Bloomberg) -- OpenAI said today that it has no plans to launch an application programming interface (API) for its video generation model Sora, which generates videos from text and images. During an online Q&A with members of the OpenAI development team, Romain Huet, OpenAI's head of developer experience, made it clear that "we have no plans to launch a Sora API at this time." Previously, OpenAI had to urgently shut down the Sora-based video due to far more visits than expected...- 3.5k

-

ByteDance's Doubao for PC goes live with video generation feature; internal test users can generate ten videos per day for free

ByteDance's video generation model PixelDance has officially opened internal testing in the desktop version of Doubao, and some users already have access to the feature. The internal test page shows that users can generate ten videos per day for free. According to 1AI's previous report, the PixelDance video generation model was first released at the end of September, and was initially offered as an invitation-only small-scale test to creators and enterprise customers through Jimeng AI and Volcano Engine. According to early internal test creators, when PixelDance generates a 10-second video, the best results are achieved by switching shots 3-5 times, and the scenes and characters can maintain a good...- 6.9k

-

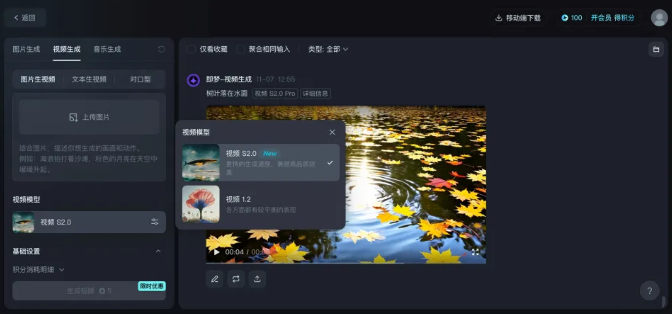

Jimeng AI Announces Open Access to Seaweed Video Generation Model

Recently, Jimeng AI announced that Seaweed, ByteDance's self-developed video generation model, is now officially open to platform users. After logging in, users can try the model by selecting "Video S2.0" under the "Video Generation" function. The Seaweed video generation model is part of the Doubao model family; with professional-grade lighting, composition, and color grading, its output is visually appealing and realistic. Based on the DiT architecture, Seaweed can also render smooth, natural large-scale motion. Tests show that the model needs only 60s to generate a high-quality AI video with a duration of 5s...- 7.9k

-

Alibaba's Tongyi Wanxiang video generation model officially launches "AI video generation" function: a large model that better understands Chinese style is here!

At the 2024 Apsara Conference, Alibaba Cloud CTO Zhou Jingren announced that its newly developed large AI video generation model, Tongyi Wanxiang AI video generation, is officially online and can be tried immediately on the official website and app. Alibaba has now also entered the domestic AI video battleground. The model has strong visual and motion generation capabilities and supports video content in a variety of artistic styles with film- and TV-grade texture. It is optimized for Chinese-style elements, supports multi-language input and variable-resolution generation, covers a wide range of application scenarios, and provides free service...- 13.2k

-

ByteDance's Doubao Large Model to Release Video Generation Model on September 24

ByteDance's Volcano Engine announced that the Doubao large model will release a video generation model on September 24, along with more capability upgrades across the model family. The Doubao large model was officially released on May 15, 2024 at the Volcano Engine Force Conference. It comes in multiple versions, including professional and lightweight versions, to suit the needs of different scenarios. The professional version supports 128K long-text processing, with strong comprehension, generation, and logic capabilities for scenarios such as Q&A, summarization, creation, and classification. The lightweight version offers lower token cost and latency, providing enterprises with flexible...- 17.8k
