April 23 - Technology media outlet MarkTechPost published a blog post yesterday (April 22) reporting that Nvidia has launched Eagle 2.5, a vision-language model (VLM) focused on long-context multimodal learning.

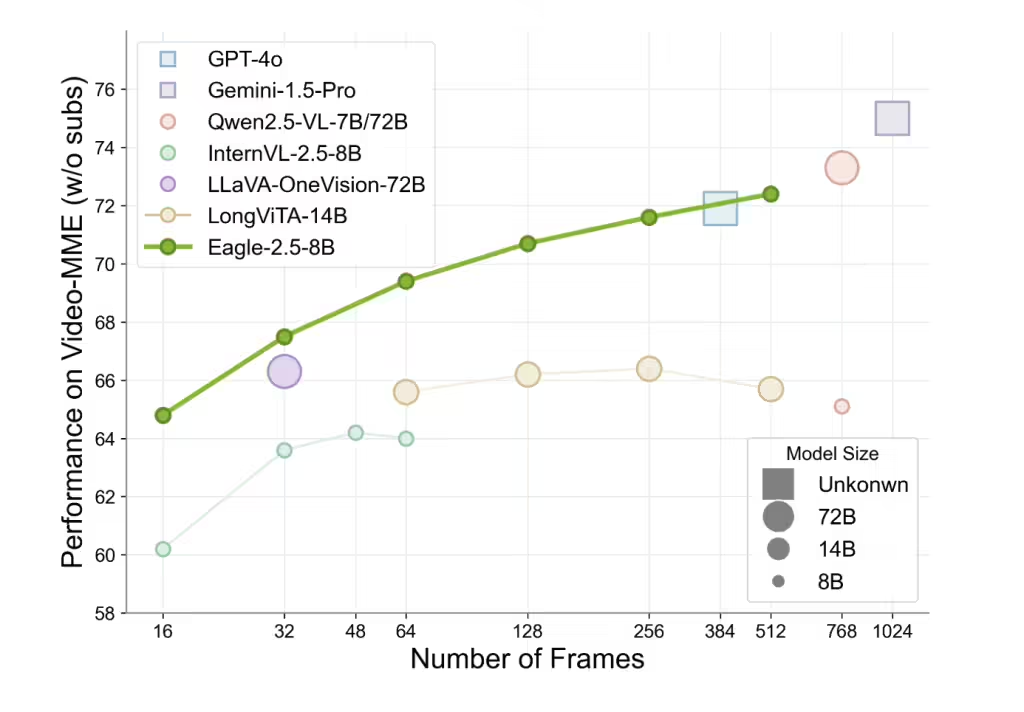

The model targets large-scale video and image understanding and is particularly strong at processing high-resolution images and long video sequences. Despite having only 8B parameters, Eagle 2.5 scored 72.4% on the Video-MME benchmark (with 512 input frames), comparable to much larger models such as Qwen2.5-VL-72B and InternVL2.5-78B.

Innovative training strategies

Eagle 2.5's results rest on two key training strategies: Information-First Sampling and Progressive Post-Training.

Information-First Sampling has two components. Image Area Preservation (IAP) retains more than 60% of the original image area while reducing aspect ratio distortion. Automatic Degrade Sampling (ADS) dynamically balances visual and textual inputs according to the context length, keeping the text intact while preserving as much visual detail as the remaining budget allows.
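
The two ideas can be illustrated with a minimal Python sketch. This is not Nvidia's implementation: the function names, the 448-pixel tile size, the 12-tile cap, the tie-breaking rule, and the 256 tokens per frame are all assumptions chosen for illustration. Only the 60% area target and the "text first, visuals from what is left" principle come from the description above.

```python
import math


def choose_tile_grid(width, height, tile=448, max_tiles=12, min_area_ratio=0.6):
    """Pick a (cols, rows) tiling for an image (hypothetical IAP-style rule).

    Among grids that keep at least min_area_ratio of the original pixel
    area, prefer the one whose aspect ratio is closest to the image's,
    breaking ties with fewer tiles.
    """
    candidates = []
    for cols in range(1, max_tiles + 1):
        for rows in range(1, max_tiles // cols + 1):
            area_ratio = min(1.0, cols * rows * tile * tile / (width * height))
            distortion = abs(math.log((cols / rows) / (width / height)))
            candidates.append((area_ratio, distortion, cols * rows, cols, rows))
    kept = [c for c in candidates if c[0] >= min_area_ratio]
    if kept:
        best = min(kept, key=lambda c: (c[1], c[2]))
    else:
        # No grid reaches the area target: fall back to the largest area.
        best = max(candidates, key=lambda c: (c[0], -c[1]))
    return best[3], best[4]


def frames_that_fit(max_context_tokens, text_tokens, tokens_per_frame, total_frames):
    """Keep the text in full, then spend the remaining budget on frames
    (hypothetical ADS-style rule)."""
    remaining = max_context_tokens - text_tokens
    if remaining < tokens_per_frame:
        raise ValueError("context too small for the full text plus one frame")
    return min(total_frames, remaining // tokens_per_frame)


def sample_frame_indices(total_frames, num_frames):
    """Spread num_frames indices uniformly across the video."""
    if num_frames >= total_frames:
        return list(range(total_frames))
    step = total_frames / num_frames
    return [int(i * step + step / 2) for i in range(num_frames)]
```

With a 32K-token context, 768 text tokens, and 256 tokens per frame, `frames_that_fit(32768, 768, 256, 512)` keeps 125 of the 512 frames, so the visual input is degraded rather than the text being truncated.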

Progressive post-training gradually extends the model's context window from 32K to 128K tokens, allowing the model to maintain stable performance across different input lengths and avoid overfitting to a single context range. These strategies, combined with a SigLIP vision encoder and MLP projection layers, keep the model flexible across diverse tasks.
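
A progressive schedule of this kind might look like the sketch below. The 32K start and 128K end come from the article; the doubling factor (and therefore the intermediate 64K stage), the function names, and the rule that each stage trains on every sample up to its limit are assumptions made for illustration.

```python
def context_schedule(start_tokens=32 * 1024, end_tokens=128 * 1024, factor=2):
    """Return the context length used at each post-training stage."""
    stages = []
    length = start_tokens
    while length < end_tokens:
        stages.append(length)
        length *= factor
    stages.append(end_tokens)
    return stages


def samples_for_stage(sample_lengths, max_tokens):
    """Indices of samples that fit in the current stage's context window.

    Shorter samples stay in the mix at every stage, so the model keeps
    seeing a range of lengths rather than only the longest ones.
    """
    return [i for i, n in enumerate(sample_lengths) if n <= max_tokens]


if __name__ == "__main__":
    lengths = [8_000, 40_000, 100_000]  # hypothetical token counts per sample
    for stage in context_schedule():
        print(stage, samples_for_stage(lengths, stage))
```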

Customized dataset

The training data pipeline for Eagle 2.5 combines open-source resources with a custom dataset, Eagle-Video-110K, which is designed for long-video understanding and is annotated in two complementary ways.

The top-down approach uses story-level segmentation, combining human-annotated chapter metadata with dense captions generated by GPT-4; the bottom-up approach uses GPT-4o to generate question-answer pairs for short clips, capturing spatio-temporal details.

Filtered by cosine similarity, the dataset favors diversity over redundancy while preserving narrative coherence and fine-grained annotation, which significantly improves the model's performance on high-frame-count (≥128 frames) tasks.
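
Cosine-similarity filtering can be sketched as a greedy pass that drops any item too similar to one already kept. The article does not say which embeddings or threshold were used to build Eagle-Video-110K, so the 0.9 threshold, the toy vectors, and the function names below are assumptions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def filter_redundant(embeddings, threshold=0.9):
    """Return indices of items to keep.

    An item is kept only if its similarity to every previously kept
    item is below the threshold (greedy, order-dependent).
    """
    kept = []
    for i, emb in enumerate(embeddings):
        if all(cosine_similarity(emb, embeddings[j]) < threshold for j in kept):
            kept.append(i)
    return kept


if __name__ == "__main__":
    # Toy embeddings: the second is nearly identical to the first.
    clips = [[1.0, 0.0], [0.99, 0.01], [0.0, 1.0]]
    print(filter_redundant(clips))
```

The greedy pass is quadratic in the number of kept items, which is fine for a sketch; a dataset at this scale would more likely use an approximate nearest-neighbor index.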

Performance

Eagle 2.5-8B performs well across a range of video and image understanding tasks. On video benchmarks, it scored 74.8 on MVBench, 77.6 on MLVU, and 66.4 on LongVideoBench; on image benchmarks, it scored 94.1 on DocVQA, 87.5 on ChartQA, and 80.4 on InfoVQA.

Ablation studies show that removing IAP and ADS degrades performance, while progressive training and the addition of the Eagle-Video-110K dataset yield more consistent improvements.
