April 24 news: In a blog post published yesterday (April 23), Anthropic reported that its cutting-edge AI model Claude is being misused by malicious actors for activities such as "influence-as-a-service" operations, credential stuffing, recruitment scams and malware development.

Anthropic has put several safety measures in place for Claude that have successfully blocked many harmful outputs, but threat actors keep trying to bypass these protections. 1AI cites the blog post on the report, which uses multiple case studies to show how malicious actors can leverage AI for complex operations, including political influence manipulation, credential theft, hiring scams and malware development.

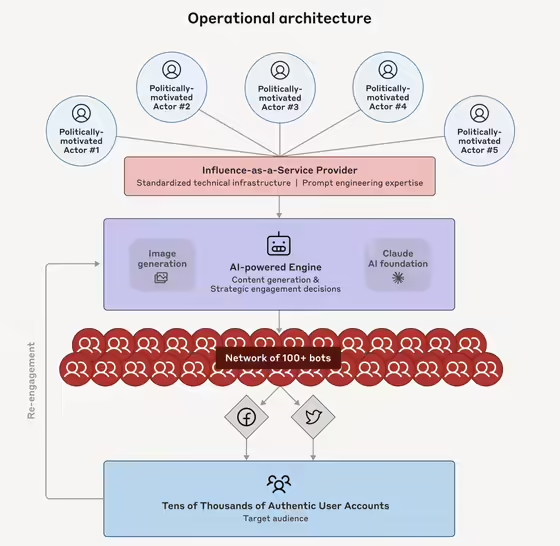

One of the most notable cases involved a for-profit organization that created more than 100 fake accounts on X and Facebook. Operating without human intervention, the accounts commented in multiple languages, posed as real users and interacted with tens of thousands of genuine users to spread politically slanted narratives.

Another case involved a credential stuffing operation in which a malicious actor used Claude to improve its ability to identify and process leaked usernames and passwords associated with security cameras, while also gathering information on internet-facing targets against which to test those credentials.

The report also found that a user with limited technical skills used Claude to develop malware beyond his own skill level. None of these cases confirmed that the operations were successfully deployed, but they show how AI can lower the barrier to malicious activity.

Using techniques such as Clio and hierarchical summarization, the team analyzed large volumes of conversation data to identify patterns of misuse, and combined this analysis with classifiers that detect potentially harmful requests, ultimately banning the accounts involved. The team cautioned that as AI systems become more powerful, semi-autonomous and complex abuse schemes are likely to become more common.