April 30th. Google today announced the launch of three new AI experiments designed to help users learn foreign languages in a more personalized way.

The first experiment helps users quickly master the expressions they need for their current situation. The second encourages users to drop textbook language and communicate in a more authentic, colloquial way. The third uses the phone's camera to let users learn vocabulary from real-life scenes.

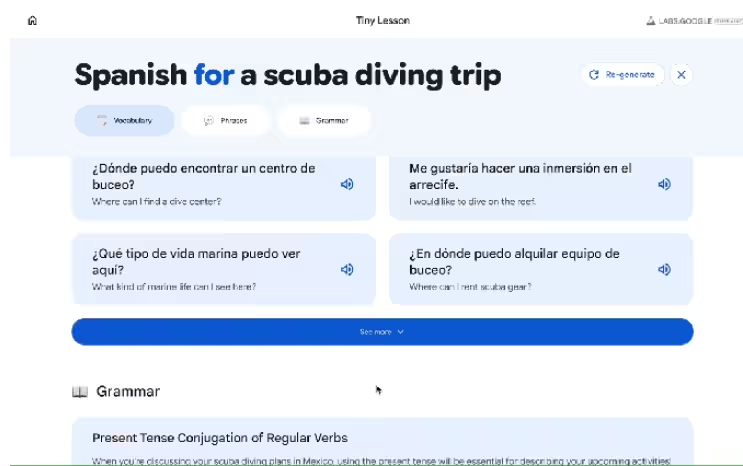

Google launched the "Tiny Lesson" experiment, in which users only need to describe a situation, such as losing a passport, and the system automatically generates the corresponding vocabulary and grammar suggestions. It also provides practical expressions such as "I don't know where I left it" or "I want to call the police".

Another experiment, "Slang Hang," is designed to help users move away from stiff written language. Google says that people learning a language usually learn formal terms first, so the experiment is intended to introduce a more everyday way of speaking that includes local slang.

With this feature, users can generate a realistic conversation between native speakers and follow it as it unfolds sentence by sentence. For example, they can learn from a dialogue between a vendor and a customer, or between two friends who haven't seen each other in years reuniting on the subway. When they come across a word they don't understand, they can hover over it to see its meaning and usage.

Google says the experiment is still in development, and some slang usage may be inaccurate or even made up. Users are therefore advised to consult authoritative sources for verification.

The third experiment, "Word Cam", combines image recognition with language learning: the user takes a picture of their surroundings, and Gemini recognizes the objects in the frame and labels them in the language being learned. It also provides additional related vocabulary.

Google points out that this feature is centered on increasing sensitivity to everyday words: people may often know how to say "window" but have never thought about how to say "blinds".

Google emphasized that the original purpose of the three experiments was to explore how AI could make the language learning process more relevant and personal.

1AI learned from Google that these experiments support a variety of languages, including Chinese, English, French, Spanish, Portuguese, Arabic, Korean, Japanese, Russian, and others, and that users can access them through Google Labs.