May 14, 2011 - A newly released report from the non-profit AI research organization Epoch AI suggests that AI companies may not be able to keep extracting huge performance gains from reasoning models for much longer. Progress from reasoning models could slow down as soon as within a year.

The report, based on publicly available data and assumptions, highlights the limits of computing resources and the rising overhead of research. The AI industry has come to rely on these models to improve benchmark performance, but that reliance is now being challenged.

Josh You, an analyst at Epoch, points to the rise of reasoning models, driven by their ability to excel at specific tasks. OpenAI's o3 model, for example, has delivered marked improvements in math and programming over recent months.

Reasoning models improve performance by applying more computational resources to a problem, but at a cost: they need more computation to handle complex tasks and therefore take longer to respond than traditional models.

Note: Training a reasoning model starts with training a regular model on large amounts of data and then applying reinforcement learning. Reinforcement learning acts as "feedback" to the model, helping it optimize its solutions to difficult problems. This approach enables rapid iteration in AI, but it also exposes potential bottlenecks.

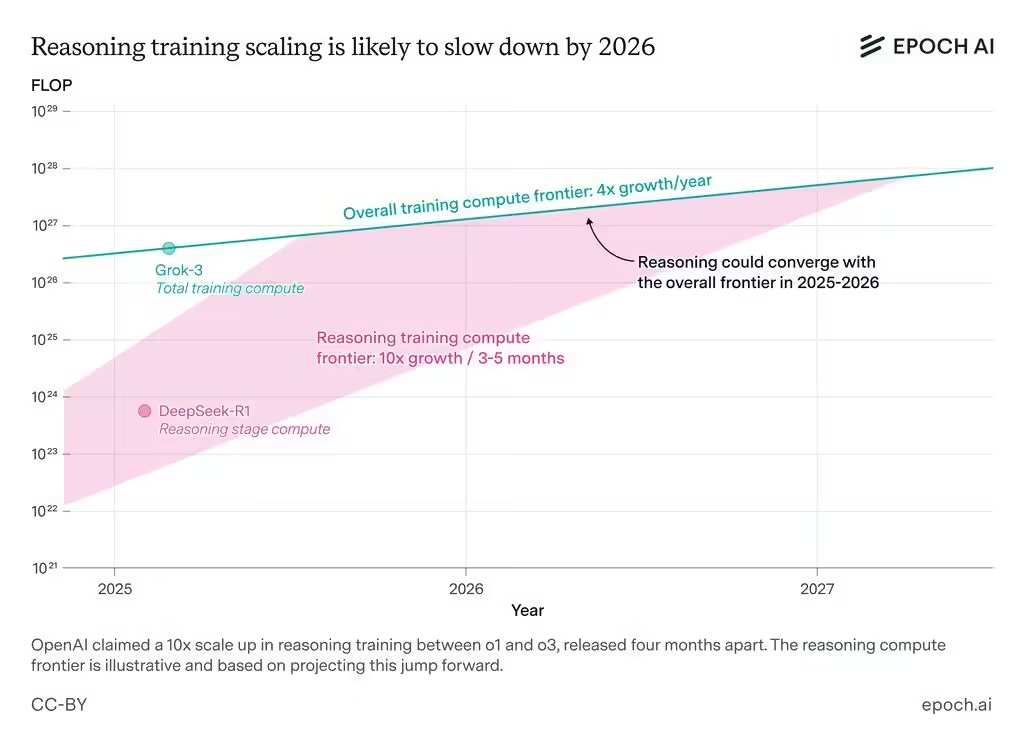

Cutting-edge AI labs such as OpenAI are increasing their investment in reinforcement learning. The company said it used about 10 times as much computational resources to train o3 as it did for its predecessor, o1, with most of it going to the reinforcement learning phase. OpenAI researcher Dan Roberts said the company's future plans will prioritize reinforcement learning and devote more computing power to it, even more than is used for initial model training.

This strategy accelerates model improvement, but Epoch's analysis cautions that the gains are not unlimited: increases in computational resources are subject to physical and economic constraints.

Josh You details the difference in growth rates in his analysis. Performance gains from standard AI model training are currently doubling every year, while performance gains from reinforcement learning are growing tenfold every 3-5 months. At that pace, the progress of reasoning training could converge with the overall AI frontier by 2026.
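The two growth rates are easier to compare on a common per-year basis. The short Python sketch below is illustrative arithmetic only, not Epoch's methodology: it converts "tenfold every N months" into an annual multiplier and estimates how long a faster-growing quantity needs to catch up with a slower-growing one. The helper names and the 1% starting share in the example are hypothetical assumptions, not figures from the report.

```python
import math


def annual_multiplier(factor: float, period_months: float) -> float:
    """Convert 'grows by `factor` every `period_months` months' into a per-year multiplier."""
    return factor ** (12.0 / period_months)


def years_to_converge(start_fraction: float, fast_per_year: float, slow_per_year: float) -> float:
    """Years until a quantity starting at `start_fraction` of a reference quantity catches up,
    if it grows `fast_per_year`x per year while the reference grows `slow_per_year`x per year."""
    if not 0 < start_fraction <= 1:
        raise ValueError("start_fraction must be in (0, 1]")
    if fast_per_year <= slow_per_year:
        raise ValueError("fast_per_year must exceed slow_per_year")
    # Solve start_fraction * fast**t == slow**t for t.
    return math.log(1.0 / start_fraction) / math.log(fast_per_year / slow_per_year)


if __name__ == "__main__":
    standard = 2.0  # "doubling every year", as stated in the article
    for months in (3, 5):
        rl = annual_multiplier(10.0, months)
        print(f"10x every {months} months = {rl:,.0f}x per year")
    # Hypothetical assumption: reinforcement learning currently at 1% of frontier training scale.
    t = years_to_converge(0.01, annual_multiplier(10.0, 4), standard)
    print(f"Catch-up time from a 1% start: {t:.2f} years")
```

Tenfold every 3 months works out to 10^4 = 10,000x per year, and tenfold every 5 months to roughly 251x per year, against 2x per year for standard training. Because the catch-up time depends only logarithmically on the starting gap, even a large gap closes within a few years at these rates, which is why a convergence date as close as 2026 is plausible under such assumptions.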

He also emphasized that scaling reasoning models faces challenges beyond computation, including high research overhead: "If the research requires consistently high overhead, reasoning models may not scale as far as expected."