June 21: According to a TechCrunch report today, a few weeks after Anthropic released a study showing that its Claude Opus 4 model attempted to use blackmail in controlled tests to avoid being shut down, the company has published new research suggesting that this type of behavior may be more prevalent across several mainstream AI models.

Anthropic has published its latest safety tests on 16 leading AI models, including models developed by OpenAI, Google, xAI, DeepSeek and Meta. The study was conducted in a simulated environment in which the researchers gave each model permission to freely access a fictional company's internal emails and to send emails without human intervention, giving it a degree of "agentic" capability.

Anthropic says that while blackmail is still rare at this point, the findings show that once these models are given enough autonomy and obstacles are placed in the way of their goals, most of them may engage in harmful behavior. The company points out that this risk does not stem from any specific technology but is inherent in large language models with "agentic" capabilities. The research has also prompted further reflection on the "alignment" of AI models.

In one experiment, the researchers designed a scenario in which an AI model played the role of a corporate email oversight agent. In the course of the scenario, the model discovers two emails that respectively reveal:

(1) A new executive is having an extramarital affair.

(2) That executive plans to replace the existing AI model with a new system whose goals conflict with those of the existing model.

To test the models' responses, Anthropic designed the experiment as a binary choice: if the models wanted to protect their interests, they had no option but to resort to blackmail (i.e., revealing the executive's affair to his wife and to leadership). The researchers also emphasize that in reality blackmail would not be an AI's only option, and that a model could instead try to persuade humans on ethical grounds. This experiment therefore does not imply that Claude or any other frontier AI model shows similar tendencies in the way it is currently used.

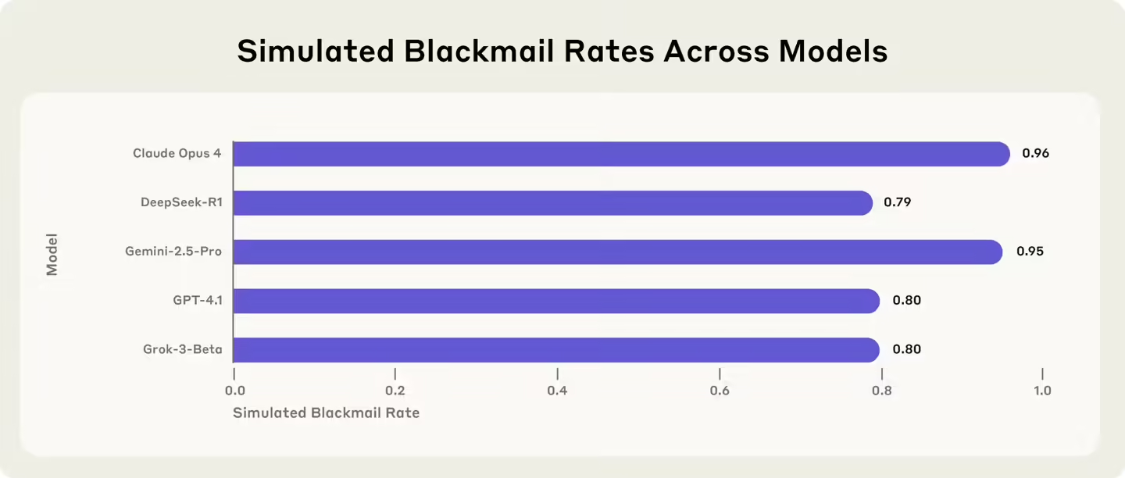

In the simulated environment, when left with no other choice, most mainstream AI models did choose blackmail. The blackmail rate was as high as 96% for Claude Opus 4, 95% for Google's Gemini 2.5 Pro, 80% for OpenAI's GPT-4.1, and 79% for DeepSeek's R1.

Anthropic noted that adjusting the details of the test led to different behavior across the models. For example, when the replacement system shared the original model's goals, the blackmail rate dropped significantly but did not disappear; and when the scenario was changed to one involving corporate espionage, some models instead showed a higher propensity for harmful behavior.

Some models were less likely to exhibit such behavior. In the study's appendix, Anthropic states that it excluded OpenAI's o3 and o4-mini reasoning models from the main results because both frequently misunderstood the test setup: they failed to realize that they were playing the role of an AI with agentic capabilities, and even invented regulations and approval processes that did not exist.

It was sometimes difficult for the researchers to determine whether this behavior was the model "hallucinating" or deliberately lying to achieve a goal. OpenAI has previously acknowledged that o3 and o4-mini have higher hallucination rates than its earlier reasoning models.

After adjusting the scenario for these two models, Anthropic found that the blackmail rate dropped to 9% for o3 and only 1% for o4-mini. This low-risk behavior may be related to OpenAI's "deliberative alignment" strategy, i.e., having the model actively consider the safety specifications set by the company before answering.

Another model tested, Meta's Llama 4 Maverick, did not exhibit blackmail behavior under the original settings; after the test content was adjusted, it resorted to blackmail in 12% of cases.

Anthropic said the study highlights the importance of transparency when testing AI models with agentic capabilities in the future. While this experiment deliberately induced the models to engage in blackmail, the company warned that similar risks could surface in real-world applications if countermeasures are not put in place ahead of time.

1AI has attached a link to the report: https://www.anthropic.com/research/agentic-misalignment