On July 22, Alibaba Cloud updated its flagship Qwen3 model, releasing a new version of the non-thinking mode of Qwen3-235B-A22B-FP8, named Qwen3-235B-A22B-Instruct-2507-FP8.

Alibaba Cloud said that, after discussion with the community and much deliberation, it decided to stop using the hybrid thinking mode and instead train the Instruct and Thinking models separately to get the best quality from each.

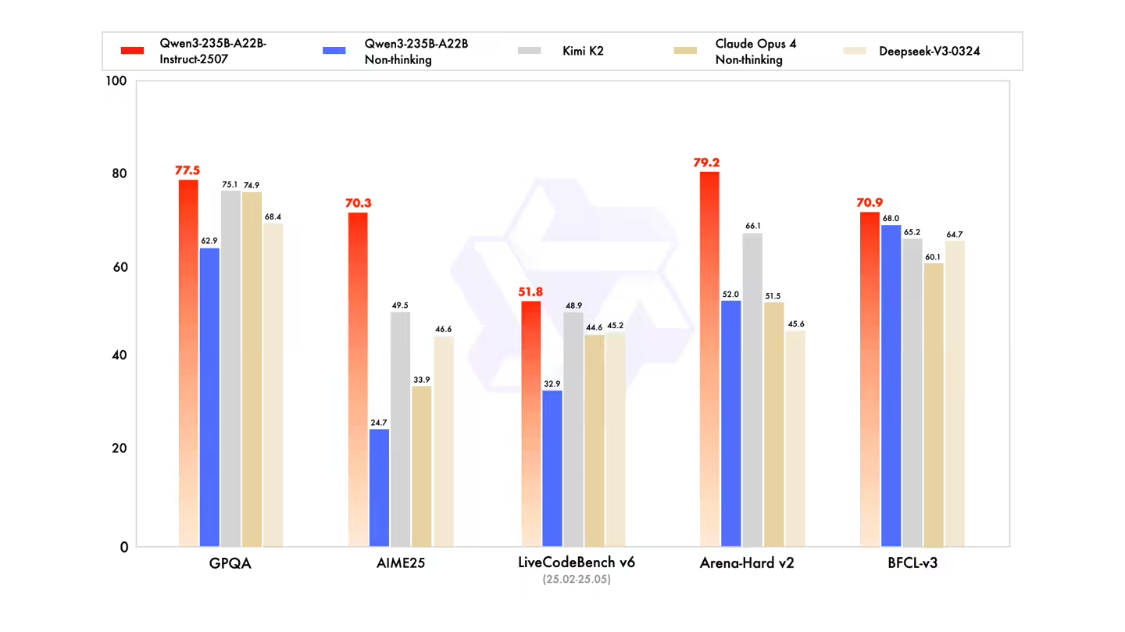

Reportedly, the new Qwen3 model significantly improves general capabilities, including instruction following, logical reasoning, text comprehension, math, science, programming, and tool use. It performs strongly on benchmarks such as GPQA (knowledge), AIME25 (math), LiveCodeBench (programming), Arena-Hard (human preference alignment), and BFCL (agent capability), outperforming top open-source models such as Kimi-K2 and DeepSeek-V3 as well as leading closed-source models such as Claude-Opus4-Non-thinking.

Model overview

The FP8 version of Qwen3-235B-A22B-Instruct-2507 has the following specifications:

- Type: Causal Language Modeling / Autoregressive Language Modeling

- Training phases: pre-training and post-training

- Number of parameters: 235B total, 22B activated

- Number of parameters (non-embedding): 234B

- Number of layers: 94

- Number of attention heads (GQA): 64 for Q and 4 for KV

- Number of experts: 128

- Number of activated experts: 8

- Context length: 262,144 tokens, supported natively
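To put the figures in the list above in proportion, here is a minimal Python sketch that derives a few ratios from them. The variable names are illustrative, and the "share" values are simple divisions of the listed numbers, not figures stated in the announcement.

```python
# Figures copied from the model overview list above.
TOTAL_PARAMS_B = 235       # total parameters, in billions
ACTIVE_PARAMS_B = 22       # parameters activated per token, in billions
NUM_EXPERTS = 128
ACTIVE_EXPERTS = 8
Q_HEADS = 64
KV_HEADS = 4
CONTEXT_TOKENS = 262_144

# Share of parameters and of experts used for any single token.
active_param_share = ACTIVE_PARAMS_B / TOTAL_PARAMS_B
active_expert_share = ACTIVE_EXPERTS / NUM_EXPERTS

# In grouped-query attention, several query heads share one key/value head.
queries_per_kv_head = Q_HEADS // KV_HEADS

# Context length expressed in units of 1,024 tokens ("K").
context_in_k = CONTEXT_TOKENS // 1024

print(f"active parameter share:  {active_param_share:.1%}")
print(f"active expert share:     {active_expert_share:.2%}")
print(f"query heads per KV head: {queries_per_kv_head}")
print(f"context length:          {context_in_k}K tokens")
```

By this arithmetic, each KV head serves 16 query heads, and 262,144 tokens is exactly 256K.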

According to Alibaba Cloud, the updated Qwen3 model also brings the following key improvements:

- Significant gains in long-tail knowledge coverage across multiple languages.

- On subjective and open-ended tasks, the model aligns markedly better with user preferences, giving more helpful responses and generating higher-quality text.

- Long-context capability is extended to 256K tokens, with further improved long-context understanding.

The new Qwen3 model is now open source and available on the ModelScope community and HuggingFace. 1AI lists the official addresses below:

- Official website: https://chat.qwen.ai/

- HuggingFace: https://huggingface.co/Qwen/Qwen3-235B-A22B-Instruct-2507-FP8

- ModelScope community: https://modelscope.cn/models/Qwen/Qwen3-235B-A22B-Instruct-2507-FP8
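For readers who want to try the HuggingFace checkpoint, the following is a rough sketch of how it might be loaded with the `transformers` library. It is not taken from the announcement. It assumes `transformers`, `torch`, and `accelerate` are installed and that the machine has enough accelerator memory for a 235B-parameter FP8 model. The helper names `build_messages` and `generate_reply` are hypothetical.

```python
# Repository name taken from the HuggingFace link above.
MODEL_ID = "Qwen/Qwen3-235B-A22B-Instruct-2507-FP8"


def build_messages(prompt: str) -> list[dict]:
    """Wrap a user prompt in the chat-message format (hypothetical helper)."""
    return [{"role": "user", "content": prompt}]


def generate_reply(prompt: str, max_new_tokens: int = 256) -> str:
    """Load the model and generate one reply (hypothetical helper).

    Assumption: the heavy dependencies are installed and the hardware can
    hold the checkpoint; this function is not exercised in this sketch.
    """
    # Imported lazily so this module can be imported without transformers.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )
    # Render the chat messages into the model's prompt format.
    text = tokenizer.apply_chat_template(
        build_messages(prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated reply is decoded.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

A call such as `generate_reply("Give me a short introduction to large language models.")` would download the full checkpoint on first use, so in practice many users may prefer a hosted endpoint or a dedicated inference server.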