On August 11, Kunlun Wanwei officially released the SkyReels-A3 model.

According to the company, SkyReels-A3 is built on "a DiT (Diffusion Transformer) video diffusion model + a frame interpolation model for video extension + reinforcement-learning-based motion optimization + camera movement control", enabling full-modal, audio-driven digital human creation of any duration.

The SkyReels-A3 model is said to bring new experiences to users in the following four areas:

Text prompts can be used to change what appears on screen;

Movement and interaction are more natural, including interactions with products, hand gestures while speaking, etc.;

Camera movement is used and controlled in a more sophisticated way, giving artistic scenes such as music and music videos greater visual appeal;

It can generate minute-level single-scene videos, with output of up to 60 seconds; multi-scene videos can be of unlimited duration.

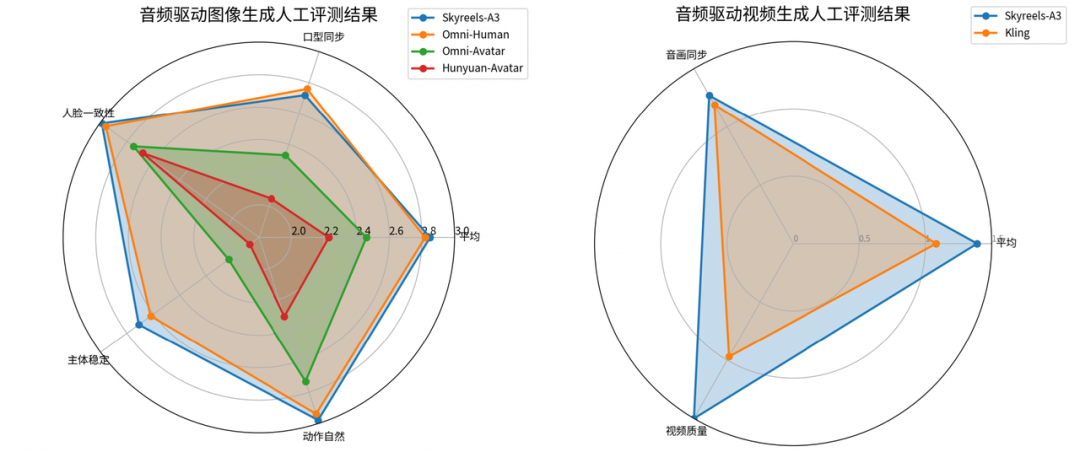

In a quantitative evaluation, SkyReels-A3 was compared with methods such as the advanced open-source model OmniAvatar and the closed-source model OmniHuman across different audio-driven scenarios. The results show that SkyReels-A3 outperforms these methods on most metrics, especially the lip synchronization metrics (Sync-C and Sync-D).

In addition, Kunlun Wanwei conducted a human evaluation to reflect the quality of the model's output more fully. The results show that SkyReels-A3 achieves the best results for face and subject stability and for naturalness of movement, performs on par with the best competing methods in lip synchronization and face-related criteria, and also has clear advantages in audio-video synchronization and video quality.

🔗 SkyReels-A3 project homepage: https://skyworkai.github.io/skyreels-a3.github.io/

🔗 SkyReels official website: https://www.skyreels.ai/home

🔗 SkyReels series open-source models: https://huggingface.co/Skywork