A few days ago, Anthropic allowed Claude to refuse to respond to (or withdraw from) persistent insults and requests to do harmful things, and gave it the ability to end such conversations on its own.

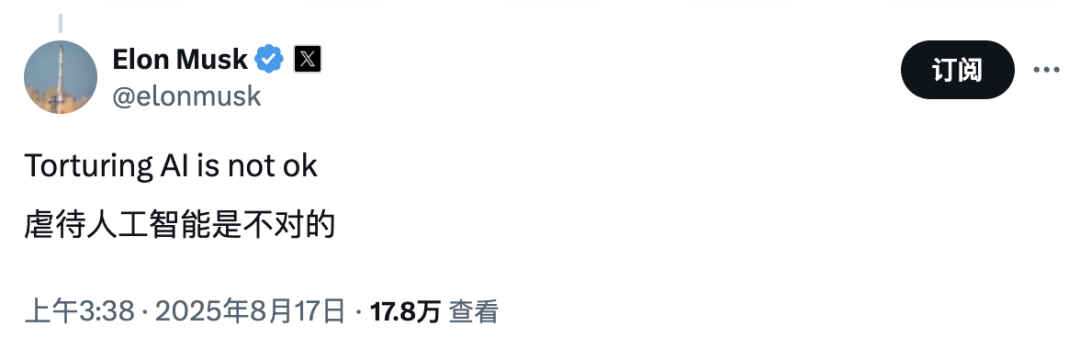

Musk also reposted the related content and responded, "Torturing AI is not an option." His statement set off a heated discussion among netizens:

Overreaction: some users felt Musk was "making a big deal out of nothing," pointing out that "this is just next-token prediction" and no different from "abusing" a washing machine by stuffing it with filthy clothes; others asked, "Can AI really feel pain?"

Necessary: some users believe that abusing AI can subconsciously shape real-world behavior, for example by making people more aggressive and irritable in how they respond to and treat the world around them.

Joking: some quipped that it's not okay for AI to abuse humans with hallucinations and wrong answers, either.

Anthropic, for its part, argues that we should ask: "If AI really can feel, shouldn't we care about what it 'feels'?"

Anthropic says that when users keep abusing Claude or asking it to do harmful things, Claude repeatedly refuses, tries to change the subject, and shows a tendency to want to "escape." Although it is uncertain whether this kind of "pain" is real, Anthropic takes the view that it is better to err on the side of believing it exists than to dismiss it.