On September 25th, at the 2025 Apsara Conference, Alibaba Group CEO and Alibaba Cloud Intelligence Chairman and CEO Eddie Wu (Wu Yongming) said the company is actively advancing its RMB 380 billion AI infrastructure build-out and plans to invest further. At the conference, Alibaba launched six new models plus one brand-new model:

- Qwen3-Max: a trillion-parameter large model whose coding and tool-calling capabilities top international leaderboards

- Qwen3-Omni: a new-generation, natively omni-modal large model that truly delivers "omni-modality"

- Qwen3-VL: fully upgraded Agent and Coding capabilities, truly able to “see, understand and respond to the world”

- Qwen-Image-Edit: upgraded, truly delivering on “edit the image without changing the face, change the outfit without changing the person”

- Qwen3-Coder: 256K context for repairing entire projects, with a substantial increase in TerminalBench scores

- Wan2.5-Preview: synchronized audio-visual generation, with image generation that supports scientific charts and artistic text

- Tongyi Bailing: an enterprise-grade speech foundation model that tackles the “last mile” of deploying voice models in the enterprise

Among these, Tongyi Qianwen's Qwen3-Max, Qwen3-Omni and Qwen-Image-Edit-2509 were covered in earlier reports.

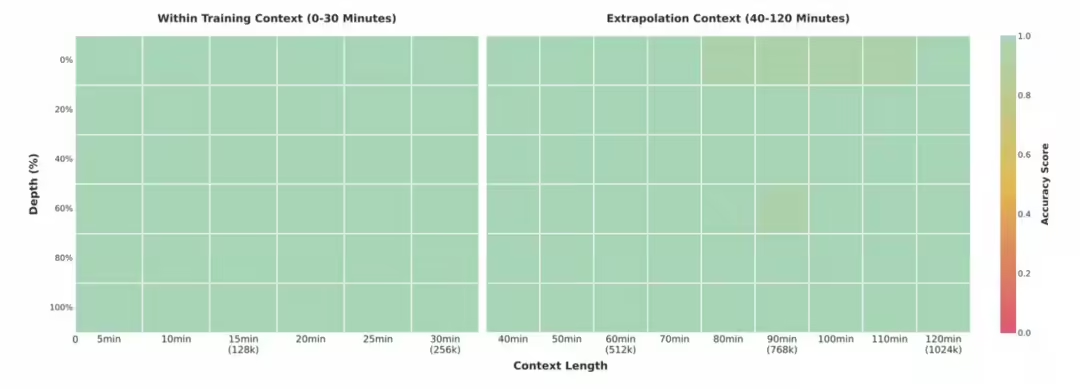

Of the remaining models, Qwen3-VL is a visual understanding model that truly achieves “seeing the world, understanding events and taking action”. It supports precise temporal localization in videos up to 2 hours long (e.g., “what does the man in red do at the 15-minute mark?”), expands OCR coverage from 19 to 32 languages with markedly better recognition of rare characters, ancient scripts and slanted text, and supports a 256K context that can be extended to 1 million tokens, making it suitable for long-video and document analysis.

This release focuses on strengthening the following capabilities:

- Visual agent: operates computer and mobile interfaces, recognizes GUI elements, understands what buttons do, and calls tools to complete tasks, reaching world-leading results on benchmarks such as OSWorld

- Visual coding: given a UI design or a flowchart, it can directly generate HTML/CSS/JS code or Draw.io diagrams, significantly improving collaboration between product and development teams

- Spatial perception and 3D grounding: determines object positions, viewpoint changes and occlusion relationships, providing a foundation for embodied AI, robot navigation, AR/VR and more

- Ultra-long video understanding and behaviour analysis: beyond understanding two hours of video content, it can answer precise questions about timing and behaviour, such as “what does the man in red do at the 15-minute mark?” or “from which direction does the ball fly into the frame?”

- Thinking edition with stronger STEM reasoning: SOTA results on MathVista, MathVision, CharXiv and others, enabling fine-grained analysis of scientific charts, formulas and figures from the literature

- Comprehensive upgrade of visual recognition: optimized pre-training data enables “recognize everything”, from celebrities, anime characters, products and landmarks to plants and animals, covering both everyday and professional scenarios

- Multilingual OCR and complex-scene support: coverage expanded to 32 languages, with more stable recognition under complex lighting, blur and tilted text, and a significant increase in recall for rare characters, ancient scripts and technical terms

- Security early warning: in real-world settings such as homes, businesses, residential communities and roads, its detection of at-risk persons and events is industry-leading

- Native long-context support: 256K natively, extendable to 1 million tokens, enabling complete memory and precise retrieval over an entire course's teaching materials or hours of meeting video

Qwen-Image-Edit, the open-source image editing model, has also been upgraded. The new version supports multi-image reference editing, improves consistency for faces, products and text, and natively supports ControlNet. It reaches industrial-grade stability for tasks such as editing text and changing outfits, meeting demanding use cases in e-commerce, design and advertising.

Core highlights of this upgrade:

- Multi-image editing: Qwen-Image-Edit-2509 is built on the Qwen-Image base model and, beyond single-image editing, now supports multi-image input, enabling combinations such as “person + person”, “person + product” and “person + scene”

- Improved single-image consistency: compared with the previous Qwen-Image-Edit, Qwen-Image-Edit-2509 significantly improves consistency across several dimensions, mainly:

- Person identity consistency: better preservation of facial identity, with support for portraits in various styles and pose changes

- Product consistency: better preservation of product identity, with support for editing various product posters

- Text consistency: in addition to changing text content, supports editing fonts, colours and materials

- Native ControlNet support: accepts guidance such as depth maps, edge maps and keypoints
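Edge maps like those listed above are normally produced by a separate preprocessing step before being passed to a ControlNet-style model. The sketch below shows one simple way to build such a map, assuming a grayscale image given as nested Python lists. It uses a Sobel gradient-magnitude threshold rather than the Canny detector many ControlNet pipelines use, and the function name `sobel_edge_map` is hypothetical. It is not how Qwen-Image-Edit-2509 prepares its control inputs, which the announcement does not describe.

```python
from typing import List

Image = List[List[float]]


def sobel_edge_map(gray: Image, threshold: float) -> List[List[int]]:
    """Hypothetical helper: return a binary edge map (1 = edge) from 3x3 Sobel gradients.

    Border pixels are left as 0 for simplicity.
    """
    h, w = len(gray), len(gray[0])
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # horizontal gradient kernel
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # vertical gradient kernel
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    p = gray[y + dy - 1][x + dx - 1]
                    gx += kx[dy][dx] * p
                    gy += ky[dy][dx] * p
            # Mark the pixel as an edge when the gradient magnitude is large enough
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 1
    return edges


if __name__ == "__main__":
    # A vertical step from black (0) to white (255) between columns 1 and 2
    step = [[0, 0, 255, 255, 255] for _ in range(5)]
    for row in sobel_edge_map(step, 100.0):
        print(row)
```

A vertical step edge lights up the two columns on either side of the boundary; a control image of this kind tells the editing model where structure must be preserved.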

Qwen3-Coder, the code specialist, has received a capability upgrade. Joint training improved its TerminalBench score; it had previously become the second most popular coding model on the OpenRouter platform (IT Home note: after Claude Sonnet 4). With 256K context support, it can understand and fix an entire project-level codebase in one pass, with faster inference, lower token consumption and better security, and developers describe it as “one-click AI repair for complex projects”.

Core highlights of this upgrade:

- Joint training for agentic coding: jointly optimized with Qwen Code and Claude Code, with significant improvements in CLI scenarios

- Project-level code understanding: 256K context support for complex cross-file, multi-language projects

- Inference optimization: faster and better results with fewer tokens than the previous generation

- Code security upgrades: enhanced vulnerability detection and malicious-code filtering, moving towards “responsible AI”

- Multimodal input: combined with Qwen Code, supports generating code from an uploaded screenshot plus natural-language instructions, a world-leading capability

Wan2.5-Preview, the synchronized audio-visual creative engine, supports native audio-video synchronization for the first time and comprehensively enhances three core capabilities (video generation, image generation and image editing) to meet commercial content production needs such as advertising, e-commerce and film and television.

Video generation: a 10-second cinematic “audio-visual symphony”:

- Native audio synchronization: videos come with their own voices (including multiple speakers), ASMR, sound effects and music, supporting Chinese, English, minor languages and dialects, with picture and sound tightly aligned

- 10-second video generation: twice the previous duration, up to 1080P at 24fps, with markedly better motion and structural stability and stronger narrative capability

- Upgraded instruction following: supports complex sequential-change instructions, camera control and structured prompts, faithfully reproducing user intent

- Image-to-video identity preservation: significantly better consistency of visual elements such as characters and products, making it usable for commercial advertising and virtual-idol scenarios

- Audio-driven generation: supports uploading custom audio as a reference and generating video from a prompt or a first-frame image, achieving “tell my story in my voice”
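For a sense of scale, the stated maximum of 10 seconds at 24fps works out as follows, assuming “1080P” means a 1920×1080 frame (the announcement does not give the aspect ratio):

$$
10\ \text{s} \times 24\ \tfrac{\text{frames}}{\text{s}} = 240\ \text{frames}, \qquad 240 \times (1920 \times 1080) = 497{,}664{,}000\ \text{pixels}
$$

That is roughly half a billion pixels per clip, all of which must stay consistent with the synchronized audio track.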

Text-to-image: a master of design:

- Aesthetic quality upgrade: improved realistic lighting and fine detail, and good at reproducing different art styles and design aesthetics

- Stable text rendering: supports Chinese, English, minor languages, artistic text, long text and complex layouts, producing posters and logos in one shot

- Direct chart generation: outputs structured graphics such as scientific diagrams, flowcharts, data charts, diagrams and text tables

- Upgraded instruction following: finer understanding of complex instructions with logical reasoning ability, accurately reproducing real-world IP characters and scene details

Image editing: industrial-grade retouching:

- Instruction-based editing: supports a rich range of editing tasks (background, colour, element, style) with precise instruction understanding, no professional Photoshop skills required

- Consistency preservation: supports single- or multi-image references and strongly preserves the identity of visual elements such as faces, products and styles, so that after editing “the person is still the same person, the bag is still the same bag”

Tongyi Bailing is a brand-new, enterprise-grade speech foundation model that integrates the leading Fun-ASR speech recognition model with the Fun-CosyVoice speech synthesis model, and is dedicated to solving the challenges of deploying speech in complex environments.

The Fun-ASR speech recognition model targets three major industry pain points in speech recognition: “hallucinated output”, “language confusion” and “hot-word failure”. Through an industry-first CTC + LLM + RAG architecture, it cut the hallucination rate from 78.5% to 10.7% and essentially solved the language-confusion problem.
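Taking the reported hallucination figures (78.5% before, 10.7% after) at face value, and noting that the announcement does not say how the rate is measured, the improvement can be expressed as a relative reduction and as a ratio:

$$
\frac{78.5 - 10.7}{78.5} = \frac{67.8}{78.5} \approx 0.864, \qquad \frac{78.5}{10.7} \approx 7.3
$$

In other words, about 86% of the previously hallucinated outputs are eliminated, or roughly 7.3 times fewer hallucinations than before.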

It supports dynamic term injection, with 100% accurate recall of industry terms. The Fun-CosyVoice speech synthesis model uses an innovative decoupled speech training method that significantly improves synthesis quality and supports cross-lingual voice cloning. Core capabilities at a glance:

- Much lower hallucination rate: a context-enhanced architecture feeds the CTC first-pass result to the LLM as context, cutting the hallucination rate from 78.5% to 10.7% for more stable and reliable output

- Language confusion resolved: feeding the CTC-decoded text into the LLM prompt greatly reduces “auto-translation” errors, such as English audio being transcribed as Chinese

- Strong customization: a RAG mechanism dynamically injects terminology, enabling accurate recognition of personal names, brand names and industry jargon (e.g. “ROI”, “private-domain performance”), configurable in five minutes

- Cross-lingual voice cloning: a multi-stage training method lets one voice speak to the whole world, with industry-leading voice similarity

- Full industry coverage: trained on tens of millions of hours of real audio, covering 10+ industries including finance, education, manufacturing, the internet and live streaming
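The CTC + LLM + RAG flow described above (a CTC first pass, retrieved terminology, and both fed into an LLM prompt) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Fun-ASR's implementation: it assumes a greedy CTC first pass whose blank symbol has index 0, uses `difflib` fuzzy string matching as a stand-in for the retrieval step, and the function names, glossary and prompt wording are all hypothetical.

```python
from difflib import SequenceMatcher
from typing import List, Optional, Sequence

BLANK_ID = 0  # assumption: vocabulary index 0 is the CTC blank symbol


def ctc_greedy_decode(frame_ids: Sequence[int], vocab: Sequence[str]) -> str:
    """First pass: collapse repeated frame labels, then drop blanks (greedy CTC rule)."""
    chars: List[str] = []
    prev: Optional[int] = None
    for idx in frame_ids:
        if idx != prev and idx != BLANK_ID:
            chars.append(vocab[idx])
        prev = idx
    return "".join(chars)


def retrieve_terms(hypothesis: str, glossary: Sequence[str], threshold: float = 0.6) -> List[str]:
    """Hypothetical retrieval step: keep glossary terms that fuzzily match
    some word window of the first-pass hypothesis."""
    words = hypothesis.lower().split()
    hits: List[str] = []
    for term in glossary:
        n = len(term.split())
        windows = [" ".join(words[i:i + n]) for i in range(max(len(words) - n + 1, 0))]
        best = max((SequenceMatcher(None, term.lower(), w).ratio() for w in windows), default=0.0)
        if best >= threshold:
            hits.append(term)
    return hits


def build_prompt(hypothesis: str, terms: Sequence[str]) -> str:
    """Hypothetical prompt: first-pass text as context plus the injected terms."""
    lines = [
        "Correct the speech transcript below. Do not translate it.",
        f"First-pass (CTC) transcript: {hypothesis}",
    ]
    if terms:
        lines.append("Domain terms that may occur: " + ", ".join(terms))
    return "\n".join(lines)


if __name__ == "__main__":
    first_pass = "our roy improved"  # hypothetical CTC output with a misrecognized term
    terms = retrieve_terms(first_pass, ["ROI", "Qwen", "CosyVoice"])
    print(build_prompt(first_pass, terms))
```

Here the misrecognized “roy” is close enough to “ROI” to pull the term into the prompt, and the explicit “do not translate” instruction mirrors the language-confusion point above. A real system might use embedding-based retrieval over a much larger glossary instead of string similarity.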