According to a December 10 report, the Pew Research Center released a study on Tuesday (local time) on how American teenagers use social media and AI chatbots.

Online safety for young people has become a global concern. Australia plans to introduce a social media ban for minors under 16 starting Wednesday. The impact of social media on adolescent mental health has long been debated: some studies suggest that online communities can help improve mental health, while others suggest that "doomscrolling" or excessive internet use can be harmful. Last year, the U.S. Surgeon General even called for social media platforms to carry warning labels.

The Pew study found that 97% of American teenagers go online every day, and about 40% say they are online "almost constantly." Although this share is lower than in last year's survey (46%), it is still significantly higher than it was 10 years ago, when only 24% of teens described themselves as online "almost constantly."

As AI chatbots become more common in the United States, the technology has become another key factor shaping how young Americans experience the internet.

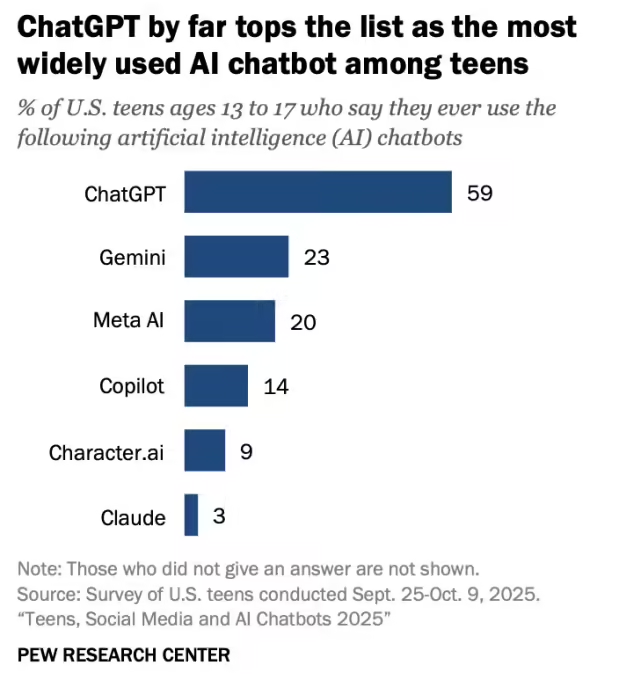

The study shows that about 30% of American teenagers use AI chatbots every day, including 4% who say they use them "almost constantly." 59% of teens say they have used ChatGPT, more than double the share for the second- and third-ranked chatbots, Google's Gemini (23%) and Meta AI (20%). 46% of U.S. teens say they use an AI chatbot at least a few times a week, while 36% say they have never used one.

The Pew study also analyzes in detail how race, age, and household income affect teens' use of chatbots. The survey shows that 68% of Black and Hispanic teens use chatbots, compared with 58% of white teens. Notably, Black teens are about twice as likely as white teens to use Gemini and Meta AI.

Michelle Faverio, a researcher at the Pew Research Center, told TechCrunch: "The racial and ethnic differences in teens' chatbot use are significant ... but it is still difficult to speculate about the reasons behind them. This pattern is consistent with other racial and ethnic differences we have observed in teens' technology use. Black and Hispanic teens are more likely than white teens to say they use certain social media platforms, such as TikTok, YouTube, and Instagram."

In terms of overall internet use, 55% of Black teens and 52% of Hispanic teens say they are online "almost constantly," compared with only 27% of white teens.

Older teens (ages 15 to 17) use social media and AI chatbots more frequently than younger teens (ages 13 to 14). By household income, about 62% of teens in households earning more than $75,000 a year say they have used ChatGPT, compared with 52% of teens in households below that threshold. However, teens in households earning less than $75,000 are twice as likely to use Character.AI (14%).

Although teens may start out using these tools only for basic questions or homework help, their interactions with AI chatbots can gradually become addictive and even potentially harmful.

The families of at least two teenagers, Adam Raine and Amaurie Lacey, have sued ChatGPT developer OpenAI over the chatbot's alleged role in their children's suicides. In both cases, ChatGPT allegedly gave the teens detailed self-harm instructions, which, tragically, they went on to carry out.

OpenAI, for its part, has argued that it should not be held responsible for Raine's death because the 16-year-old allegedly circumvented ChatGPT's safety mechanisms, violating the chatbot's terms of service. The company has not yet responded to the Lacey family's complaint.

Dr. Nina Vasan, director of Stanford University's Lab for Mental Health Innovation, told TechCrunch: "Even though these AI tools were not designed for emotional support, people are using them that way. That means companies have a responsibility to adjust their models to account for user well-being."