On February 10, LatePost reported that Doubao 2.0 may launch before this year's Spring Festival (Chinese New Year).

According to the report, the upcoming Doubao 2.0 model is the core output of Wu Yonghui's first year in charge of Seed (Wu is ByteDance Seed's head of foundational research). It is a multimodal model similar to Gemini, with 1 trillion parameters, and it is the largest model Seed has ever trained.

People at Seed told the publication that the team ran into infrastructure challenges while training the model. By their analysis, Seed had relatively neglected building up its basic capabilities while continuously playing catch-up over the past two years, so when the parameter count was scaled up for Doubao 2.0, training was unstable and hard to move forward.

Reportedly, OpenAI's head of RL Infra said in a podcast that every model team's Infra has bugs, and that a model company essentially runs at the speed at which its Infra team fixes those bugs. That speed determines how many ideas can be validated per unit of time, which can be addressed by increasing talent density.

According to the report, restructuring the Infra system is more difficult for the Seed team.

Seed's Infra team reportedly has several hundred people and supports the development and testing of dozens of models inside Seed at the same time; senior management considers it the best in the country. "Restructuring would take a great deal of manpower and resources, as well as considerable trust costs," one person at Seed said, adding that the team can only "fix the wheels while driving."

Reportedly, after Doubao 2.0's training ran into problems, several teams ended up working together for three months, mainly on model architecture, training data, and related areas, to make sure the model could launch before the Spring Festival.

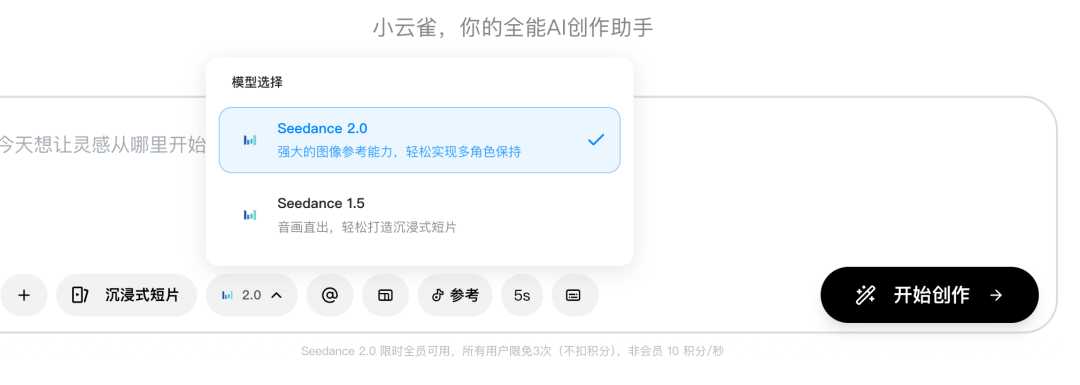

In addition, ByteDance's latest video generation model, Seedance 2.0, has launched on platforms such as Xiaoyunque and Jimeng and has drawn wide attention.

Seedance 2.0 can reportedly create cinema-grade videos from text or images. It uses a dual-branch diffusion transformer architecture that generates video and audio together. Given only a detailed prompt or an uploaded image, Seedance 2.0 can generate a multi-shot video with native audio within 60 seconds.

Notably, the model's distinctive multi-shot narrative feature can automatically generate multiple interrelated scenes from a single prompt. The AI automatically keeps characters, visual style, and atmosphere consistent across all scene transitions, with no manual editing required.