February 12 news: Zhipu has just officially launched and open-sourced its latest model, GLM-5.

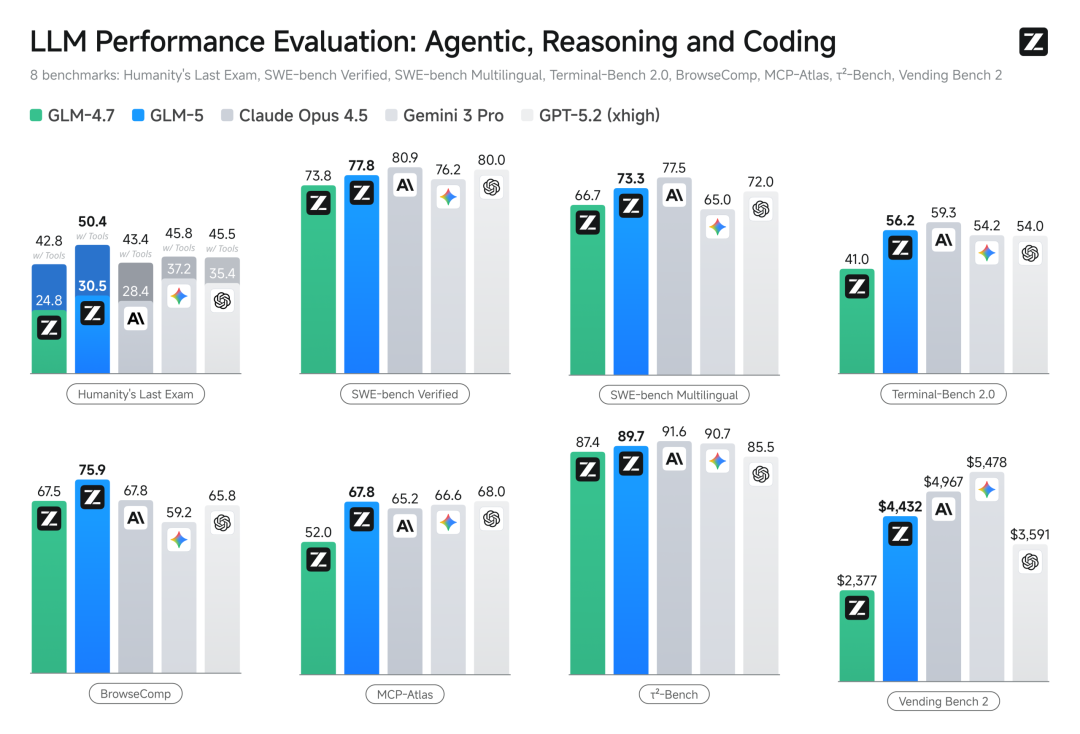

GLM-5 is described as a product of the shift towards Agentic Engineering. In coding and agent capabilities it reaches open-source SOTA, and its experience in real programming scenarios approaches that of Claude Opus 4.5, with particular strength in complex systems engineering and long-horizon agent tasks.

GLM-5 adopts a new base: the parameter count has been expanded from 355B (32B active) to 744B (40B active), and the pre-training data from 23T to 28.5T. A new "Slime" framework was built to support larger model sizes and more complex reinforcement learning tasks.
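To put the scaling figures above in proportion, here is a minimal arithmetic sketch. It uses only the numbers quoted in this article; the interpretation of "active" as the parameters used per token, and the names `MODELS` and `active_fraction`, are assumptions made for illustration.

```python
# Figures quoted in the article, in billions of parameters and trillions of data.
MODELS = {
    "previous base": {"total_b": 355, "active_b": 32, "data_t": 23.0},
    "GLM-5": {"total_b": 744, "active_b": 40, "data_t": 28.5},
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of the total parameters that is active (assumed: per token)."""
    return active_b / total_b


def growth(old: float, new: float) -> float:
    """Multiplicative growth factor from the old value to the new one."""
    return new / old


old, new = MODELS["previous base"], MODELS["GLM-5"]

for name, m in MODELS.items():
    frac = active_fraction(m["total_b"], m["active_b"])
    print(f"{name}: {frac:.1%} of parameters active")

print(f"total parameters: x{growth(old['total_b'], new['total_b']):.2f}")
print(f"active parameters: x{growth(old['active_b'], new['active_b']):.2f}")
print(f"pre-training data: x{growth(old['data_t'], new['data_t']):.2f}")
```

Under these assumptions, the total parameter count roughly doubles while the active share falls from about 9% to about 5%, so the active parameter count grows far more slowly than the model as a whole.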

At the same time, GLM-5 integrates DeepSeek Sparse Attention, which significantly reduces model deployment cost while keeping long-text performance lossless.

In particular:

GLM-5 ranks fourth in the world on the authoritative global leaderboard Artificial Analysis.

GLM-5 reaches parity with Claude Opus 4.5 in programming capability and achieves open-source SOTA on industry-recognized mainstream benchmarks.

GLM-5 scored 77.8 on SWE-bench-Verified and 56.2 on Terminal Bench 2.0, the highest scores among open-source models, with performance exceeding Gemini 3 Pro.

GLM-5 achieved the highest performance on BrowseComp (online retrieval and information understanding), MCP-Atlas (large-scale end-to-end tool calling), and τ²-Bench (tool planning and execution for autonomous agents in complex scenarios).

It is worth mentioning that GLM-5 has now completed deep inference adaptation with China's domestic computing platforms, such as Tsing, Moore Threads, Cambricon, Kunlunxin, Kuenchi, MetaX, Enflame, and Hygon. Through low-level algorithm optimization and hardware acceleration, GLM-5 achieves high-throughput, low-latency, and stable operation on domestic chip clusters.

Starting today, GLM-5 is open-sourced simultaneously on Hugging Face and ModelScope, and the model weights are released under the MIT License. Meanwhile, GLM-5 has been included in the Max package of the GLM Coding Plan.

Try it online

Z.ai: https://chat.z.ai

Zhipu Qingyan app/web version: https://chatglm.cn

Open-source links

GitHub: https://github.com/zai-org/GLM-5

Hugging Face: https://huggingface.co/zai-org/GLM-5