-

I'll teach you how to deploy a popular video model for easy text-to-video generation

Alibaba's Tongyi Wanxiang 2.1 (Wan2.1) is now the leading open-source video model and, thanks to its strong generation capabilities, has become an important tool for AI video creation. This article will teach you how to quickly install and use the Wan2.1 model for easy text-to-video generation. 1. Environment preparation: before you start, make sure your equipment meets the following requirements. Supported systems: Windows, macOS. Hardware requirements: a device with an NVIDIA graphics card is recommended, with 16 GB or more of VRAM; an RTX 3060 or better is recommended to ensure that models...

-

First samples of Veo 3.1, Google's most powerful video model, leaked: native audio, 8 seconds at 720p

On October 11, it was reported that the tech outlet TestingCatalog published an article yesterday (October 10) revealing the first real samples generated by Google's next-generation video model, Veo 3.1, which can produce 8-second clips at 720p resolution with an audio track. According to the outlet, the new Veo 3.1 model has appeared in the cloud platform Vertex AI and the video creation tool Google Vids, along with the first real video samples generated by Veo 3.1...

-

Google's Veo 3 AI video generation model is now available to Pro/Ultra members, with a new "image-to-video" feature to follow

On July 4, it was reported that Veo 3, the AI text-to-video model Google announced at this year's I/O developer conference, is now officially available to all Pro/Ultra subscribers, according to Google VP Josh Woodward. Note that Pro members can generate only 3 videos per day (Ultra's quota was not disclosed), so users who run out of quota must wait until the next day to try again or fall back to the previous-generation Veo 2 model. 1AI also notes that J...

-

Google releases Veo, a video model that can generate HD videos over 60 seconds long, with application link attached!

At the Google I/O Developer Conference 2024, Google introduced Veo, a strong competitor to OpenAI's Sora. Here's a closer look at the product. Veo highlights: Veo is Google DeepMind's text-to-video generation model, which creates high-quality 1080p videos more than 60 seconds long. It accepts both text and image prompts and produces videos that match both inputs. It also supports editing videos with text descriptions, including editing specific regions of a video. About Google Ve...

-

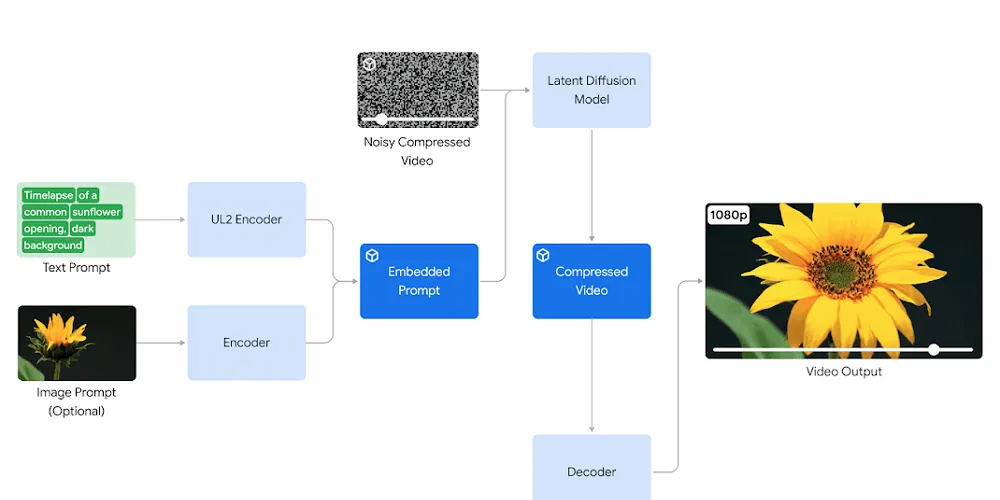

Taking aim at Sora, Google launches Veo text-to-video model: over 1 minute long, up to 1080p, supports cinematic techniques

OpenAI launched the text-to-video model Sora three months ago, sparking widespread discussion among netizens, the media, and industry insiders. At the 2024 I/O Developer Conference held today, Google launched a rival product, Veo, which can generate "high-quality" videos longer than 1 minute at resolutions up to 1080p, in a variety of visual and cinematic styles. According to Google's official press release, Veo has an advanced understanding of natural language and can interpret film terms such as "time-lapse photography" and "aerial shot". Users can use text, images...

-

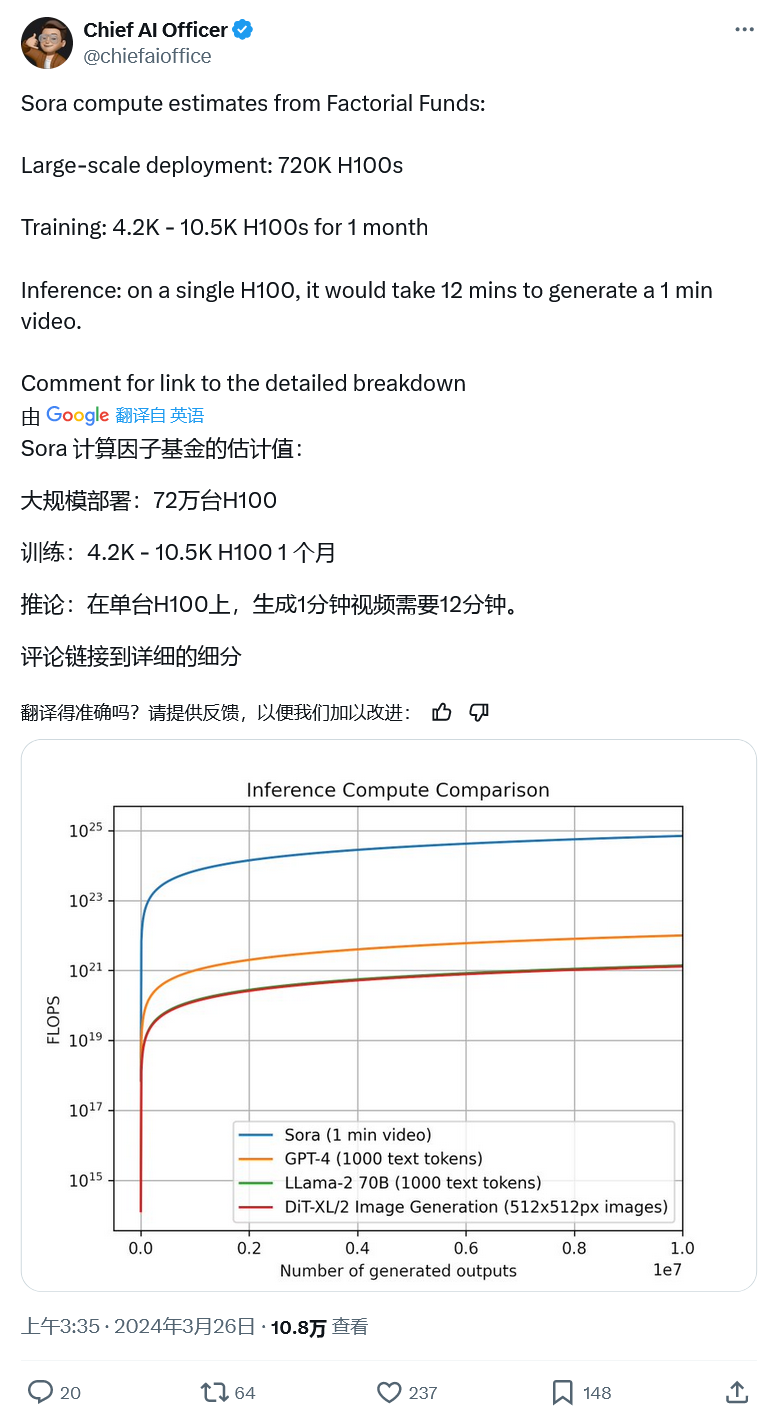

Research firm estimates OpenAI will need 720,000 Nvidia H100 chips to deploy its text-to-video model Sora, worth $21.6 billion

Market research firm Factorial Funds recently released a report estimating that OpenAI would need 720,000 Nvidia H100 AI accelerator cards at peak times to deploy its text-to-video model Sora. At $30,000 per card, 720,000 cards would cost $21.6 billion (currently about RMB 156.168 billion). And that is only the cost of the H100 cards themselves, which also consume a great deal of electricity to run. Each H100 consumes 700 W of power…
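The headline cost follows directly from the per-card figures quoted above. The power line is not from the report: it simply multiplies the stated 700 W per card by the card count, assuming every card runs at full draw at the same time and ignoring cooling and host overhead.

$$
\begin{aligned}
720{,}000 \times \$30{,}000 &= \$21{,}600{,}000{,}000 = \$21.6\ \text{billion} \\
720{,}000 \times 700\ \text{W} &= 504{,}000{,}000\ \text{W} = 504\ \text{MW}
\end{aligned}
$$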

-

The head of the Sora team said Sora is "not a product yet" and will not be available to the public in the short term

Recently, the YouTube channel WVFRM Podcast interviewed core team members of OpenAI's video model Sora: Bill Peebles, Tim Brooks and Aditya Ramesh, all of whom lead the Sora project. Asked when Sora will be available to users, they said Sora is still in the feedback-gathering stage: "It is not a product yet, and it will not be released to the public in the short term...

-

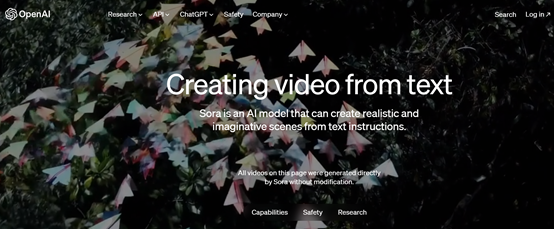

Big news! OpenAI releases Sora, a text-to-video model that can generate up to 1 minute of video at a time!

In the early morning of February 16, OpenAI released its innovative text-to-video model, Sora, on its official website. Judging from the Sora-generated videos OpenAI showcased there, it performs very well in video quality, resolution, fidelity to the text prompt, motion consistency, controllability, detail, color, and more. In particular, it can generate videos up to 1 minute long, surpassing mainstream products such as Gen-2, SVD-XT and Pika, and making a blockbuster debut. On September 21, 2023, OpenAI released the text-to-image model DALL-E 3, together with the current Sor...
