
Huawei Pangu model unveiled

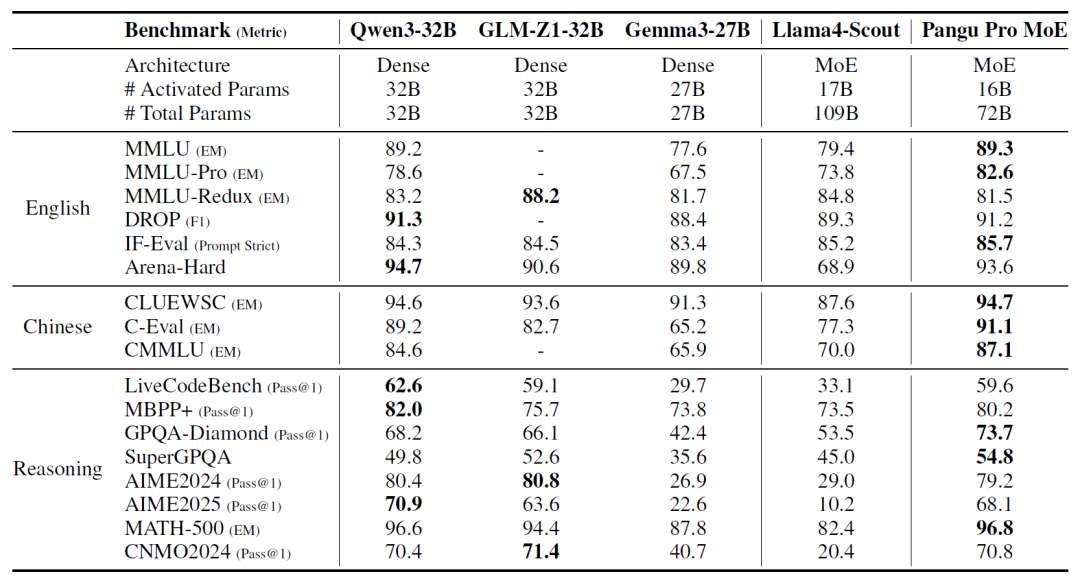

A few days ago, Huawei's Pangu team officially announced Pangu Pro MoE, a Mixture of Grouped Experts model native to Ascend. According to the Pangu team, the Mixture of Experts (MoE) architecture is emerging in large language models (LLMs) as a way to support larger parameter counts at lower computational cost, resulting in greater expressive power. This advantage stems from its sparse activation mechanism: each input token activates only a portion of the parameters to complete the computation. However, in real-world deployments, the activation frequency of different experts is seriously imbalanced, with a portion of experts being overcalled...
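The sparse activation and uneven expert usage described above can be illustrated with a small sketch. This is not Pangu Pro MoE's routing code; it is a generic top-k router in plain Python, and every name in it (`top_k_route`, `simulate_expert_load`, the random `bias` standing in for a learned router) is a hypothetical chosen for illustration.

```python
import random
from collections import Counter


def top_k_route(scores, k):
    """Return the indices of the k highest-scoring experts for one token."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


def simulate_expert_load(num_tokens=1000, num_experts=8, k=2, seed=0):
    """Count how often each expert is activated under plain top-k routing."""
    rng = random.Random(seed)
    # Assumption: a fixed per-expert bias mimics a router that favours
    # some experts; a real router would compute scores from the token.
    bias = [rng.gauss(0.0, 1.0) for _ in range(num_experts)]
    load = Counter({e: 0 for e in range(num_experts)})
    for _ in range(num_tokens):
        scores = [b + rng.gauss(0.0, 1.0) for b in bias]
        # Sparse activation: only k of num_experts experts run per token.
        for e in top_k_route(scores, k):
            load[e] += 1
    return load


load = simulate_expert_load()
for expert, count in sorted(load.items()):
    print(f"expert {expert}: {count} activations")
```

Each token touches only `k` of the `num_experts` experts, which is where the compute saving comes from. The printed counts show how unevenly those activations can be spread when nothing constrains the router.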
