A few days ago, Huawei's Pangu team officially announced Pangu Pro MoE, an Ascend-native Mixture of Grouped Experts model. According to the Pangu team:

Mixture-of-Experts (MoE) models are emerging as an architecture for large language models (LLMs) that can support a much larger parameter count at a lower computational cost, which yields greater expressive power. This advantage comes from their sparse activation mechanism: each input token activates only a subset of the parameters to complete its computation. In practical deployments, however, the activation frequency of different experts is severely imbalanced. Some experts are invoked far too often while others sit idle for long periods, which makes the system inefficient.
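The imbalance problem can be illustrated with a minimal sketch, assuming a plain global top-k router and a hypothetical router that systematically favours low-index experts. The expert count, `k`, the bias, and the helper names (`topk_route`, `expert_load`, `imbalance`) are illustrative assumptions, not Pangu's configuration or code.

```python
import random

def topk_route(scores, k):
    """Indices of the k highest-scoring experts for one token (plain global top-k)."""
    return sorted(range(len(scores)), key=lambda e: scores[e], reverse=True)[:k]

def expert_load(all_scores, k):
    """Count how many tokens activate each expert."""
    load = [0] * len(all_scores[0])
    for scores in all_scores:
        for e in topk_route(scores, k):
            load[e] += 1
    return load

def imbalance(load):
    """Max load divided by mean load; 1.0 means perfectly balanced."""
    mean = sum(load) / len(load)
    return max(load) / mean

rng = random.Random(0)
num_experts, k, num_tokens = 16, 2, 1000
# Hypothetical skewed router: lower-index experts get systematically higher scores.
bias = [1.0 - e / num_experts for e in range(num_experts)]
all_scores = [[bias[e] + rng.gauss(0, 0.3) for e in range(num_experts)]
              for _ in range(num_tokens)]
load = expert_load(all_scores, k)
print("per-expert load:", load)
print(f"imbalance (max/mean): {imbalance(load):.2f}")
```

Each token still uses only `k` of the 16 experts (sparse activation), but the most popular experts end up handling well above the average load; in a multi-device deployment, the device hosting them becomes the bottleneck while others wait.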

To address this, the Pangu team proposes a new Mixture of Grouped Experts (MoGE) architecture, which partitions experts into groups during expert selection and constrains each token to activate an equal number of experts in every group. This balances the load across experts and significantly improves the model's deployment efficiency on the Ascend platform.
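The grouping constraint can be sketched as follows, assuming contiguous equal-size groups (for example, one group per device) and a hypothetical router biased toward low-index experts. The group count, `k_per_group`, and the function names are illustrative assumptions rather than Pangu Pro MoE's actual settings, and details such as score normalization and shared experts are omitted.

```python
import random

def grouped_topk_route(scores, num_groups, k_per_group):
    """Grouped routing sketch: split experts into equal contiguous groups and take
    the top-k_per_group experts inside each group, so every token activates the
    same number of experts in every group."""
    num_experts = len(scores)
    if num_experts % num_groups != 0:
        raise ValueError("experts must divide evenly into groups")
    group_size = num_experts // num_groups
    chosen = []
    for g in range(num_groups):
        group = range(g * group_size, (g + 1) * group_size)
        chosen.extend(sorted(group, key=lambda e: scores[e], reverse=True)[:k_per_group])
    return chosen

def group_load(all_scores, num_groups, k_per_group):
    """Activations per group (e.g. per device, if each group is placed on one device)."""
    group_size = len(all_scores[0]) // num_groups
    load = [0] * num_groups
    for scores in all_scores:
        for e in grouped_topk_route(scores, num_groups, k_per_group):
            load[e // group_size] += 1
    return load

rng = random.Random(0)
num_experts, num_groups, k_per_group, num_tokens = 16, 4, 1, 1000
# Hypothetical skewed router: lower-index experts get systematically higher scores.
bias = [1.0 - e / num_experts for e in range(num_experts)]
all_scores = [[bias[e] + rng.gauss(0, 0.3) for e in range(num_experts)]
              for _ in range(num_tokens)]
print("per-group load:", group_load(all_scores, num_groups, k_per_group))
# prints "per-group load: [1000, 1000, 1000, 1000]" regardless of the router's skew
```

Each token still activates `num_groups * k_per_group = 4` experts in total, but because the constraint is enforced per group, every group receives exactly `num_tokens * k_per_group` activations no matter how skewed the router is. The guarantee holds across groups; experts inside a single group can still be used unevenly.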

Pangu Pro MoE is reportedly built on the MoGE architecture, with 72 billion total parameters and 16 billion active parameters, and the team has optimized the system for the Ascend 300I Duo and Ascend 800I A2 platforms.
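Taking the reported parameter counts at face value, the share of parameters used per token works out as follows (a simple ratio that ignores how attention, embedding, and shared weights are counted):

$$
\frac{P_{\text{active}}}{P_{\text{total}}} = \frac{16 \times 10^{9}}{72 \times 10^{9}} = \frac{2}{9} \approx 22\%
$$

So each token touches a little over a fifth of the model's weights.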

In terms of performance, Pangu Pro MoE reaches an inference throughput of 1148 tokens/s per card on the Ascend 800I A2, which speculative acceleration and other techniques raise further to 1528 tokens/s, significantly outperforming dense 32-billion- and 72-billion-parameter models of comparable scale. On the Ascend 300I Duo inference server, the Pangu team also delivers a highly cost-effective inference solution.
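Using the two reported per-card throughput figures on the Ascend 800I A2, the gain from speculative acceleration and the related techniques is roughly:

$$
\frac{1528\ \text{tokens/s}}{1148\ \text{tokens/s}} \approx 1.33
$$

That is about a 33% increase in per-card throughput.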

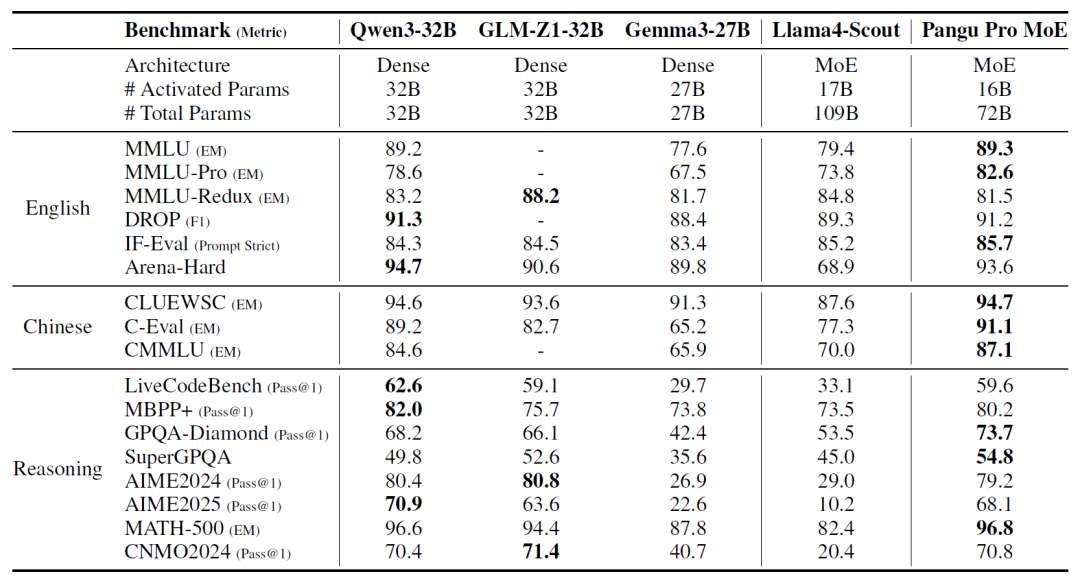

The team's research shows that Ascend NPUs can support large-scale parallel training of Pangu Pro MoE. Across multiple public benchmarks, Pangu Pro MoE leads among models with fewer than 100 billion total parameters.

In the official comparison table, Pangu Pro MoE is compared against Qwen3-32B, GLM-Z1-32B, Gemma3-27B, and Llama4-Scout, and it outperforms them in several areas, including Chinese, English, and reasoning tasks.

Model Technical Report (Chinese): https://gitcode.com/ascend-tribe/pangu-pro-moe/blob/main/README.md

Model Technical Report (English): https://arxiv.org/abs/2505.21411