
Microsoft's open-source multimodal model LLaVA-1.5 is comparable to GPT-4V

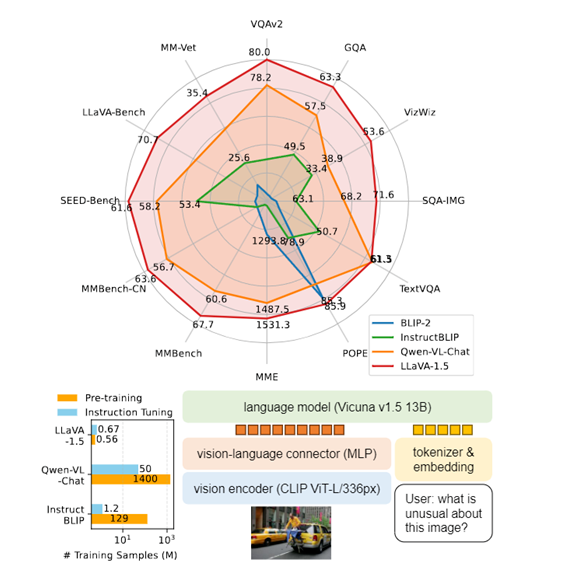

Microsoft has open-sourced the multimodal model LLaVA-1.5, which inherits the LLaVA architecture and adds new features. Researchers tested it on visual question answering, natural language processing, image generation, and other tasks. They found that LLaVA-1.5 achieves the best results among open-source models, with performance comparable to GPT-4V. The model has three parts: a vision model, a large language model, and a vision-language connector. The vision model is the pre-trained CLIP ViT-L/336px. CLIP encoding turns the image into a fixed-length vector representation, which improves how image semantics are represented. Compared with the previous version…
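To make the three-part design concrete, here is a minimal, dependency-free sketch of how a vision-language connector could link the two models. It is not LLaVA-1.5's actual code. Several assumptions apply: the CLIP ViT-L/336px encoder is stubbed out with random per-patch feature vectors; the connector is assumed to be a small two-layer MLP with a GELU activation; and the dimensions are toy-sized. The names `Connector` and `build_llm_input` are hypothetical. The connector projects each visual feature into the language model's embedding size so that the projected "visual tokens" can be placed in front of the text token embeddings.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def gelu(x: float) -> float:
    # tanh approximation of the GELU activation
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def linear(x: Vector, w: Matrix, b: Vector) -> Vector:
    # y = W x + b, where W has shape (out_dim, in_dim)
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    # small random weights stand in for trained parameters
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


class Connector:
    """Hypothetical two-layer MLP mapping vision features to the LLM embedding size."""

    def __init__(self, vision_dim: int, text_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w1 = random_matrix(text_dim, vision_dim, rng)
        self.b1 = [0.0] * text_dim
        self.w2 = random_matrix(text_dim, text_dim, rng)
        self.b2 = [0.0] * text_dim

    def __call__(self, feature: Vector) -> Vector:
        hidden = [gelu(h) for h in linear(feature, self.w1, self.b1)]
        return linear(hidden, self.w2, self.b2)


def build_llm_input(patch_features: List[Vector], text_embeddings: List[Vector],
                    connector: Connector) -> List[Vector]:
    # Project every image patch into a "visual token", then prepend the
    # visual tokens to the text token embeddings fed to the language model.
    visual_tokens = [connector(f) for f in patch_features]
    return visual_tokens + text_embeddings


# Usage: 3 stubbed image patches (8-dim) and 2 text tokens (4-dim).
connector = Connector(vision_dim=8, text_dim=4)
rng = random.Random(1)
patches = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(3)]
text = [[0.0] * 4 for _ in range(2)]
seq = build_llm_input(patches, text, connector)
print(len(seq), len(seq[0]))  # 5 4
```

The design point the sketch illustrates is that the language model never sees raw pixels. It sees a sequence of vectors in its own embedding space, and the connector is the trainable piece that translates between the two representation spaces.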
