
ByteDance's Doubao team proposes UltraMem, a new sparse model architecture that reduces inference cost by up to 83% compared with MoE.

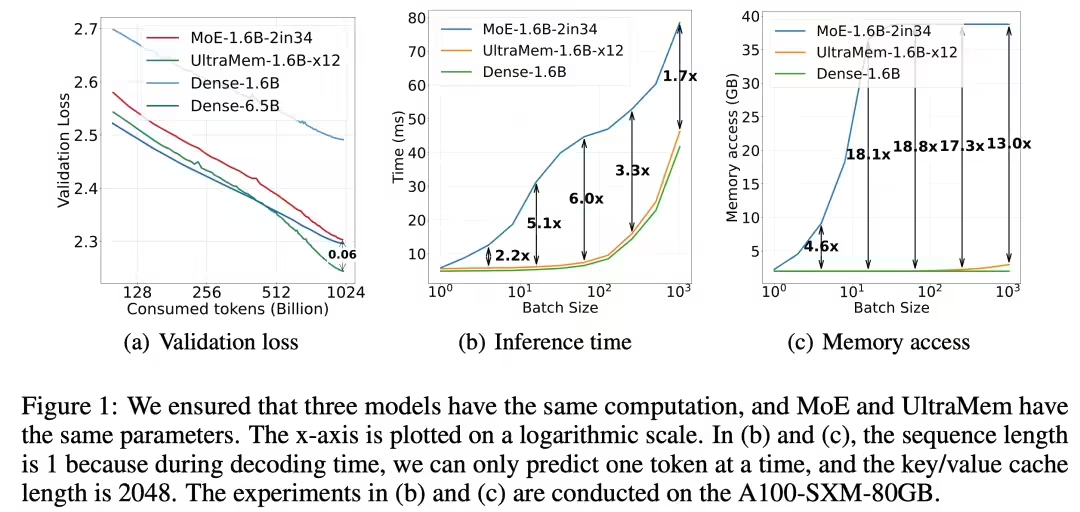

February 12, 2025 - ByteDance's Doubao large model team announced today that it has proposed a new sparse model architecture, UltraMem, which effectively addresses the high memory-access overhead of MoE inference. Compared with the MoE architecture, UltraMem improves inference speed by 2-6 times and reduces inference cost by up to 83%. The study also reveals the scaling law of the new architecture, demonstrating that it not only has excellent scaling characteristics but also outperforms MoE. Experimental results show that the training scale of 20 million value...
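The two headline figures appear consistent with each other under one simple assumption: if inference cost is taken to be proportional to inference time (same hardware, same workload), then a speedup of s reduces cost to 1/s of the MoE baseline. The sketch below, with a hypothetical function name `cost_reduction`, is only an illustrative consistency check and not the team's cost model. It shows that the upper end of the reported 2-6x range corresponds to the "up to 83%" figure.

```python
def cost_reduction(speedup: float) -> float:
    """Fractional cost saving for a given inference speedup.

    Hypothetical assumption: cost scales linearly with inference time,
    so new_cost / old_cost = 1 / speedup.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 - 1.0 / speedup


# Evaluate the two ends of the reported 2-6x speedup range.
for s in (2, 6):
    print(f"{s}x faster -> {cost_reduction(s):.0%} lower cost")
```

Running it prints a 50% saving at 2x and roughly 83% at 6x. Real deployments need not scale linearly with speed, so treat this only as a back-of-the-envelope check.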
