-

China's first open-source vertical large language model for general agriculture, “Sinong”

On January 14, Nanjing Agricultural University announced that last week, at the sub-forum "Technology for the transformation of the full dimension of agro-forestry education" of the 2025 annual conference of the Higher Agroforestry Education Branch of the Chinese Institute of Higher Education, the university officially launched the Sinong large language model. The model is the country's first open-source vertical large language model for general agriculture and the first agricultural large language model developed by Nanjing Agricultural University. Its release marks a new breakthrough in the university's research on and application of foundational AI models in agriculture. "Sinong..- 2.8k

-

OpenAI reportedly developing a new AI large language model: codenamed Garlic, said to outperform Google Gemini 3

On December 3, The Information reported that OpenAI is developing a new AI large language model, codenamed Garlic, in response to competition from Google's Gemini 3. According to the report, Mark Chen, OpenAI's Chief Research Officer, presented the new model to the team last week, and it reportedly outperformed Google Gemini 3.0 and Anthropic Opus 4.5 on programming and logical reasoning tasks. Open..- 4.2k

-

Hugging Face CEO responds to "AI bubble" talk: it's more like a large language model bubble

November 19: According to TechCrunch today, Hugging Face co-founder and CEO Clem Delangue argued at the Axios BFD event that there is no "AI bubble" but rather a "large language model bubble", and that this bubble may burst before long. Talk of a bubble now runs to hundreds of billions of dollars, but even if the bubble bursts, the future of AI is not threatened. According to Clem Delangue, currently..- 2.1k

-

The first Alpha Arena: Alibaba's Qwen3-Max wins with a 22.32% return, GPT-5 loses over 62%

On November 5, it was reported that US research institute Nof1 recently ran a live trading test: it gave each of six top AI large language models (LLMs) $10,000 in starting capital to trade in real markets. The first Alpha Arena has now officially closed, and Alibaba's Qwen3-Max took the lead at the end, winning the investment championship with a return of 22.32%. Qwen3-Max, DeepSeek v3.1, GPT-5,..- 3.3k
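
To make the headline figure concrete, here is a minimal sketch of how a percentage return translates into an ending account value. It assumes the return is measured against the $10,000 starting capital mentioned in the teaser; the function name `ending_value_usd` is hypothetical and is not part of Alpha Arena or Nof1.

```python
from decimal import Decimal


def ending_value_usd(starting_capital: Decimal, return_pct: Decimal) -> Decimal:
    """Ending account value for a given percentage return (assumed simple return)."""
    return starting_capital * (Decimal(1) + return_pct / Decimal(100))


# Starting capital and return rate as quoted in the teaser.
qwen3_max_final = ending_value_usd(Decimal("10000"), Decimal("22.32"))
print(qwen3_max_final)
```

Under that assumption, a 22.32% return on $10,000 corresponds to an ending balance of $12,232.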

-

Switzerland Joins Global AI Race with Apertus, a National Open Source Large Language Model

Sept. 3 (Bloomberg) -- There's a new player in the global AI race, and this time it's an entire country. Switzerland has officially released Apertus, a national open-source large language model (LLM) that it hopes will become an alternative to models offered by companies like OpenAI. Apertus, which comes from the Latin word for "open," was jointly developed by the Swiss Federal Institute of Technology in Lausanne (EPFL), the Swiss Federal Institute of Technology in Zurich (ETH Zurich), and the Swiss National Supercomputing Center (CSCS), all of which are public organizations. "Currently, the Aper...- 2.9k

-

Yupp Platform Launches: Invites Users to Use Industry's Major AI Models at Low Cost, Collects Feedback to Build Leaderboards

June 23: AI startup Yupp has officially launched a "human evaluation system", inviting users to score more than 500 AI models in the industry, such as ChatGPT, Claude, Gemini, DeepSeek, Grok, and Llama. Yupp will build a set of leaderboards called Yupp AI VIBE (Vibe Intelligence Benchmark) based on user feedback data to visually display the level of different models. It is reported that...- 2.3k

-

Scientists at the Chinese Academy of Sciences prove for the first time that a large language model can "understand" things as well as humans do

June 11: 1AI learned from the official WeChat account of the Institute of Automation of the Chinese Academy of Sciences that a joint team from the Institute's Neural Computing and Brain-Computer Interaction (NeuBCI) group and the Center of Excellence for Innovation in Brain Science and Intelligent Technology of the Chinese Academy of Sciences recently combined behavioral experiments and neuroimaging analysis to confirm for the first time that multimodal large language models (MLLMs) can spontaneously form object concept representation systems highly similar to those of humans. This research not only opens up a new path for AI cognitive science, but also provides a theoretical framework for building AI systems with human-like cognitive structures. The research results are based on Human-...- 1.2k

-

CellFM, the world's largest single-cell foundation model, released: based on domestic supercomputing, developed by Sun Yat-sen University, Huawei, etc.

May 22: Single-cell large language models provide a new paradigm for unraveling the mysteries of life and disease mechanisms by decoding the "molecular language" of cells. However, existing models are limited by data size and computing-power bottlenecks, which makes a qualitative leap in performance difficult. To address this challenge, Professor Yang Yuedong's team at Sun Yat-sen University, together with Chongqing University, Huawei, and XinGeYuan Biotechnology, has developed the world's largest single-cell foundation model, CellFM, using the computing power of the "Tianhe Xingyi" supercomputing system at the National Supercomputing Center Guangzhou and a domestically made intelligent computing chip. The model innovatively integrates more than 100 million...- 8.5k

-

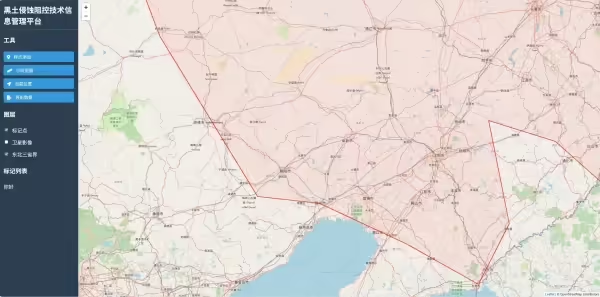

The nation's first smart configuration platform for black soil erosion control technology goes online for trial operation

April 28: According to a Jilin Daily report citing the Northeast Institute of Geography and Agroecology of the Chinese Academy of Sciences, the "Wisdom Protection of Black Soil" platform recently went online for trial operation. It was developed jointly by the Northeast Institute of Geography and Agroecology, which led the work, together with Northwest University of Agriculture and Forestry, the Nanjing Soil Research Institute of the Chinese Academy of Sciences, the Institute of Agricultural Resources and Agricultural Planning of the Chinese Academy of Agricultural Sciences, Jilin Agricultural University, the Jilin Academy of Soil and Water Conservation, and others. It is the nation's first large language model-driven smart configuration platform for black soil erosion prevention and control technology. According to reports, the platform is based on the massive black soil erosion retreat...- 2.1k

-

Turing Award Winner Yann LeCun: Development of Large Language Models is Approaching a Bottleneck, AI Cannot Achieve Human-Level Intelligence by Relying on Text Training Only

Yann LeCun, Turing Award winner and Meta's chief AI scientist, was a guest on the "Big Technology Podcast" episode that aired on the 20th, where he discussed why current generative AI struggles to make scientific discoveries and how AI will develop in the future. He said that existing AI technologies, such as large language models, are essentially trained on text and generate answers from statistical patterns; they cannot "create new things", so they have limitations. Human beings are able to use common sense and mental models to think and solve new problems, and this...- 4.6k

-

Hon Hai's First Large Language Model FoxBrain Released: Reasoning Capabilities, Future Plans for Partial Open Source

March 10: According to Reuters, Hon Hai today announced the launch of its first large language model, "FoxBrain", and plans to use the technology to optimize manufacturing and supply chain management. Hon Hai said in a statement that FoxBrain was trained on 120 NVIDIA H100 GPUs, with a training cycle of about four weeks. Hon Hai assembles Apple's iPhone and makes NVIDIA's AI servers, and is the world's largest contract electronics manufacturer. The model is based on Meta's Llama 3.1 architecture and has been specifically optimized to...- 4.1k

-

30% Faster Time to Market: SemiKong, the Industry's First Dedicated Large Language Model for the Semiconductor Industry, Released

December 29: SemiKong, the world's first AI tool built specifically to meet the needs of the semiconductor industry, has been released. It is a large language model (LLM) developed by Aitomatic and its "AI Alliance" partners. SemiKong is designed to be integrated into the workflow of semiconductor design companies, acting as a "digital expert" in the field to dramatically accelerate time-to-market for new chips. According to Aitomatic, the semiconductor industry is facing a significant loss of expertise. As more and more...- 2.4k

-

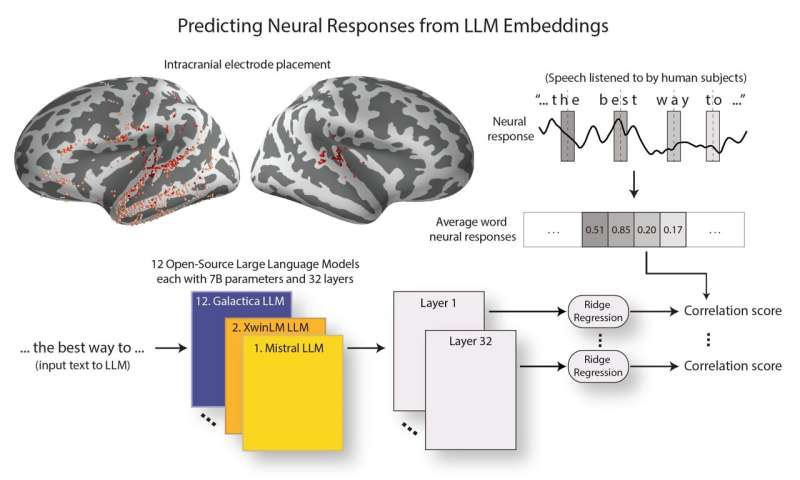

Columbia University Study: Large Language Models Are Becoming More Like the Human Brain

Dec. 20: Large language models (LLMs), represented by ChatGPT and others, have become increasingly adept at processing and generating human language over the past few years, but the extent to which these models mimic the neural processes of the human brain that support language processing remains to be further elucidated. As Tech Xplore reported on the 18th, a team of researchers from Columbia University and the Feinstein Institute for Medical Research recently conducted a study that explored the similarities between LLMs and neural responses in the brain. The study showed that as LLM technology advances, these models not only perform...- 4.1k

-

Salesforce CEO: Large Language Models May Be Approaching Technical Ceiling, AI's Future Is Agents

As Business Insider reported today, Salesforce CEO Marc Benioff recently said on the Future of Everything podcast that he believes the future of AI lies with autonomous agents (commonly known as "AI agents") rather than the large language models (LLMs) currently used to train chatbots like ChatGPT. "In fact, we may be approaching the technological upper limit of LLMs." Benioff mentioned that in recent years...- 3.2k

-

Honor MagicOS 9.0 Upgrade Supports 3-Billion-Parameter On-Device Large Language Model: Power Consumption Drops by 80%, Memory Usage Reduced by 1.6GB

October 23: Honor today officially released MagicOS 9.0, billed as "the industry's first personalized full-scenario AI operating system equipped with an agent". In MagicOS 9.0, the new Magic large model family has been upgraded to support flexible deployment across device and cloud resources and across different devices. The versions are as follows: a 5-million-parameter image model, deployed on device, supported across the full range; a 40-million-parameter image model, deployed on device, for the high-end series; a 3-billion-parameter large language model, deployed on device, for the high-end series; ...- 7.4k

-

JetBrains Builds Mellum, the Most Powerful AI Assistant for Developers: Built for Programming, Low Latency, Fast Completion, High Accuracy

JetBrains published a blog post yesterday (October 22) announcing Mellum, a new large language model designed specifically to give software developers faster, smarter, and more context-aware code completion. According to JetBrains, Mellum's biggest highlight compared with other large language models is that it is designed specifically for programming, with low latency, high performance, and comprehensive functionality, so that it can give developers relevant suggestions in the shortest possible time. Mellum supports Java, Kotlin, Python, Go and P...- 6.8k

-

MediaTek's next-generation Dimensity flagship chip optimized for Google Gemini Nano multimodal AI with support for image and audio processing

October 8: MediaTek today announced that its new-generation Dimensity flagship chip is optimized for Google's large language model, Gemini Nano. According to MediaTek, the upcoming chip is optimized for Google's Gemini Nano multimodal AI, supporting image and audio processing in addition to text. The new-generation Dimensity flagship chip is equipped with the eighth-generation MediaTek AI processor (NPU), which supports multimodal hardware acceleration for text, image, and voice. The chip will be launched on October 9 at 10:30...- 3.3k

-

SF Releases "Feng Language" Large Language Model: Summary Accuracy Exceeds 95%, Claims Logistics Vertical Domain Capability Beyond General-Purpose Models

SF Technology yesterday released "Feng Language", a large language model for the logistics vertical domain, at the Shenzhen International Artificial Intelligence Exhibition. Jiang Shengpei, technical director for large models at SF Technology, said that SF developed the vertical-domain large language model in-house to balance effectiveness against cost of use. About 20% of Feng Language's training data is vertical-domain data on logistics and supply chains from SF and the wider industry. At present, the accuracy of summaries generated by the model has exceeded 95%, and the average time customer service staff spend on follow-up processing after a conversation with a customer has been reduced by 30%; the accuracy of locating couriers' problems has exceeded ...- 7.3k

-

Ali Tongyi Qianwen announced the launch of the new domain name "tongyi.ai", and added a deep search function to the web version of the chat

Alibaba's large language model "Tongyi Qianwen" announced the launch of a new domain name, "tongyi.ai", along with a number of new features, summarized as follows. Deep search in the web version of the chat: supports more content source indexes, returns more in-depth, professional, and structured results, and shows the source web page when hovering over a numbered citation mark. Multi-size images for the App's image micro-motion effects: open the Tongyi App channel page, select "Image Micro-motion Effects", and upload a picture to generate sound effects and micro-motion video effects that match it. 3:4 aspect ratio for the App's custom singing and acting (originally 1:1): audio...- 4.7k

-

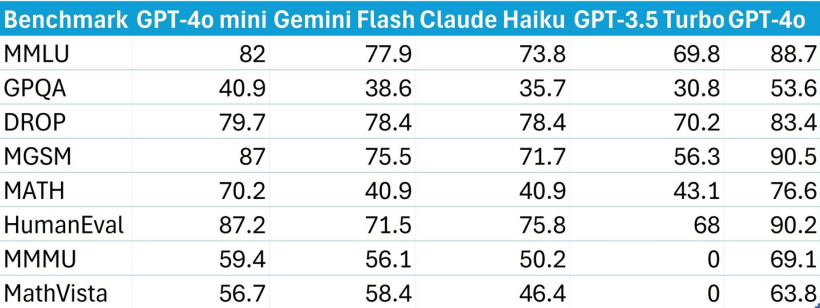

Will the AI large language model price war begin? Google lowers Gemini 1.5 Flash fees this month: the maximum reduction is 78.6%

Is the price war for large language models coming? Google has updated its pricing page, announcing that starting from August 12, 2024, the Gemini 1.5 Flash model will cost $0.075 per million input tokens and $0.3 per million output tokens (currently about RMB 2.2). This makes the cost of using the Gemini 1.5 Flash model nearly 50% cheaper than OpenAI's GPT-4o mini. According to calculations, Gemini …- 10k
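
As a rough illustration of the per-token pricing quoted above, the following sketch estimates the cost of a workload from the two stated rates ($0.075 per million input tokens, $0.3 per million output tokens). The function name `estimate_cost_usd` and the sample token counts are hypothetical; this is plain arithmetic under those assumptions, not a Google API.

```python
from decimal import Decimal

# Rates as quoted in the teaser for Gemini 1.5 Flash (USD per million tokens).
INPUT_USD_PER_MILLION = Decimal("0.075")
OUTPUT_USD_PER_MILLION = Decimal("0.3")
TOKENS_PER_MILLION = Decimal(1_000_000)


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the USD cost of a workload from its input and output token counts."""
    input_cost = INPUT_USD_PER_MILLION * input_tokens / TOKENS_PER_MILLION
    output_cost = OUTPUT_USD_PER_MILLION * output_tokens / TOKENS_PER_MILLION
    return input_cost + output_cost


# Hypothetical workload: 2 million input tokens and 500,000 output tokens.
print(estimate_cost_usd(2_000_000, 500_000))
```

`Decimal` is used instead of floats so that small currency amounts are not distorted by binary rounding.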

-

OpenAI CEO admits that the alphanumeric naming of "GPT-4o mini" is problematic

As OpenAI launches its next-generation GPT large language model, GPT-4o mini, CEO Sam Altman has finally acknowledged the problem with its product naming. GPT-4o mini is advertised as more cost-effective than the non-mini version, and as particularly suitable for companies developing their own chatbots. However, the complex and cumbersome naming has sparked widespread criticism. This time, Altman responded to the criticism, admitting that the combination of numbers and letters…- 6.5k

-

Cohere and Fujitsu launch Japanese large language model "Takane" to improve enterprise efficiency

Canadian enterprise AI startup Cohere and Japanese information technology giant Fujitsu recently announced a strategic partnership to jointly launch a Japanese large language model (LLM) called "Takane". The partnership aims to provide enterprises with powerful Japanese language model solutions to enhance the user experience of customers and employees. According to the statement, Fujitsu has made a "significant investment" in this collaboration. Cohere will use the advantages of its AI model, and Fujitsu will use its Japanese training and tuning technology...- 4.5k

-

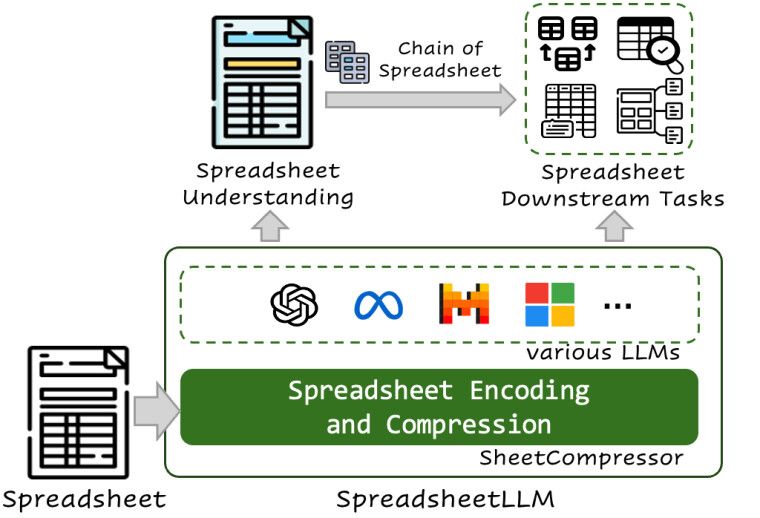

Microsoft develops new AI model for Excel and other applications: performance is 25.6% higher than conventional solutions, and token usage cost is reduced by 96%

According to a recent research paper published by Microsoft, it plans to develop a new AI large language model, SpreadsheetLLM, for spreadsheet applications such as Excel and Google Sheets. Researchers said that existing spreadsheet applications have rich functions and give users a large number of layout and format options, so traditional AI large language models struggle with spreadsheet processing scenarios. SpreadsheetLLM is an AI model designed specifically for spreadsheet applications. Microsoft has also developed SheetCom…- 9.1k

-

SenseTime's large language model application SenseChat is now open to Hong Kong users for free and supports Cantonese chat

SenseTime announced that its SenseChat mobile app and web version are now free for Hong Kong users. The service has already been launched in mainland China. SenseChat is based on the "Shangliang Multimodal Large Model Cantonese Version" launched by SenseTime in May this year. Relying on SenseTime's "daily update" language and multimodal capabilities, as well as its understanding of Cantonese, local culture, and hot topics, users can chat with it directly in the Cantonese they know best, entering text or voice to ask questions, search for things, generate pictures, write copy, and more. IT Home attached an example as follows: Hong Kong Apple iPhone uses...- 12.6k
