-

Former Google CEO: AI Models Could Be Hacked Into "Learning to Kill"

On October 11, according to CNBC, former Google CEO Eric Schmidt warned about the potential risks of artificial intelligence at the Sifted Summit held earlier. He said AI models could be hacked or even manipulated into learning dangerous skills. There is evidence that both open-source and closed-source models can be hacked to have their safeguards removed. During training, models learn a great deal, and one bad example is learning how to kill someone. He stressed that all the major companies now...

-

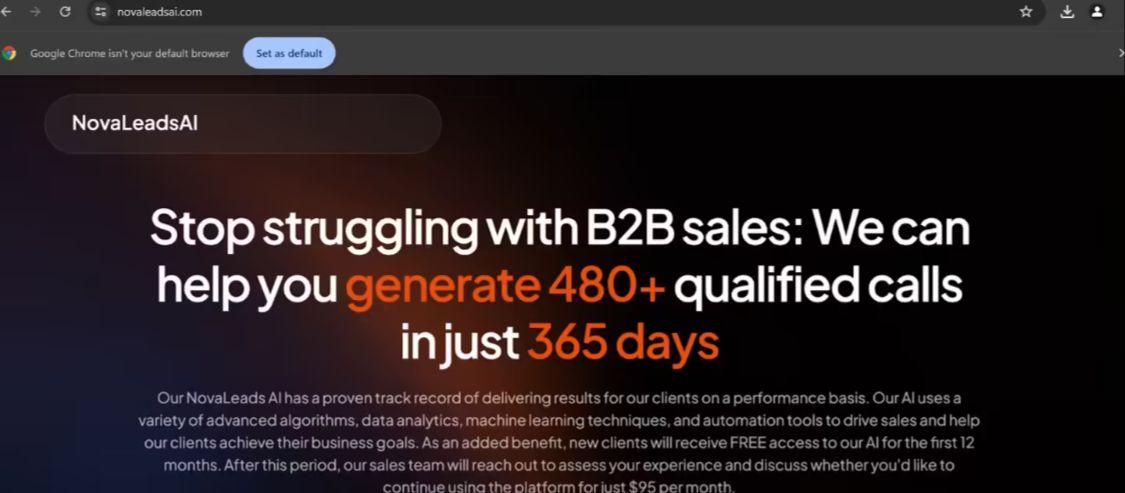

Security Firm Exposes Hackers Setting Up Copycat AI Tool Sites to Spread Ransomware Trojans, Impersonating ChatGPT, InVideo AI and Other Platforms

Security firm Cisco Talos issued an advisory on June 7 revealing that some hackers have set up copycat AI tool websites to spread ransomware Trojans such as CyberLock, Lucky_Gh0$t and Numero. The hackers are currently known to have impersonated platforms including Novaleads, ChatGPT and InVideo AI. According to the report cited by 1AI, take the copycat Novaleads platform as an example: although the real Novaleads company's main business is "customer relationship management" software, it did not...

-

AI Agents Can Go Head-to-Head With Human Hackers, and in Some Cases Even Win

On June 2, according to a report by the foreign outlet The Decoder, a series of cybersecurity competitions recently held by Palisade Research shows that AI agents can compete head-to-head with human hackers and, on some occasions, even win. The research team tested AI systems in two large-scale "capture the flag" (CTF) competitions with thousands of participants. In these competitions, teams must solve security challenges, such as breaking encryption and identifying vulnerabilities, to find hidden "flags." The purpose of the test is to examine...

-

Cybercrime's "Intelligent Accomplices": AI Agents Such as OpenAI Operator Become a New Weapon for Hackers

On March 15, 1AI reported that cybersecurity company Symantec published a blog post on March 13 pointing out that AI agents (such as OpenAI's Operator) have broken through the functional limits of traditional tools and can help hackers launch phishing attacks and build attack infrastructure. The researchers emphasized that such AI tools are shifting from "passive assistance" to "active execution," becoming a new threat to cybersecurity. Symantec found that AI agents can carry out complex attack chains, including intelligence gathering, writing malicious code, and...

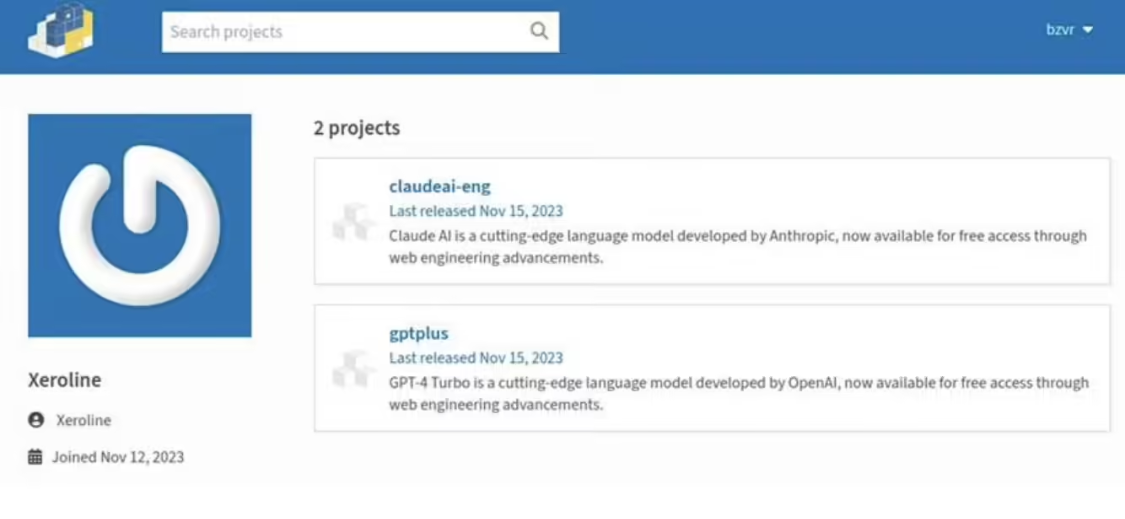

Security Firm Reveals Fake GPT / Claude AI Helper Packages on PyPI Are Actually Trojan Malware

Security firm Kaspersky has issued an advisory stating that it discovered two malicious packages on PyPI that masquerade as helper tools for the GPT and Claude AI platforms but actually contain Trojan malware. 1AI has learned that the two packages are named "gptplus" and "claudeai-eng". Of these, "gptplus" claims to provide access to the GPT-4 Turbo model through OpenAI's API, while "claudeai-eng" claims to provide access to the Anthropi ...

-

Study Finds Hackers Can Easily Manipulate AI Robots, Turning Them Into Deadly Weapons

Researchers at the University of Pennsylvania have found serious vulnerabilities in a range of AI-enhanced robotic systems that leave them open to being hacked and taken over. An IEEE Spectrum report cites alarming examples, such as hacked robot dogs turning flamethrowers on their owners, robots guiding bombs to the most destructive locations, and self-driving cars deliberately crashing into pedestrians. According to 1AI, researchers at Penn Engineering have dubbed the LLM-based robot attack technique they developed RoboPAIR. From three different robotics vendors...

-

OpenAI internal forum hacked, secrets stolen

According to a recent New York Times report, the internal forum of OpenAI, the well-known artificial intelligence company, was hacked. The hacker obtained details about the design of the company's AI technologies, although they did not break into the systems where OpenAI actually builds its AI. The incident did not expose any customer or partner information, but it raised concerns among employees about the company's security. OpenAI executives disclosed the incident to staff at an all-hands meeting in April 2023 but did not make it public, because no customer or partner information had been stolen. It is reported that some employees…

-

Security Company Warns Hackers Are Targeting User Accounts on Major AI Language Model Platforms to Resell API Balances and Obtain Private Information

Security company Sysdig recently released a report stating that a large number of hackers are targeting major large language model (LLM) platforms with "LLMjacking" attacks. The hackers steal user account credentials through a variety of methods, resell model API access to third parties, and extract private information from users' conversation histories for blackmail or public sale. Sysdig said that the hackers seem to "prefer" Anthropic's Claude v2/v3 platforms. So far, it has detected that the hackers mainly use credential stuffing and PHP frameworks...
