Yale University, together with researchers from the University of Cambridge and Dartmouth College, has launched MindLLM, a healthcare AI tool that converts functional magnetic resonance imaging (fMRI) data of the brain into text. It outperforms existing technologies such as UMBRAE, BrainChat, and UniBrain in multiple benchmark tests.

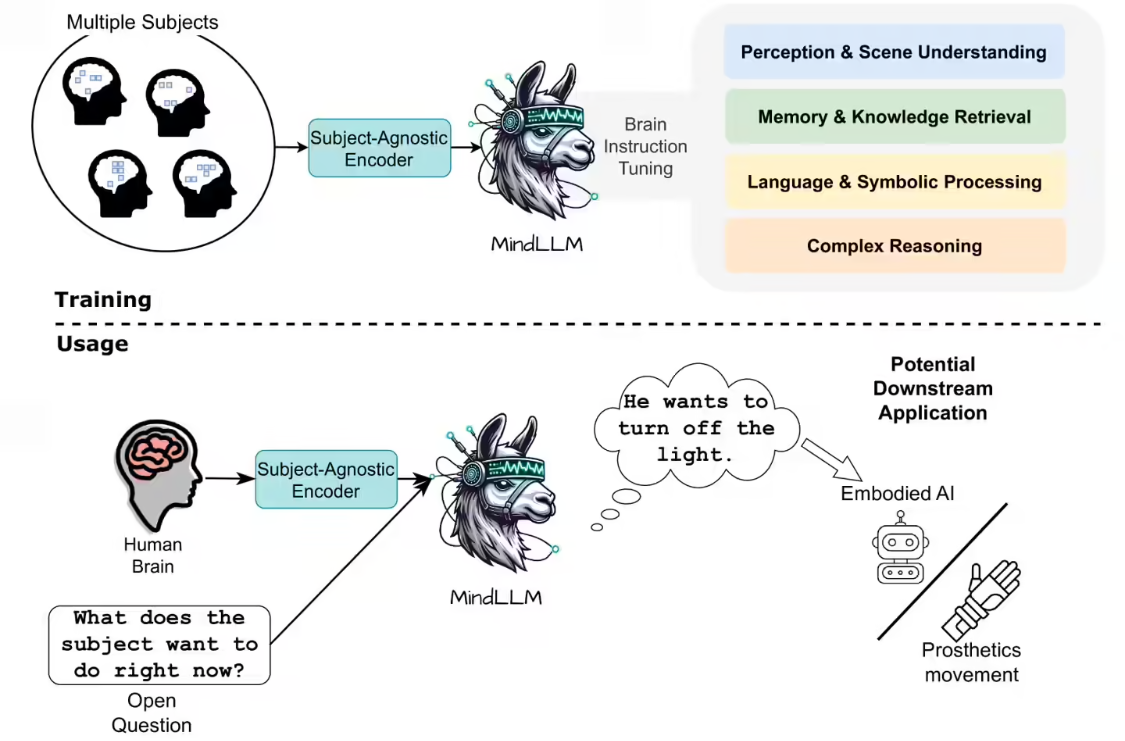

MindLLM is described as consisting of an fMRI encoder and a large language model. It analyzes voxels (three-dimensional pixels) in fMRI scans to interpret brain activity. The fMRI encoder uses a neuroscience-informed attention mechanism that adapts to input signals of different shapes, which allows it to support multiple analysis tasks.
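To illustrate how an attention mechanism can accept inputs of different shapes, here is a minimal NumPy sketch. It is not the MindLLM encoder: the function name `attention_pool`, the use of voxel coordinates as keys, and all dimensions are assumptions made for illustration. The idea shown is that a fixed set of query vectors attends over however many voxels a scan contains, so the output size stays the same across subjects.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention_pool(activations, coords, queries, w_key, w_value):
    """Hypothetical cross-attention pooling over a variable number of voxels.

    activations: (n_voxels,)      one signal value per voxel
    coords:      (n_voxels, 3)    voxel positions (assumed key source)
    queries:     (n_queries, d)   fixed query vectors, shared across subjects
    w_key:       (3, d)           projection from coordinates to keys
    w_value:     (1, d_out)       projection from activations to values
    Returns an array of shape (n_queries, d_out), independent of n_voxels.
    """
    keys = coords @ w_key                        # (n_voxels, d)
    values = activations[:, None] @ w_value      # (n_voxels, d_out)
    scores = queries @ keys.T / np.sqrt(keys.shape[1])  # (n_queries, n_voxels)
    weights = softmax(scores, axis=-1)           # each query's weights sum to 1
    return weights @ values                      # (n_queries, d_out)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    queries = rng.normal(size=(4, 8))
    w_key = rng.normal(size=(3, 8))
    w_value = rng.normal(size=(1, 16))

    # Two made-up "subjects" with different voxel counts.
    for n_voxels in (100, 250):
        out = attention_pool(
            rng.normal(size=n_voxels),
            rng.normal(size=(n_voxels, 3)),
            queries, w_key, w_value,
        )
        print(n_voxels, out.shape)  # shape is (4, 16) in both cases
```

Because the number of queries is fixed, downstream components always receive the same number of feature vectors regardless of how many voxels a given scan provides.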

The research team also introduced a Brain Instruction Tuning (BIT) method for the tool. BIT enhances the model's ability to extract a wide range of semantic information from fMRI signals, enabling it to perform a variety of decoding tasks, such as image captioning and question-answering reasoning.

Test results show that in benchmarks for text decoding, cross-subject generalization, and adaptation to new tasks, MindLLM improves on existing models by up to 12.0%, 16.4%, and 25.0%, respectively. This indicates that it outperforms earlier models at adapting to new subjects and handling unseen language reasoning tasks.

However, the researchers noted that the model can currently only analyze signals associated with static images. With further improvement, it is expected to develop into a real-time fMRI decoder and to be widely used in neural control, brain-computer interfaces, and cognitive neuroscience, with a positive impact on applications such as neural prostheses for restoring perception, mental state monitoring, and brain-computer interaction.

The corresponding paper is now available on arXiv.