March 14: Professor Zhai Jidong's team at Tsinghua University's Institute of High Performance Computing and the Tsinghua-affiliated startup Qingcheng Jizhi jointly announced today that the large model inference engine "Chitu" is now open source.

According to the announcement, the engine is the first to natively run FP8-precision models on GPUs other than NVIDIA's Hopper architecture and on various Chinese domestic chips, halving the cost and doubling the performance of DeepSeek inference. Positioned as a "production-grade large model inference engine", it offers the following features:

- Diverse hardware support: it supports NVIDIA products from the latest flagships to older series, and also provides optimized support for domestic chips.

- Full-scenario scalability: from CPU-only and single-GPU deployments to large-scale clusters, the Chitu engine provides scalable solutions.

- Long-term stable operation: it is suitable for real production environments and stable enough to carry concurrent business traffic.

According to the team, in tests on an A800 cluster deploying the full DeepSeek-R1-671B model, the newly open-sourced Chitu engine achieved a 3.15 times increase in inference speed while using 50% fewer GPUs, compared with some foreign open-source frameworks.
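To put the two reported figures together, here is a rough back-of-the-envelope sketch. It assumes that "3.15 times" means total inference speed is 3.15 times the baseline and that "50%" means half as many GPUs were used. The function name `per_gpu_speedup` is hypothetical and is not part of Chitu or any benchmark tooling.

```python
def per_gpu_speedup(speed_ratio: float, gpu_ratio: float) -> float:
    """Relative per-GPU throughput compared with the baseline.

    speed_ratio: total inference speed relative to baseline (assumed 3.15).
    gpu_ratio:   fraction of the baseline GPU count used (assumed 0.5).
    """
    if gpu_ratio <= 0:
        raise ValueError("gpu_ratio must be positive")
    return speed_ratio / gpu_ratio


# Figures taken from the announcement; their interpretation is an assumption.
reported_speed_ratio = 3.15
reported_gpu_ratio = 0.5

print(f"{per_gpu_speedup(reported_speed_ratio, reported_gpu_ratio):.2f}x per GPU")
# prints "6.30x per GPU"
```

Under these assumptions, each GPU would deliver roughly 6.3 times the baseline throughput. The announcement does not state this number, so treat it as an illustration rather than a measured result.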

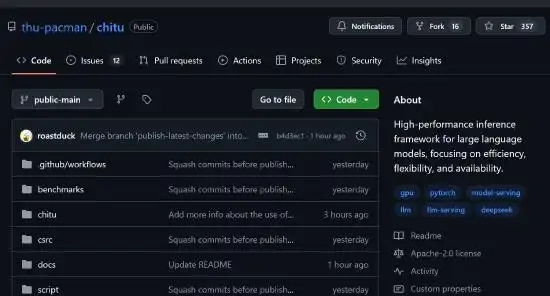

The open-source repository is available at: https://github.com/thu-pacman/chitu