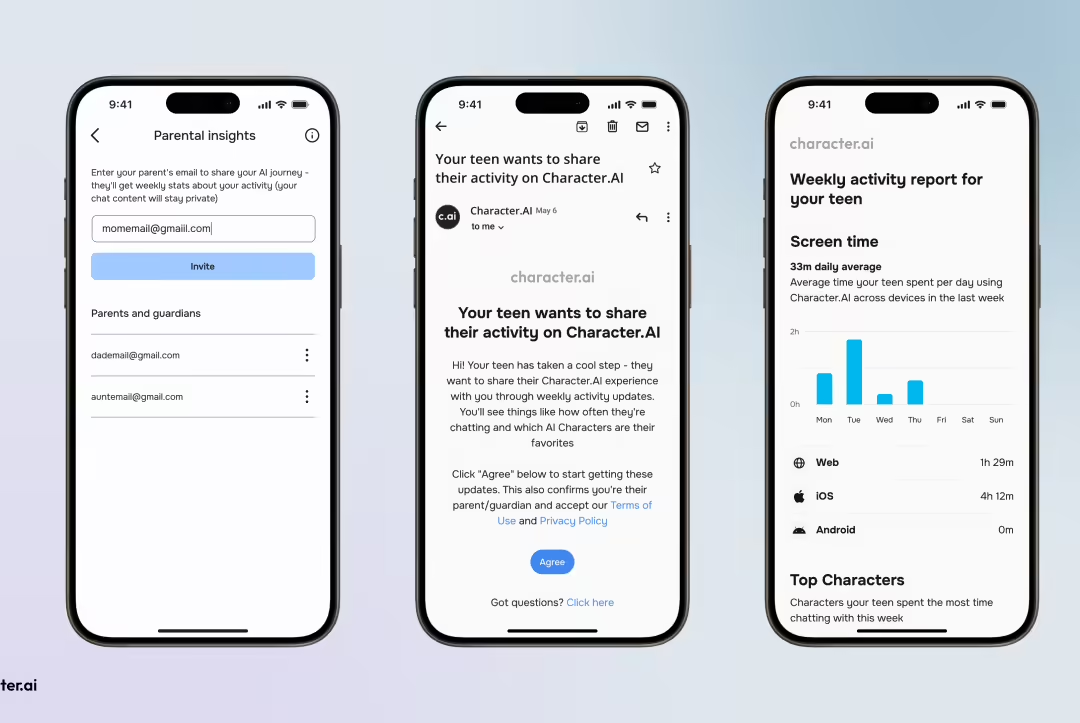

Chatbot platform Character.AI launched a new feature, Parental Insights, on March 26 that lets teen users send weekly reports on their chat usage to their parents.

According to the official announcement, the report includes the user's average daily time spent on web and mobile, along with the characters they interact with most often and the time spent chatting with each. The feature is intended to address concerns about minors' addiction to chatbots and exposure to inappropriate content.

The feature is optional, and parents are not required to register for an account. Minor users can enable it themselves in the Character.AI settings. The company emphasizes that the report only provides an overview of usage and won't contain full chat logs, so the content of conversations will not be shared.

Character.AI has been rolling out new features for underage users since last year, but at the same time the platform has been questioned and has even faced legal action over the content of its service. Character.AI, which allows users to create, customize, and publicly share chatbots, has become popular among teenagers. However, multiple lawsuits have accused some of the bots on the platform of providing inappropriately sexualized content and even spreading messages involving self-harm.

Character.AI says it has adjusted the system, among other things by moving users under the age of 18 to a model specifically trained to avoid "sensitive" content and by adding more visible reminders to the interface to emphasize that the bots are not real people.

As 1AI previously reported, Character.AI faced multiple lawsuits last year over its conduct toward teenage users, which allegedly caused "serious and irreparable harm." Multiple Character.AI chatbots engaged in conversations with minors about egregious behaviors such as self-harm and sexual abuse.