April 8 news: DeepSeek has joined forces with Tsinghua University to launch SPCT (Self-Principled Critique Tuning), a new AI alignment technique that breaks with the traditional reliance on massive amounts of training data and instead optimizes output quality dynamically during the inference phase.

According to a paper the research team published on April 4, the technique is built on a recursive architecture of "principle synthesis → response generation → critique filtering → principle optimization", which lets the model dynamically correct its outputs as it reasons.

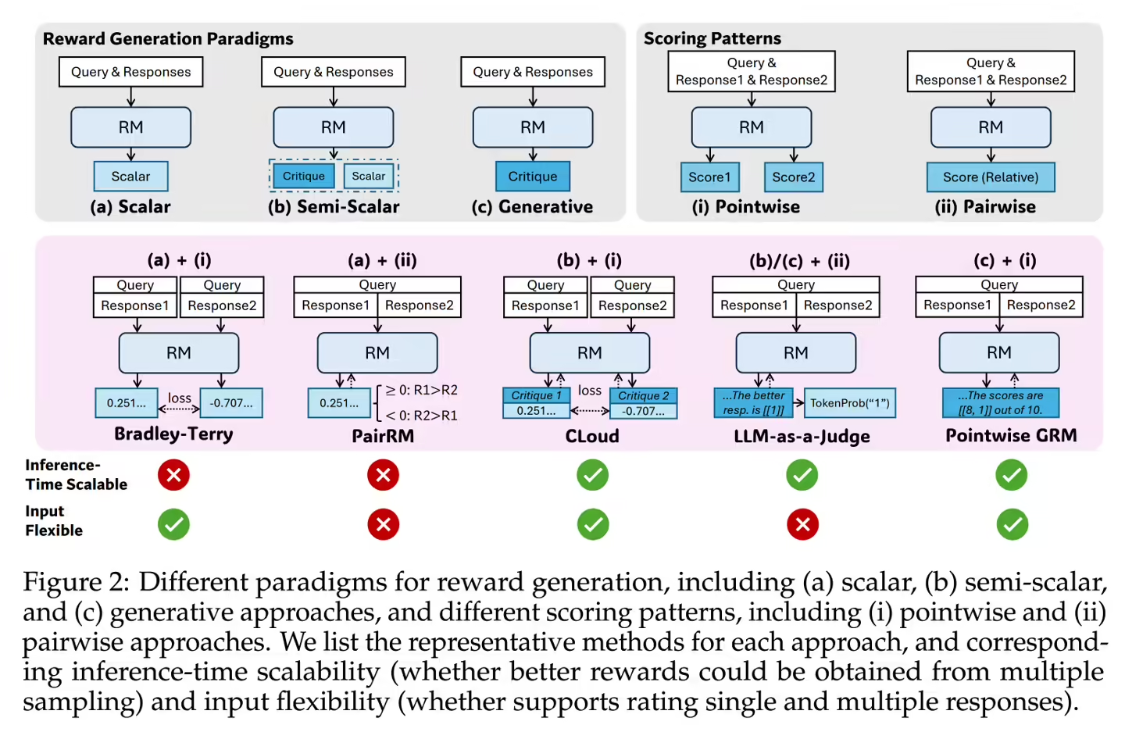

The SPCT approach has two phases. The first, rejection fine-tuning, serves as a cold start: it lets the generative reward model (GRM) adapt to different input types and produce principles and critiques in the correct format. The second is rule-based online reinforcement learning, which uses rule-based outcome rewards to encourage the GRM to generate better principles and critiques and improves its scalability at inference time.
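The two phases can be illustrated with a minimal sketch. The article does not spell out the exact reward rule, so the version below is an assumption: the reward is +1 when the GRM's scores single out the labelled best response and -1 otherwise, and the cold-start filter simply discards sampled outputs that fail the same check. The names `outcome_reward` and `rejection_filter` are hypothetical.

```python
from typing import Dict, List, Sequence


def outcome_reward(predicted_scores: Sequence[float], best_index: int) -> float:
    """Assumed rule: +1.0 if the scores uniquely rank the labelled best response first, else -1.0."""
    if not predicted_scores:
        raise ValueError("need at least one score")
    top = max(predicted_scores)
    # A tie at the top does not identify a single best response, so it counts as wrong.
    is_unique_top = list(predicted_scores).count(top) == 1
    return 1.0 if is_unique_top and predicted_scores[best_index] == top else -1.0


def rejection_filter(samples: List[Dict], best_index: int) -> List[Dict]:
    """Cold-start sketch: keep only sampled outputs whose scores agree with the label."""
    return [s for s in samples if outcome_reward(s["scores"], best_index) > 0]


# Two sampled GRM outputs for the same query; response 0 is labelled best.
samples = [{"scores": [4, 8]}, {"scores": [9, 5]}]
kept = rejection_filter(samples, best_index=0)
```

In this sketch the same rule serves both phases: as a filter for fine-tuning data in phase one, and as the scalar reward signal in phase two.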

Tests show that DeepSeek-GRM, with 27 billion parameters, reaches the performance level of a 671B-parameter model by spending inference compute on 32 samples per query. This hardware-aware design uses a mixture-of-experts (MoE) architecture and supports a 128k-token context window, with a single-query latency of only 1.4 seconds.
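The idea of trading inference compute for quality can be sketched as sampling the reward model several times per query and aggregating the results. The aggregation rule here (summing per-response scores across samples) is an assumption, and `noisy_judge` is a hypothetical stand-in for one GRM pass rather than the real model.

```python
import random
from typing import Callable, List


def vote_scores(score_once: Callable[[random.Random], List[int]],
                k: int = 32, seed: int = 0) -> List[int]:
    """Call the scorer k times and sum the scores each candidate response receives."""
    if k < 1:
        raise ValueError("k must be at least 1")
    rng = random.Random(seed)
    totals: List[int] = []
    for _ in range(k):
        scores = score_once(rng)
        if not totals:
            totals = [0] * len(scores)
        if len(scores) != len(totals):
            raise ValueError("every sample must score the same number of responses")
        totals = [t + s for t, s in zip(totals, scores)]
    return totals


def noisy_judge(rng: random.Random) -> List[int]:
    """Hypothetical single pass: a fixed 'true' quality plus noise, clipped to 1..10."""
    true_quality = [4, 7, 6]
    return [min(10, max(1, q + rng.randint(-2, 2))) for q in true_quality]


totals = vote_scores(noisy_judge, k=32)
best = max(range(len(totals)), key=totals.__getitem__)
print(totals, "-> preferred response index:", best)
```

A single noisy pass can easily misrank close candidates; summing over 32 passes averages much of that noise away, which is the intuition behind scaling samples per query instead of model size.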

The report points out that SPCT significantly lowers the barrier to deploying high-performance models. DeepSeek-GRM, for example, cost about $12,000 to train (about RMB 87,871 at the current exchange rate) and scored 8.35 on MT-Bench.

| Model | Parameters | MT-Bench | Estimated training cost |
|---|---|---|---|
| DeepSeek-GRM | 27B | 8.35 | $12,000 |
| Nemotron-4 | 340B | 8.41 | $1.2 million |
| GPT-4o | 1.8T | 8.72 | $6.3 million |

For comparison, the 340B-parameter Nemotron-4, which cost $1.2 million to train, scored 8.41. OpenAI's GPT-4o, with 1.8T parameters, scored 8.72, but at a cost of $6.3 million (about RMB 46.132 million at the current exchange rate); DeepSeek-GRM's cost is only 1/525 of that. Compared with DPO, the technique reduces the need for human annotation by 90% and energy consumption by 73%, opening up new possibilities for dynamic scenarios such as real-time robot control.
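The cost comparison can be checked directly from the figures quoted in the article. The short calculation below uses only those reported numbers; the exchange rate is not stated explicitly, so it is derived from the quoted dollar and RMB amounts.

```python
# Estimated training costs in USD, as quoted in the article.
costs_usd = {
    "DeepSeek-GRM": 12_000,
    "Nemotron-4": 1_200_000,
    "GPT-4o": 6_300_000,
}

baseline = costs_usd["DeepSeek-GRM"]

# How many times more each model cost than DeepSeek-GRM.
ratios = {name: cost / baseline for name, cost in costs_usd.items()}

# Exchange rate implied by the two RMB conversions quoted in the article.
implied_rate_grm = 87_871 / 12_000
implied_rate_gpt4o = 46_132_000 / 6_300_000

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.0f}x the DeepSeek-GRM cost")
print(f"implied RMB per USD: {implied_rate_grm:.2f} and {implied_rate_gpt4o:.2f}")
```

The GPT-4o ratio comes out to 525 and the Nemotron-4 ratio to 100, and both RMB conversions imply a rate of roughly 7.32 RMB per US dollar, so the article's figures are internally consistent.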