April 12, 2025 - Technology media outlet MarkTechPost published a blog post yesterday (April 11) reporting that NVIDIA has released Llama-3.1-Nemotron-Ultra-253B-v1. According to the report, this 253-billion-parameter large language model makes major gains in reasoning capability, architectural efficiency, and production readiness.

As AI becomes pervasive in digital infrastructure, organizations and developers must balance computational cost, performance, and scalability. Rapid progress in large language models (LLMs) has improved natural language understanding and conversational ability, but the sheer size of these models often makes them inefficient to run and limits their deployment at scale.

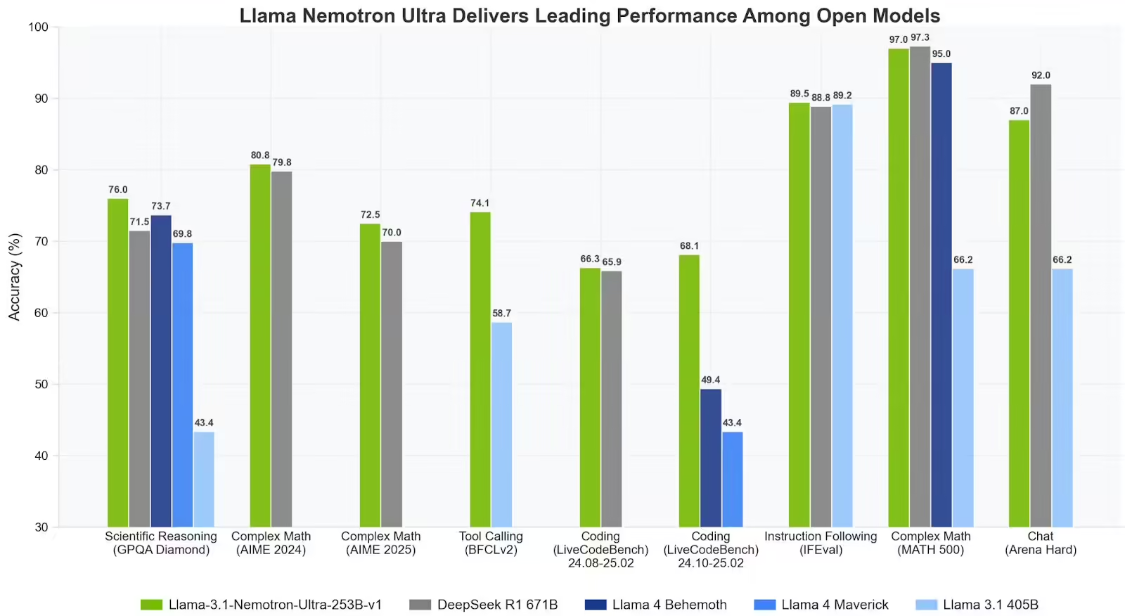

NVIDIA's newly released Llama-3.1-Nemotron-Ultra-253B-v1 (Nemotron Ultra for short) addresses this challenge directly. Built on Meta's Llama-3.1-405B-Instruct architecture and designed for commercial and enterprise use, the model supports tasks ranging from tool use to complex multi-turn instruction following.

Citing the blog post, 1AI describes Nemotron Ultra as a decoder-only dense Transformer whose structure was optimized with Neural Architecture Search (NAS). Its key innovation is a skip-attention mechanism: in some layers, the attention module is omitted entirely or replaced with a single linear layer.
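To make the skip-attention idea concrete, here is a minimal pure-Python sketch of an attention sublayer whose form can be chosen per layer, as a NAS procedure might do. It is an illustration under stated assumptions, not NVIDIA's implementation: the mode names `"attention"`, `"linear"`, and `"skip"`, the single-head attention with identity query/key/value projections, the omitted normalization, and all helper names are hypothetical simplifications.

```python
import math
from typing import List, Optional

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(xs: List[Vector]) -> List[Vector]:
    """Single-head causal attention with identity Q/K/V projections (simplifying assumption)."""
    d = len(xs[0])
    out = []
    for t, q in enumerate(xs):
        visible = xs[: t + 1]  # causal mask: token t only sees tokens 0..t
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in visible])
        out.append([sum(w * v[j] for w, v in zip(weights, visible)) for j in range(d)])
    return out


def attention_sublayer(xs: List[Vector], mode: str, linear_w: Optional[Matrix] = None) -> List[Vector]:
    """Residual attention sublayer whose form is picked per layer (hypothetical modes)."""
    if mode == "skip":
        # Attention omitted: the residual stream passes through unchanged.
        return [list(x) for x in xs]
    if mode == "linear":
        if linear_w is None:
            raise ValueError("linear mode needs a weight matrix")
        # Cheap replacement: a per-token linear map with no mixing across tokens.
        mixed = [matvec(linear_w, x) for x in xs]
    elif mode == "attention":
        mixed = causal_self_attention(xs)
    else:
        raise ValueError(f"unknown mode: {mode}")
    # Residual connection around whichever sublayer was kept.
    return [[a + b for a, b in zip(x, m)] for x, m in zip(xs, mixed)]


# A hypothetical per-layer plan, as a NAS step might emit it.
plan = ["attention", "skip", "linear", "attention"]
identity = [[1.0, 0.0], [0.0, 1.0]]
hidden = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
for mode in plan:
    hidden = attention_sublayer(hidden, mode, identity)
print(hidden)
```

The cost saving comes from the branches: a `"skip"` layer does no work at all, and a `"linear"` layer costs time proportional to sequence length rather than its square, because it never compares tokens with each other.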

In addition, feed-forward network (FFN) fusion merges the runs of consecutive FFN layers left behind where attention has been skipped into fewer, wider layers, substantially reducing inference time while preserving output quality. The model supports a 128K-token context window and can handle long inputs, making it well suited to advanced retrieval-augmented generation (RAG) systems and multi-document analysis.
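The identity that makes FFN fusion possible fits in a few lines. When several FFNs are applied in parallel to the same input and their outputs are summed, the result equals one wider FFN whose input projections are stacked and whose output projections are concatenated. Fusing a sequential chain of FFNs also requires treating that chain as if it ran in parallel, which is an approximation; this sketch only demonstrates the exact parallel-to-wide step. ReLU, the weight values, and the function names are illustrative assumptions. Llama-family models use a gated activation, but the same concatenation works for any activation computed independently per hidden unit.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
FFN = Tuple[Matrix, Matrix]  # (w_in: hidden x d_model, w_out: d_model x hidden)


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def ffn(x: Vector, layer: FFN) -> Vector:
    """Two-layer FFN with ReLU (a stand-in for the gated activation used in practice)."""
    w_in, w_out = layer
    return matvec(w_out, [max(0.0, h) for h in matvec(w_in, x)])


def fuse_ffns(layers: List[FFN]) -> FFN:
    """Merge FFNs that run in parallel on the same input into one wider FFN."""
    # Stack the input projections: the hidden dimensions add up.
    w_in = [row for layer_in, _ in layers for row in layer_in]
    # Concatenate the output projections column-wise, one output row at a time.
    d_model = len(layers[0][1])
    w_out = [[v for _, layer_out in layers for v in layer_out[r]] for r in range(d_model)]
    return w_in, w_out


# Two small hypothetical FFNs with hidden sizes 2 and 3 (d_model = 2).
f1: FFN = ([[1.0, -1.0], [0.5, 2.0]], [[1.0, 0.0], [0.0, 1.0]])
f2: FFN = ([[-1.0, 1.0], [2.0, 0.5], [1.0, 1.0]], [[0.5, 0.0, 1.0], [1.0, -0.5, 0.0]])
x = [0.3, -0.7]

# Exact: the sum of parallel FFNs equals the single fused (wider) FFN.
parallel = [a + b for a, b in zip(ffn(x, f1), ffn(x, f2))]
fused = ffn(x, fuse_ffns([f1, f2]))

# Approximation being made: the original chain feeds f1's output into f2.
h = [a + b for a, b in zip(x, ffn(x, f1))]
sequential = [a + b for a, b in zip(h, ffn(h, f2))]
fused_block = [a + b for a, b in zip(x, fused)]

print("parallel:", parallel)
print("fused:   ", fused)
print("sequential chain:", sequential, "fused block:", fused_block)
```

The fused layer does the same arithmetic as the separate FFNs but as one larger matrix multiply, which GPUs execute more efficiently than several small, dependent ones.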

Nemotron Ultra also improves deployment efficiency: inference can run on a single 8xH100 node, which lowers data center costs and makes the model more accessible to enterprise developers.
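A quick back-of-the-envelope calculation shows why the parameter count matters for single-node deployment. This sketch assumes BF16 weights (2 bytes per parameter), the 80 GB H100 variant, and exactly 253 and 405 billion parameters; the article does not state the serving precision, and the estimate ignores KV cache and activation memory, which must fit in whatever headroom remains.

```python
def weight_memory_gb(num_params: int, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


BYTES_BF16 = 2   # assumption: weights stored in BF16
GPUS_PER_NODE = 8
GB_PER_GPU = 80  # assumption: the 80 GB H100 variant

node_gb = GPUS_PER_NODE * GB_PER_GPU

models = [
    ("Nemotron Ultra (253B)", 253_000_000_000),
    ("Llama-3.1-405B", 405_000_000_000),
]
for name, params in models:
    weights_gb = weight_memory_gb(params, BYTES_BF16)
    fits = weights_gb <= node_gb
    print(f"{name}: weights {weights_gb:.0f} GB of {node_gb} GB node, "
          f"fits={fits}, headroom {node_gb - weights_gb:.0f} GB")
```

Under these assumptions the 253B model's weights take about 506 GB of the node's 640 GB, leaving roughly 134 GB for the KV cache and activations, while the 405B parent would need about 810 GB for weights alone.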

NVIDIA further refined the model with multi-stage post-training: supervised fine-tuning on tasks such as code generation, math, dialogue, and tool calling, plus reinforcement learning (RL) with the Group Relative Policy Optimization (GRPO) algorithm. According to NVIDIA, these steps help the model perform well on benchmarks and align closely with human preferences in interactive use.
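The step that gives GRPO its name is the group-relative advantage: several responses are sampled for the same prompt, and each response's reward is normalized against the mean and standard deviation of its own group. The sketch below shows only that step, as GRPO is commonly described; the binary verifier-style rewards, the use of population standard deviation, the `eps` value, and the function name are assumptions for illustration, not details of NVIDIA's training setup.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Normalize each sampled response's reward against its own group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std over the group (assumption)
    # eps avoids division by zero when every response gets the same reward.
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical example: four responses sampled for one prompt,
# scored 1.0 if a checker accepts the answer and 0.0 otherwise.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print([round(a, 3) for a in advantages])
```

Because the baseline comes from the group itself, GRPO needs no separately trained value (critic) model, which makes it cheaper than PPO-style training. In the full algorithm these advantages feed a clipped policy-gradient objective with a KL penalty toward a reference model; that part is omitted here.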