According to the official WeChat account of the Shanghai Artificial Intelligence Laboratory, on April 16 the lab (Shanghai AI Lab) upgraded and open-sourced its general-purpose multimodal large model Shusheng Wanxiang 3.0 (InternVL3).

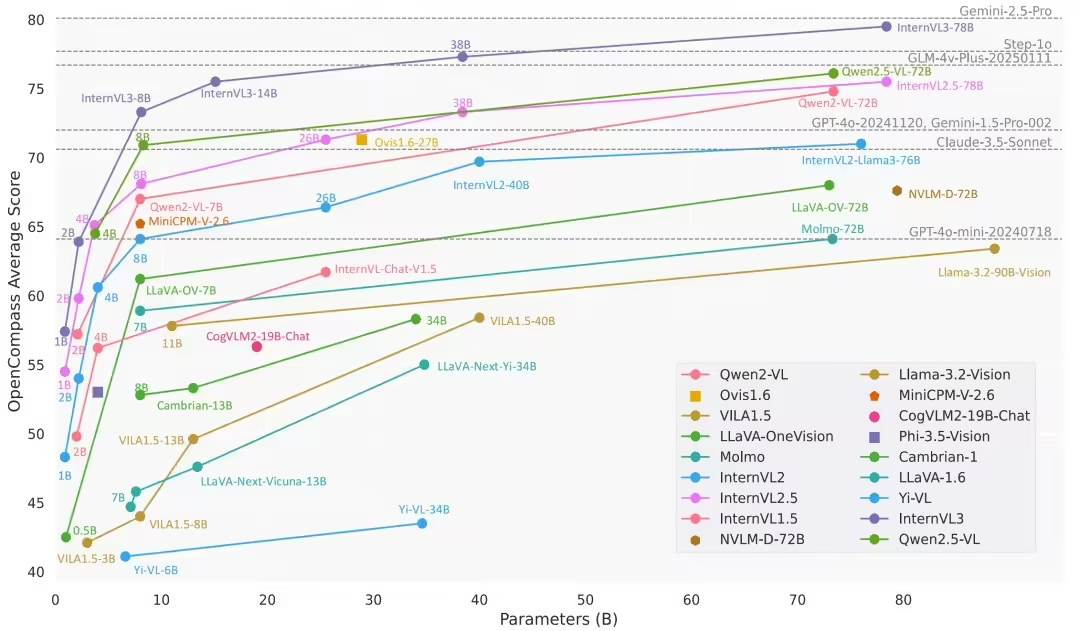

According to the lab, innovative multimodal pre-training and post-training methods have comprehensively improved InternVL3's core multimodal capabilities. On expert-level benchmarks and comprehensive multimodal evaluations, every model in the series, from 1 billion to 78 billion parameters, ranked first among open-source models of its size. The release also significantly improves graphical user interface (GUI) agent capabilities, understanding of architectural scene drawings, spatial perception and reasoning, and general-discipline reasoning.

According to the report, the team proposed an innovative native multimodal pre-training approach. Unlike the traditional approach of first training a large language model and then adding visual capabilities, this approach combines text-only data and multimodal data in the model's pre-training phase, so the model learns language and vision at the same time and can process both text and multimodal inputs.
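
To make the idea of a single pre-training stage concrete, here is a minimal sketch, assuming a simple random mixing scheme, of a data stream that draws text-only and image-text samples from one pipeline instead of running separate language and vision stages. The `Sample` type, the `mixed_pretraining_stream` function, the `text_ratio` mixing weight, and the image path are all hypothetical illustrations; this is not InternVL3's actual data pipeline, whose details are in the technical report.

```python
import random
from dataclasses import dataclass
from typing import Iterator, List, Optional


@dataclass
class Sample:
    text: str
    image: Optional[str] = None  # hypothetical image path; None marks a text-only sample


def mixed_pretraining_stream(
    text_only: List[Sample],
    multimodal: List[Sample],
    text_ratio: float,
    num_samples: int,
    seed: int = 0,
) -> Iterator[Sample]:
    """Yield one training stream that mixes both corpora (hypothetical scheme).

    text_ratio is an assumed mixing weight: the probability that each drawn
    sample comes from the text-only corpus rather than the image-text corpus.
    """
    rng = random.Random(seed)  # seeded so the mix is reproducible
    for _ in range(num_samples):
        pool = text_only if rng.random() < text_ratio else multimodal
        yield rng.choice(pool)


# Usage: both kinds of samples end up in the same stream the model trains on.
text_corpus = [Sample("The quick brown fox jumps over the lazy dog.")]
mm_corpus = [Sample("A cat sitting on a mat.", image="cat.jpg")]
batch = list(mixed_pretraining_stream(text_corpus, mm_corpus, text_ratio=0.5, num_samples=100, seed=42))
```

Keeping both data types in one stream is the point of the sketch: the model never passes through a text-only phase followed by a separate vision phase.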

In addition to handling general multimodal tasks, InternVL3 extends its multimodal capabilities in several directions, including GUI agents, architectural scene drawing understanding, spatial perception and reasoning, and general-discipline reasoning.

According to the introduction, InternVL3 can act as a GUI agent that follows instructions to operate specialized software on computers or mobile phones.

1AI summarizes the relevant links below:

- Technical report: https://huggingface.co/papers/2504.10479

- Open-source code / model usage: https://github.com/OpenGVLab/InternVL

- Model address: https://huggingface.co/OpenGVLab/InternVL3-78B

- Public Beta: https://chat.intern-ai.org.cn/