-

Moonshot AI launches its strongest open-source model yet

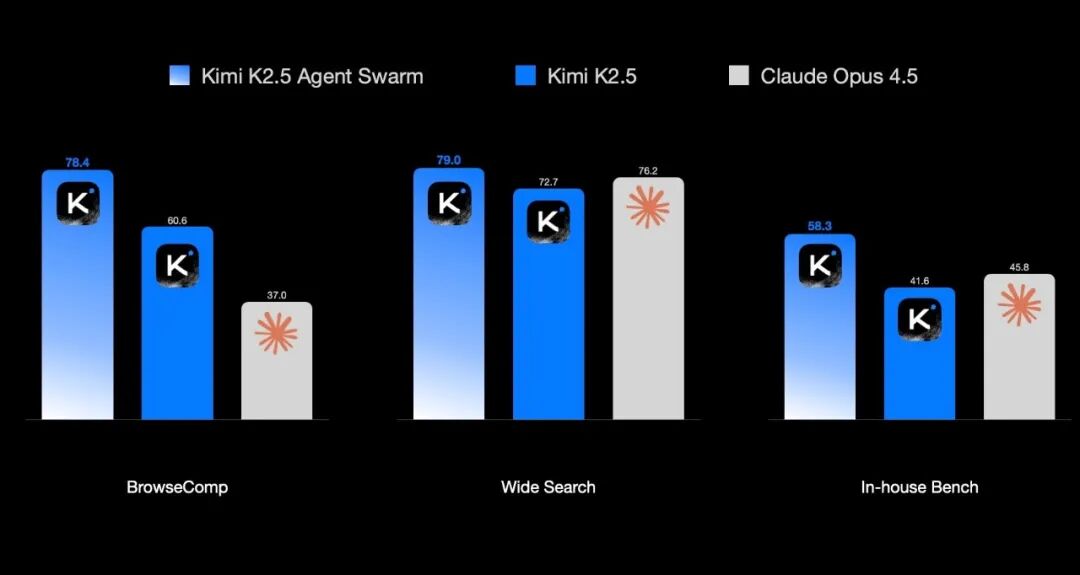

On January 28, Moonshot AI officially released Kimi K2.5, the latest version of its flagship model, with across-the-board upgrades in visual and multimodal understanding, code generation, and agent capabilities. According to the company, Kimi K2.5 uses a native multimodal architecture, accepts text, image, and video input, and can perform tasks such as image analysis, video analysis, and visual programming. Official demos show the model generating 3D models from plans, reconstructing web interfaces from videos, and achieving more accurate path planning and visual debugging in image-reasoning tasks...

-

Alibaba Cloud open-sources the 6B-parameter Z-Image base model, generating photos without the generic AI "face"

On January 28, Alibaba Cloud officially open-sourced the Z-Image base model. The model has 6B parameters; as a non-distilled base model, it retains the full weight distribution, supports classifier-free guidance (CFG), and provides a foundation for fine-tuning tasks such as LoRA and ControlNet. Z-Image claims to break the limits of a single style: whether photorealism that pursues light and shadow, or anime and digital art with emotional tension, Z-Image captures and reconstructs every...
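The item above says Z-Image's base model supports CFG. As a rough illustration of what that means, the sketch below shows the standard classifier-free guidance combination step in general form: each denoising step runs the model twice, once with the prompt and once without, then extrapolates from the unconditional prediction toward the conditional one. The `toy_denoiser` function, its signature, and the guidance scales are hypothetical stand-ins, not Z-Image's actual API or defaults.

```python
from typing import Callable, List, Optional

Vector = List[float]
# Hypothetical denoiser signature: (latent, prompt or None) -> predicted noise.
Denoiser = Callable[[Vector, Optional[str]], Vector]


def cfg_predict(denoise: Denoiser, latent: Vector, prompt: str, guidance_scale: float) -> Vector:
    """Combine unconditional and conditional predictions with classifier-free guidance."""
    eps_uncond = denoise(latent, None)   # pass 1: no prompt
    eps_cond = denoise(latent, prompt)   # pass 2: with prompt
    # eps = eps_uncond + w * (eps_cond - eps_uncond); w = 1 reproduces eps_cond.
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def toy_denoiser(latent: Vector, prompt: Optional[str]) -> Vector:
    """Hypothetical stand-in model: the prompted pass shifts every value by 1.0."""
    shift = 1.0 if prompt is not None else 0.0
    return [v + shift for v in latent]


if __name__ == "__main__":
    latent = [0.0, 0.5]
    print(cfg_predict(toy_denoiser, latent, "a cat", 1.0))  # [1.0, 1.5]
    print(cfg_predict(toy_denoiser, latent, "a cat", 3.0))  # [3.0, 3.5]
```

A scale of 1 returns the conditional prediction unchanged; larger scales push the output further in the prompt's direction, at the cost of the second forward pass per step.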

-

Clawdbot is here: install a 24/7 AI assistant

The open-source AI assistant Clawdbot has recently become very popular online: it can run on a server 24/7, and users direct it to do all kinds of work by sending it messages through instant-messaging platforms. First, a look at what it can do: Clawdbot is better at actually getting things done than an ordinary AI chatbot; in the example above, it handled all the work of downloading the YouTube plugin. So how do you get it? I. Requirements for deploying Clawdbot. 1. Telegram: this is the simplest option, officially recommended...

-

Clawdbot installation and introduction (a hands-on guide for beginners)

What is Clawdbot? Clawdbot is an open-source, self-hosted AI assistant framework maintained by community developers (website: clawd.bot) that lets you integrate AI models (e.g., Anthropic Claude, OpenAI GPT, or other API-supported models) into chat applications. Through natural-language conversation, you can have the AI execute server commands, read and write files, search the web, manage calendars, send email, control other services, and even access phone cameras or push notifications...

-

Coderrr: a powerful open-source AI coding assistant CLI that writes, debugs, and deploys code

Coderrr is an open-source AI coding assistant designed to speed up development. From natural-language descriptions, it generates, debugs, and deploys code, and suits a wide range of development scenarios. Coderrr features: AI-driven code generation: generates production-ready code from natural-language descriptions, simplifying the development process. Self-healing error recovery: automatically analyzes errors and retries with fixes, improving code quality and development efficiency. Multi-turn conversational iteration: development proceeds through natural dialogue, making the process smoother and closer to how people think. Smart codebase understanding:...

-

Musk announces X has open-sourced its new recommendation algorithm, with the core code fully public

According to a January 21 report, Elon Musk announced the previous day that X has officially open-sourced its new recommendation algorithm and simultaneously published the complete code repository on GitHub. The X Engineering team wrote that the new algorithm is built on the same Transformer architecture as xAI's Grok model and covers all of the platform's core recommendation logic for deciding both organic content and ads. Musk added that X will update the algorithm every four weeks going forward, accompanied by developer notes, so that outsiders can understand changes to the recommendation mechanism. He's...

-

Former Google CEO Schmidt: Europe must invest in open-source AI or depend on Chinese models

On January 21, Bloomberg reported that former Google CEO and technology investor Eric Schmidt said on Tuesday that Europe must invest in building its own open-source AI labs and tackle soaring energy prices, or it will soon find itself dependent on Chinese models. Speaking at the World Economic Forum in Davos on Tuesday, Schmidt said: "In the United States, companies are largely moving to closed source, which means these technologies will be purchased, licensed, and so on. At the same time, China's approach is largely open-weight and open-source. Unless Europe is willing...

-

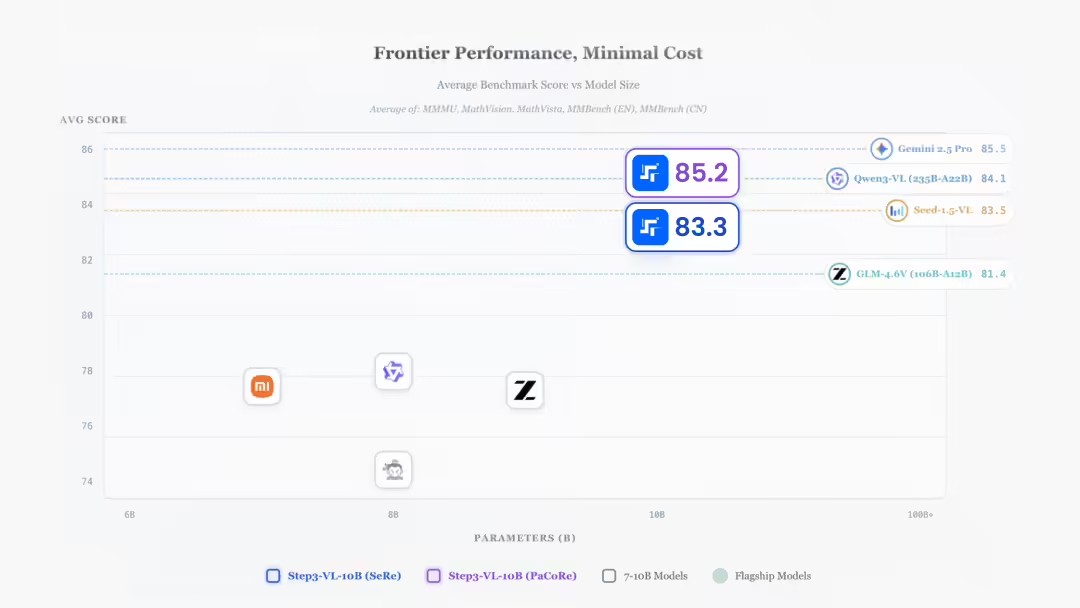

Step3-VL-10B: performance on par with hundred-billion-parameter models

On January 21, StepFun open-sourced Step3-VL-10B. According to the company, with only 10B parameters, Step3-VL-10B achieves SOTA among models of its size on a series of benchmarks covering visual perception, logical reasoning, math competitions, and general dialogue. 1AI includes the official introduction below: With only 10B parameters, Step3-VL-10B in visual perception,...

-

Zhipu releases and open-sources the GLM-4.7-Flash model, free to use

On January 20, Zhipu officially released and open-sourced the GLM-4.7-Flash model. GLM-4.7-Flash is a hybrid thinking model with 30B total parameters and 3B activated parameters, billed as SOTA among models of its size, offering a new option for lightweight deployment that balances performance and efficiency. Starting today, GLM-4.7-Flash replaces GLM-4.5-Flash on Zhipu's open platform BigModel.cn and is free to call...

-

A new rival to Nano Banana Pro: Zhipu and Huawei open-source the first SOTA multimodal model trained on domestic Chinese chips

On January 14, it was reported that Zhipu, together with Huawei, open-sourced its new-generation image generation model GLM-Image. Built on Atlas 800T A2 hardware and the MindSpore AI framework, which handled the full pipeline from data to training, it is the first SOTA multimodal model fully trained on domestic Chinese chips. GLM-Image combines image generation with a language model through an in-house "autoregressive + diffusion decoder" hybrid architecture. 1AI lists GLM-Image's core highlights below...

-

China's first open-source vertical large language model for general agriculture: "Sinong"

On January 14, Nanjing Agricultural University announced that last week, at the sub-forum "Technology Empowering the All-Round Transformation of Agricultural and Forestry Education" of the 2025 annual conference of the Higher Agricultural and Forestry Education Branch of the China Association of Higher Education, the university officially launched the Sinong large language model. Developed by Nanjing Agricultural University, Sinong is China's first open-source vertical large language model for general agriculture. Its release marks a new breakthrough in the university's research on and application of foundation AI models in agriculture. "Sinong...

-

10 trillion tokens! NVIDIA contributes the world's largest open-source dataset and launches open-source AI models in four domains

On January 6, in his CES 2026 keynote, NVIDIA CEO Jensen Huang announced a major expansion of the company's open-source model library, releasing new models and datasets covering four main areas (language, robotics, autonomous driving, and medicine) to further accelerate AI innovation across industries. NVIDIA contributed open-source training frameworks and the world's largest open multimodal data collection, including 10 trillion language training tokens, 500,000 robot trajectories, 455,000 protein structures, and 100 TB of vehicle sensor...

-

Tencent open-sources translation model 1.5: runs on a phone with 1GB of memory and beats commercial APIs

On December 31, Tencent Hunyuan announced the open-sourcing of its translation model 1.5, which comprises two models, Tencent-HY-MT1.5-1.8B and Tencent-HY-MT1.5-7B. They support translation among 33 languages and 5 Chinese ethnic-minority languages and dialects, covering not only common languages such as Chinese, English, and Japanese but also less widely spoken ones such as Czech, Marathi, Estonian, and Icelandic. Both models are now live on the official website and on open-source communities such as GitHub and Hugging Face...
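The 1GB figure in the headline is easier to read with a back-of-the-envelope estimate. The sketch below computes the memory needed for the 1.8B model's weights alone at a few bit widths. Which quantization Tencent actually ships is not stated in the item, so the bit widths are assumptions, and the estimate deliberately ignores activations, the KV cache, and runtime overhead.

```python
def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Approximate storage for model weights alone, in GiB (2**30 bytes)."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 2**30


if __name__ == "__main__":
    params = 1.8e9  # parameter count taken from the name Tencent-HY-MT1.5-1.8B
    # Assumed bit widths: 16-bit (fp16/bf16), 8-bit and 4-bit quantization.
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit: {weight_memory_gib(params, bits):.2f} GiB")
```

Under these assumptions, 16-bit weights need about 3.35 GiB and 8-bit about 1.68 GiB, so only the 4-bit case (about 0.84 GiB) stays under the 1GB mark.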

-

Open-source virtual human video generation model LongCat-Video-Avatar: lifelike even when it isn't talking

On December 19, according to a post on LongCat's official account, Meituan's LongCat team officially released and open-sourced LongCat-Video-Avatar, a SOTA-level virtual human video generation model. Built on the LongCat-Video base model, it continues the core "One Model for Multitask" design and supports core functions such as Audio-Text-to-Video, Audio-Text-Image-to-Video, and video continuation, as well as...

-

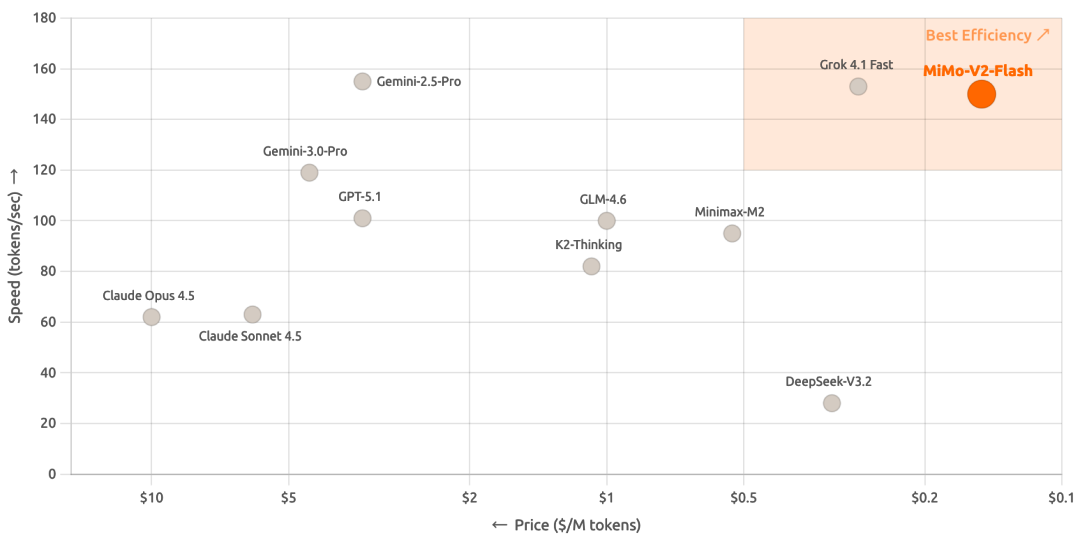

Xiaomi suddenly releases a new model that rivals DeepSeek-V3.2

On December 17, Xiaomi officially released and open-sourced its new MiMo-V2-Flash model. First, performance: MiMo-V2-Flash has 309 billion total parameters and 15 billion active parameters, uses a Mixture-of-Experts (MoE) architecture, and can go head-to-head with leading open-source models such as DeepSeek-V3.2 and Kimi-K2. Set the "open source" label aside, and MiMo-V2-Flash's real killer feature is a radical architectural innovation that pushes inference speed to 150 to...
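The gap between total and active parameters in an MoE model comes from routing: a gating network scores every expert for each token, and only the top-k experts actually run. The sketch below is a generic top-k routing layer in plain Python; the number of experts, k = 2, and the toy experts are illustrative assumptions, not MiMo-V2-Flash's actual configuration or code.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate_weights: List[Vector], experts: List[Expert],
                k: int = 2) -> Tuple[Vector, List[int]]:
    """Route one token through its top-k experts and mix their outputs."""
    # One gating score per expert: dot product of that expert's gate row with x.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the k highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize the gate over the chosen experts only.
    weights = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for weight, i in zip(weights, chosen):
        y = experts[i](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    # Four toy experts: expert i multiplies its input by (i + 1).
    experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
    gate = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]]
    out, chosen = moe_forward([1.0, 0.0], gate, experts, k=2)
    print(chosen)  # [3, 2]: only 2 of the 4 experts run for this token
    print(out)
```

Per token, compute scales with the experts that actually run rather than with all of them, which is why an MoE model's active parameter count can be a small fraction of its total.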

-

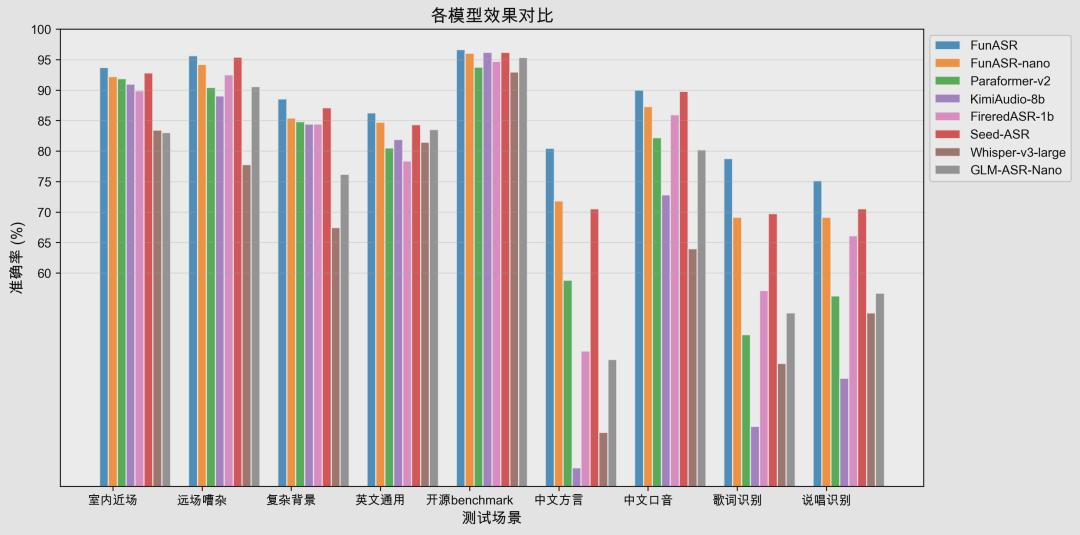

Alibaba Tongyi launches new voice models: "clone" a voice in 3 seconds across 9 languages and 18 dialects

On December 16, Tongyi's large-model team announced on its official account that it had open-sourced two "Bailing" voice models and upgraded two others. According to the announcement, three seconds of your voice is enough to switch it seamlessly across languages, dialects, and emotions, such as Mandarin, Japanese, English, happiness, and anger, covering 9 languages and 18 dialects. Fun-CosyVoice3 upgrade: first-packet latency cut by 50%, Chinese and English accuracy doubled, plus support for 9 languages and 18 dialects, cross-lingual cloning, and emotion control; ...

-

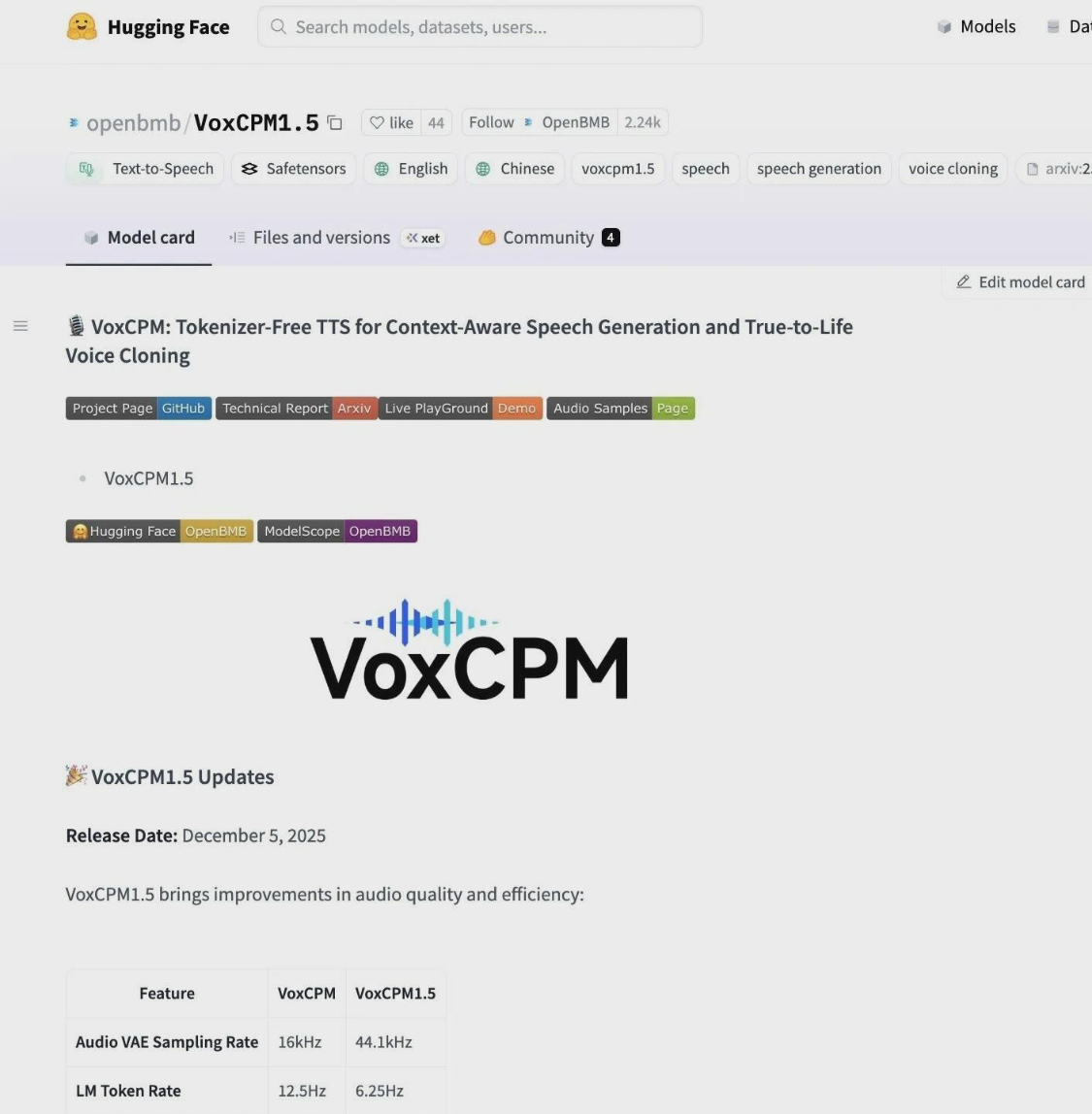

VoxCPM 1.5 speech generation model open-sourced: high-sample-rate audio cloning and doubled efficiency

On December 11, ModelBest announced that VoxCPM 1.5 is officially live; alongside continued improvements to the developer experience, it brings several core capability upgrades. VoxCPM is a 0.5B-parameter speech generation foundation model first released in September this year. 1AI summarizes the VoxCPM 1.5 highlights: High-sample-rate audio cloning: the AudioVAE sampling rate rises from 16 kHz to 44.1 kHz, letting the model reproduce sound better and in finer detail from high-quality audio; ...
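Why a higher sampling rate can capture more detail follows from the Nyquist limit: audio sampled at rate f_s can represent frequencies only up to f_s / 2. The sketch below applies that general signal-processing rule to 16 kHz and 44.1 kHz, the before and after AudioVAE rates for VoxCPM 1.5; it assumes nothing about VoxCPM's internals beyond those two numbers.

```python
def nyquist_hz(sample_rate_hz: float) -> float:
    """Highest frequency a signal sampled at sample_rate_hz can represent."""
    return sample_rate_hz / 2


def samples_per_second_ratio(old_rate_hz: float, new_rate_hz: float) -> float:
    """How many times more samples per second of audio the new rate produces."""
    return new_rate_hz / old_rate_hz


if __name__ == "__main__":
    old, new = 16_000, 44_100
    print(f"16 kHz   -> content up to {nyquist_hz(old) / 1000:.2f} kHz")
    print(f"44.1 kHz -> content up to {nyquist_hz(new) / 1000:.2f} kHz")
    print(f"samples per second grow by {samples_per_second_ratio(old, new):.2f}x")
```

The 22.05 kHz ceiling covers roughly the full range of human hearing, while 8 kHz cuts off much of the high-frequency content in speech; the trade-off is about 2.76 times as many samples per second for the model to handle.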

-

AutoGLM: turning every phone into an "AI phone"

On December 10, Zhipu AI announced that it is officially open-sourcing the AutoGLM project, aiming to make the phone-use AI agent a public good for the whole industry. First end-to-end operation: on October 25, 2024, AutoGLM was described as the world's first AI agent with Phone Use capabilities to stably complete full operation chains on real devices. Cloud phones and security design: in 2025, AutoGLM 2.0 launched, using MobileRL, Comp...

-

Zhipu releases and open-sources the GLM-4.6V series of multimodal large models and cuts API prices by 50%

On December 9, Zhipu AI released and open-sourced the GLM-4.6V series of multimodal large models, including GLM-4.6V (106B-A12B), the base version for cloud and high-performance cluster scenarios, and GLM-4.6V-Flash (9B), a lightweight version for local deployment and low-latency applications. GLM-4.6V extends the training context window to 128k tokens and achieves SOTA visual-understanding accuracy among models of its size...

-

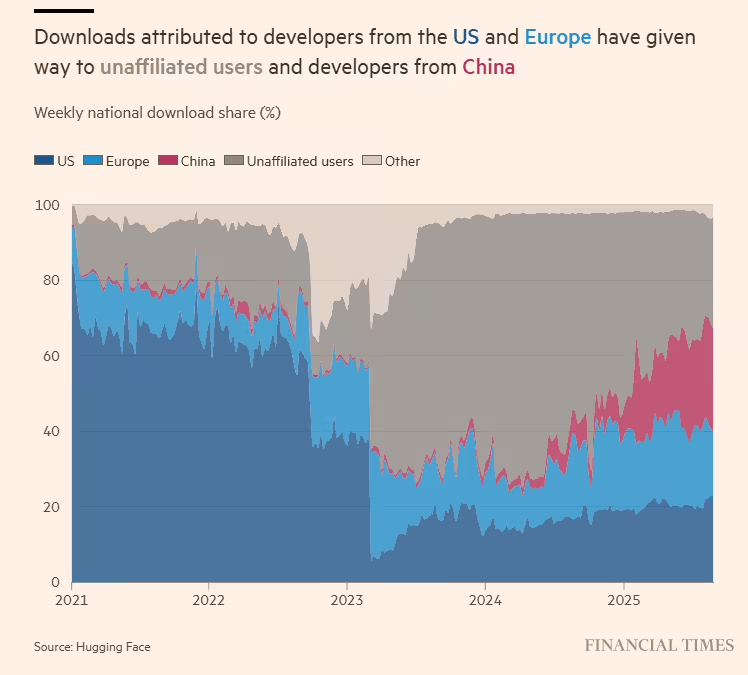

Study: downloads of Chinese open-source AI models overtook the US over the past year for the first time

On November 28, according to the Financial Times, China has overtaken the United States in the global market for open-source AI models, gaining a key advantage in the worldwide adoption of this powerful technology. According to the report, a study by the Massachusetts Institute of Technology and the open-source AI startup Hugging Face found that, over the past year, the share of global open-source model downloads going to models developed by Chinese teams rose to 17%, more than the 15...

-

China's "openness" in the open-source AI model market stands in stark contrast to OpenAI's "closed" approach

On November 26, the Financial Times reported that China had overtaken the United States in the global "open-source" AI market, gaining a key advantage in the worldwide adoption of this powerful technology. A study by MIT and the open-source AI startup Hugging Face found that over the past year, the share of total open-source model downloads going to newly developed Chinese models rose to 17%, surpassing the 15.8% held by US companies such as Google, Meta, and OpenAI. This is the first time Chinese companies have overtaken their American counterparts in this area. Open...

-

Zhipu chairman Liu Debing: we fully support open source and have open-sourced more than 40 AI models

On the morning of November 16, at the 2025 AI+ Congress, Zhipu chairman Liu Debing said: "Zhipu has its own attachment to open source and favors openness and sharing. At the practical, strategic level, open source benefits the AI industry: AI needs large numbers of people in every field to take part, and foundation models need many people to study and experiment with them together." "We fully support open source, and we have now open-sourced more than 40 models," Liu said. He also noted that Zhipu is considering how to explore commercial returns on an open-source basis through...

-

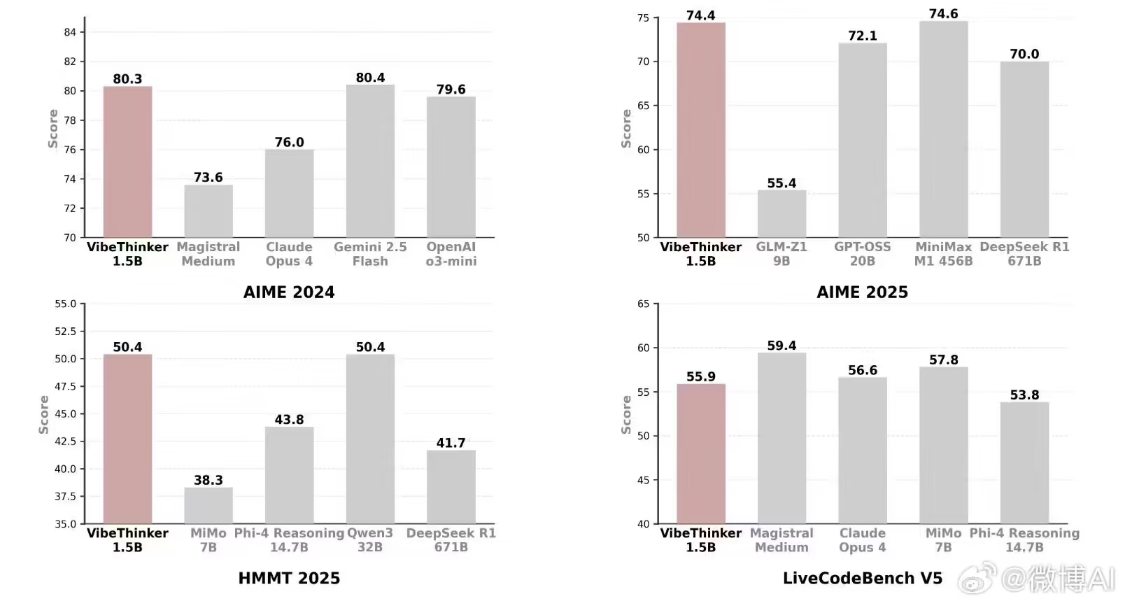

Sina Weibo releases its first open-source large model, VibeThinker-1.5B: a small model taking on giant-parameter rivals

On November 14, Sina Weibo released its first open-source large model, VibeThinker-1.5B, under the slogan "Small models can be smart too." 1AI includes the official introduction below: Do only the industry's most powerful models, at 1T or even 2T parameters, reach a high level of intelligence? Can only a handful of tech giants build large models? VibeThinker-1.5B is Weibo AI's answer of "no" to these questions, showing that small models can also have a high IQ. It's...

-

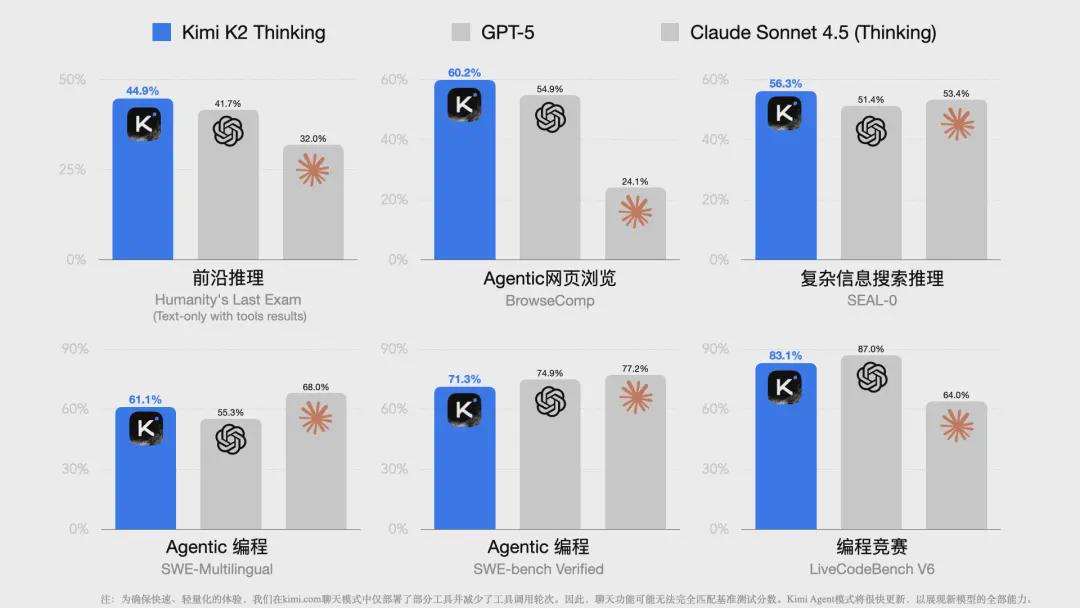

Moonshot AI's Kimi K2 Thinking reportedly cost $4.6 million to train, yet outperforms OpenAI GPT models backed by billions of dollars in investment

On November 9, it was reported that Moonshot AI launched its most powerful open-source thinking model to date on Thursday. According to Moonshot AI, Kimi K2 Thinking scored 44.9% on Humanity's Last Exam (HLE), surpassing advanced models such as GPT-5, Grok-4, and Claude 4.5. However, CNBC, citing people familiar with the matter, reported that Kimi K2 Thinking...
