April 19 news: Google published a blog post yesterday (April 18) announcing versions of its Gemma 3 models optimized with quantization-aware training (QAT), which cut memory requirements while maintaining high quality.

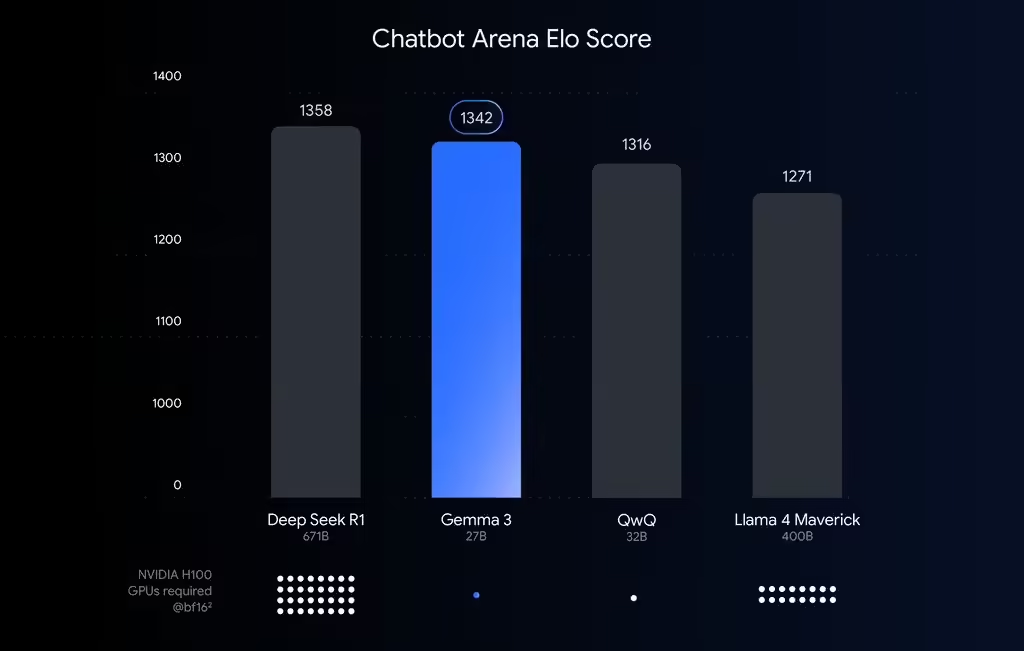

Last month, Google launched the Gemma 3 open-source models, which run efficiently on a single NVIDIA H100 GPU at BFloat16 (BF16) precision.

Citing the blog post, 1AI reports that Google, responding to user demand, set out to bring Gemma 3's strong performance to widely available hardware. Quantization is the key technique: it sharply reduces storage by lowering the numerical precision of model parameters (for example, from 16 bits in BF16 to 4 bits in int4), much as image compression reduces the number of colors in a picture.
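As a rough illustration of the idea, and not Gemma 3's actual quantization scheme, the sketch below maps a few float weights onto the 16 signed integers that 4 bits can represent, using round-to-nearest with a single per-tensor scale, and then packs two 4-bit values into each byte. The function names and sample weights are hypothetical; production formats such as llama.cpp's Q4_0 keep a separate scale for each small block of weights instead.

```python
# Minimal sketch of symmetric, per-tensor int4 quantization.
# Assumption: round-to-nearest with one float scale for the whole tensor.
# Real formats (e.g. llama.cpp's Q4_0) use a separate scale per block of weights.

def quantize_int4(weights):
    """Map floats to integers in [-8, 7] plus a single float scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale

def dequantize_int4(q, scale):
    """Recover approximate floats; the error per weight is at most scale / 2."""
    return [v * scale for v in q]

def pack_int4(q):
    """Store two signed 4-bit values per byte (low nibble first)."""
    if len(q) % 2:
        q = q + [0]  # pad to an even count
    return bytes((a & 0xF) | ((b & 0xF) << 4) for a, b in zip(q[0::2], q[1::2]))

weights = [0.82, -0.41, 0.05, -0.77, 0.33]  # hypothetical sample weights
q, scale = quantize_int4(weights)
restored = dequantize_int4(q, scale)
packed = pack_int4(q)

print("int4 values:", q)
print("restored:", [round(r, 3) for r in restored])
print("bytes: BF16 =", 2 * len(weights), "| packed int4 =", len(packed))  # → bytes: BF16 = 10 | packed int4 = 3
```

Like reducing an image's palette, each weight is snapped to the nearest of a small set of levels; the storage drops roughly fourfold at the cost of a bounded rounding error per weight.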

With int4 quantization, the GPU memory (VRAM) required by Gemma 3 27B drops sharply from 54 GB to 14.1 GB, and Gemma 3 12B drops from 24 GB to 6.6 GB; Gemma 3 1B needs only 0.5 GB of VRAM.
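The headline figures follow roughly from parameters × bits per parameter ÷ 8. The estimate below is a sketch that assumes nominal parameter counts and counts weights only; the helper name is hypothetical.

```python
# Back-of-the-envelope weight memory: parameters x bits per parameter / 8.
# Assumptions: nominal parameter counts, weights only (no KV cache, activations,
# quantization scales, or layers kept at higher precision).

def weight_memory_gb(num_params, bits_per_param):
    """Return the memory needed to store the weights, in gigabytes (1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

models = [("Gemma 3 27B", 27e9), ("Gemma 3 12B", 12e9), ("Gemma 3 1B", 1e9)]
for name, params in models:
    bf16 = weight_memory_gb(params, 16)
    int4 = weight_memory_gb(params, 4)
    print(f"{name}: BF16 ~{bf16:.1f} GB, int4 ~{int4:.1f} GB")

# Gemma 3 27B: BF16 ~54.0 GB, int4 ~13.5 GB
# Gemma 3 12B: BF16 ~24.0 GB, int4 ~6.0 GB
# Gemma 3 1B: BF16 ~2.0 GB, int4 ~0.5 GB
```

The 27B and 12B BF16 figures and the 1B int4 figure line up with the reported numbers. The reported int4 figures for 27B and 12B (14.1 GB and 6.6 GB) are somewhat higher than this naive estimate, plausibly because real checkpoints carry extra data such as quantization scales, though the exact breakdown is not given.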

This means users can run powerful AI models on desktop GPUs such as the NVIDIA RTX 3090 or on laptop GPUs such as the NVIDIA RTX 4060 Laptop GPU, and even phones can run the smaller models.

To limit the accuracy loss that quantization would otherwise cause, Google uses quantization-aware training (QAT), which simulates low-precision operations during training so that the model stays accurate after compression. With roughly 5,000 training steps of QAT, the Gemma 3 QAT models reduce the perplexity degradation caused by quantization by 54%.
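QAT is commonly implemented with "fake quantization": the forward pass rounds weights to the low-precision grid, while gradients update a full-precision copy of the weights through a straight-through estimator (STE). The toy below illustrates that general technique only, not Google's Gemma 3 QAT recipe; the 3-weight linear model, the fixed int4 step of 0.25, the one-hot training inputs, and all names are hypothetical.

```python
# Toy quantization-aware training (QAT) sketch.
# Assumptions (hypothetical, for illustration only): a 3-weight linear model,
# a fixed int4 grid with step 0.25, one-hot training inputs, and plain SGD.

STEP = 0.25  # hypothetical fixed quantization step

def fake_quant(w, step=STEP):
    """Simulate int4: snap w to the grid [-8, 7] * step, keep it as a float."""
    q = max(-8, min(7, round(w / step)))
    return q * step

def predict(weights, x):
    # Forward pass sees only the quantized weights, as int4 inference would.
    return sum(fake_quant(w) * xi for w, xi in zip(weights, x))

def train_qat(data, n_features, lr=0.05, epochs=200):
    weights = [0.0] * n_features  # full-precision "shadow" weights
    for _ in range(epochs):
        for x, y in data:
            err = predict(weights, x) - y
            # Straight-through estimator: treat d fake_quant(w) / dw as 1,
            # so the squared-error gradient updates the float weights directly.
            for i, xi in enumerate(x):
                weights[i] -= lr * 2 * err * xi
    return weights

target = [1.0, -0.5, 0.75]
data = [((1, 0, 0), 1.0), ((0, 1, 0), -0.5), ((0, 0, 1), 0.75)]
trained = train_qat(data, n_features=3)
print([fake_quant(w) for w in trained])  # → [1.0, -0.5, 0.75]
```

Because the loss is always computed on the quantized weights, training keeps adjusting the float copy until the quantized model itself fits the data, rather than optimizing a full-precision model and rounding it afterwards.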

Major platforms such as Ollama, LM Studio, and llama.cpp already support the models, and the official int4 and Q4_0 versions are available on Hugging Face and Kaggle, making it easy to run them on Apple Silicon or on CPUs. In addition, the Gemmaverse community offers further quantization options to suit different needs.