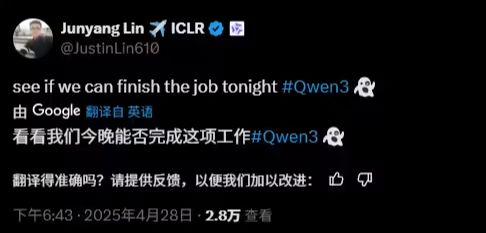

April 28 news: In a post on X, Junyang Lin, open-source lead for Alibaba's Tongyi Qianwen (Qwen), hinted that the Qwen3 model is expected to be released today.

Notably, the Qwen3 model collection was briefly listed on ModelScope, Alibaba's open-source AI model community, and has since been taken offline. The listing included Qwen3-4B-Base, Qwen3-1.7B, Qwen3-0.6B, and Qwen3-30B-A3B-Base, all under the Apache License 2.0. Although no official announcement has been made yet, the naming rules and the design of the previous generation allow some speculation about the models' technical path and positioning.

In this release, Qwen3-4B, Qwen3-1.7B, and Qwen3-0.6B are named directly by parameter scale, corresponding to 4 billion, 1.7 billion, and 600 million parameters, respectively. This suffix-free naming may indicate that they are dense models that do not use a Mixture-of-Experts (MoE) design and are presumably aimed at lightweight application scenarios. Qwen3-30B-A3B-Base, on the other hand, is an MoE base model that dynamically activates about 3 billion (3B) of its 30 billion (30B) total parameters when processing a task.
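The naming pattern described above can be sketched in a few lines of Python. This is only an illustration of the speculated convention (total size, an optional `A<n>B` suffix for activated parameters, an optional `-Base` suffix); the `parse_qwen3_name` helper and `ModelName` type are hypothetical and not part of any official Qwen tooling.

```python
import re
from typing import NamedTuple, Optional


class ModelName(NamedTuple):
    """Hypothetical container for the fields read off a model name."""
    total_b: float             # total parameters, in billions
    active_b: Optional[float]  # activated parameters (MoE only), in billions
    is_base: bool              # True when the name ends in "-Base"

    @property
    def is_moe(self) -> bool:
        # Assumption from the article: an "A<n>B" suffix marks an MoE model.
        return self.active_b is not None


# Matches names such as "Qwen3-0.6B" or "Qwen3-30B-A3B-Base".
_PATTERN = re.compile(
    r"^Qwen3-(?P<total>\d+(?:\.\d+)?)B"
    r"(?:-A(?P<active>\d+(?:\.\d+)?)B)?"
    r"(?P<base>-Base)?$"
)


def parse_qwen3_name(name: str) -> ModelName:
    """Split a Qwen3-style model name into its size fields."""
    match = _PATTERN.match(name)
    if match is None:
        raise ValueError(f"unrecognized model name: {name!r}")
    active = match.group("active")
    return ModelName(
        total_b=float(match.group("total")),
        active_b=float(active) if active is not None else None,
        is_base=match.group("base") is not None,
    )


if __name__ == "__main__":
    for name in ["Qwen3-4B-Base", "Qwen3-1.7B", "Qwen3-0.6B", "Qwen3-30B-A3B-Base"]:
        info = parse_qwen3_name(name)
        kind = "MoE" if info.is_moe else "dense"
        print(name, kind, info.total_b, info.active_b)
```

Under this reading, Qwen3-30B-A3B-Base would activate roughly one tenth of its parameters (3B out of 30B) for a given task, while the suffix-free names parse as dense models.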

According to 1AI, since August 2023 Alibaba Cloud has successively open-sourced four generations of models: Qwen, Qwen1.5, Qwen2, and Qwen2.5. Together they cover the full range of sizes (0.5B, 1.5B, 3B, 7B, 14B, 32B, 72B, and 110B) and the full range of model types, including large language, multimodal, math, and code models.