Sonic is an audio-driven portrait animation framework from Tencent and Zhejiang University that generates realistic facial expressions and movements based on global audio perception. It is built on two components that enhance local audio perception: context-enhanced audio learning, which extracts long-term temporal audio knowledge within an audio clip, and motion-decoupled controllers, which control head movement and expression movement independently. Sonic then uses a time-aware positional offset fusion mechanism to extend this local audio perception to the global level, addressing the jitter and abrupt changes that tend to appear in long video generation. Sonic is reported to outperform existing state-of-the-art methods in video quality, lip-synchronization accuracy, motion diversity, and temporal coherence. It improves the naturalness and coherence of portrait animations and supports fine-grained user adjustment of the result.
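To give a rough intuition for how shifting window positions can turn local perception into global perception, here is a toy sketch in Python. It is not Sonic's implementation: `local_step`, `run`, and the `window`, `steps`, and `shift` parameters are hypothetical names, and the averaging step is only a stand-in for a model that sees one window at a time. The sketch assumes that a long sequence is processed in fixed-length windows and that the window start is offset by a different amount at each iteration.

```python
import numpy as np

def local_step(chunk):
    """Toy stand-in for one local processing step: pull values halfway toward the chunk mean."""
    return 0.5 * chunk + 0.5 * chunk.mean()

def run(seq, window, steps, shift):
    """Process a long sequence window by window, moving the window grid by `shift` each step."""
    x = np.asarray(seq, dtype=float).copy()
    n = len(x)
    for t in range(steps):
        # Offset of the window grid at this step (hypothetical schedule).
        offset = (t * shift) % window
        # Window boundaries: the sequence ends plus every grid point inside the sequence.
        bounds = sorted(set([0, n] + list(range(offset, n, window))))
        for a, b in zip(bounds[:-1], bounds[1:]):
            x[a:b] = local_step(x[a:b])
    return x

# Two segments with very different values, as if two windows disagreed.
seq = [0.0] * 8 + [1.0] * 8

fixed = run(seq, window=8, steps=10, shift=0)    # windows never move
shifted = run(seq, window=8, steps=10, shift=3)  # windows move every step

print("jump at boundary, fixed windows:  ", abs(fixed[8] - fixed[7]))
print("jump at boundary, shifted windows:", abs(shifted[8] - shifted[7]))
```

With fixed windows, the two halves never exchange information, so the discontinuity at the window boundary stays in place. With a shifting grid, each step straddles a different boundary, so context gradually spreads across the whole sequence and the jump shrinks.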

Sonic Features

- Realistic lip synchronization: Precisely aligns lip movements with the audio, so that mouth shapes stay consistent with what is being spoken.

- Rich expressions and head movements: Generates diverse, natural facial expressions and head movements for more vivid and expressive animations.

- Stable long-video generation: Maintains stable output when processing long videos, avoiding jitter and sudden changes and keeping the result coherent overall.

- User adjustability: Lets users control head movement, expression intensity, and lip synchronization through parameter adjustments, providing a high degree of customizability.

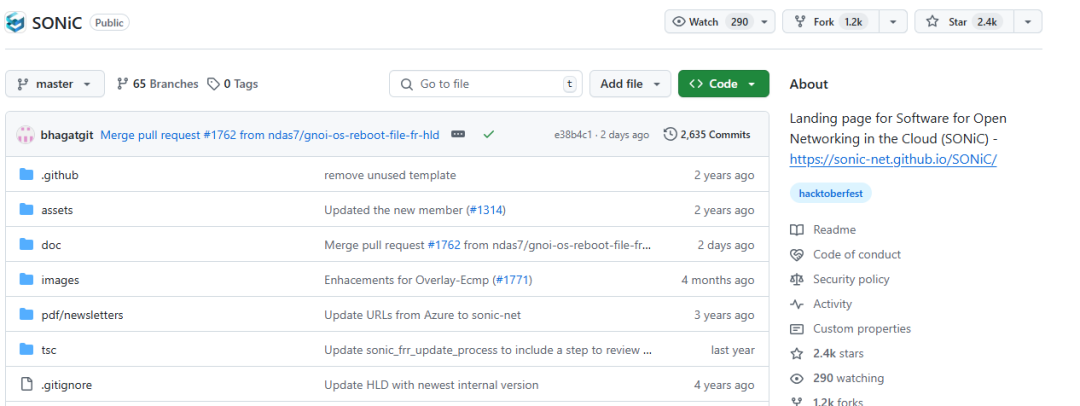

Official project link: https://github.com/jixiaozhong/Sonic