DeepSeek has announced the completion of a minor version upgrade to its R1 model. The current version is DeepSeek-R1-0528. After entering the chat interface through the official website, app, or mini program, users can turn on the "Deep Thinking" function to try the latest version. The API has also been updated in sync, and the invocation method remains unchanged.

On the evening of May 29, DeepSeek officially announced the details of the DeepSeek-R1-0528 update. 1AI has compiled them below:

Enhanced deep thinking capability

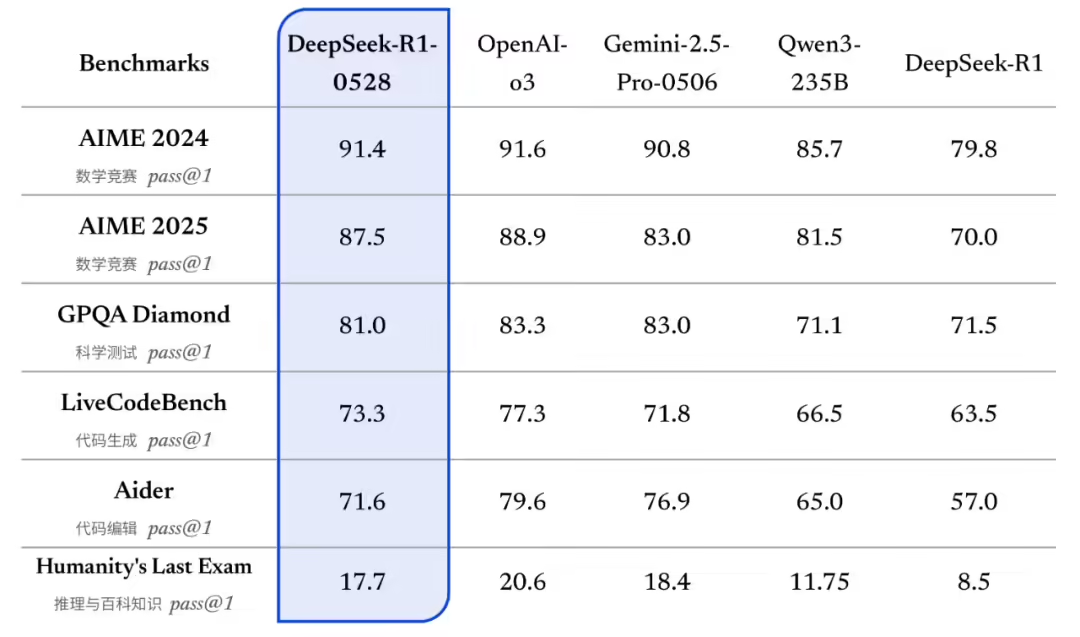

DeepSeek-R1-0528 still uses the DeepSeek V3 Base model released in December 2024 as its base, but invests more computing power in post-training, which significantly improves the model's depth of thinking and reasoning ability. The updated R1 model has achieved excellent results in several benchmarks, including math, programming, and general logic, and its overall performance is approaching that of other top international models such as o3 and Gemini-2.5-Pro.

Compared with the old R1, the new version performs significantly better on complex reasoning tasks. For example, in the AIME 2025 test, the new model's accuracy rose from 70% in the old version to 87.5%.

This improvement is due to the increased depth of thought in the model's reasoning: on the AIME 2025 test set, the old model used an average of 12K tokens per question, whereas the new model uses an average of 23K tokens per question, suggesting that more detailed and deeper thought went into the solution.

Meanwhile, DeepSeek distilled the chain of thought of DeepSeek-R1-0528 and used it to train Qwen3-8B Base, yielding DeepSeek-R1-0528-Qwen3-8B. On the AIME 2024 math test, this 8B model is second only to DeepSeek-R1-0528, surpassing Qwen3-8B (+10.0%) and performing comparably to Qwen3-235B. DeepSeek believes that the chain of thought of DeepSeek-R1-0528 will be of great significance both for academic research on reasoning models and for industrial development of small models.

Other capability updates

- Reduced hallucinations: The new version of DeepSeek R1 has been optimized for the "hallucination" problem. Compared with the old version, the updated model reduces the hallucination rate by about 45-50% in scenarios such as rewriting, summarizing, and reading comprehension, giving more accurate and reliable results.

- Creative writing: Building on the old version of R1, the updated model has been further optimized for essays, novels, prose, and other genres. It can produce longer works with more complete structure and content, in a writing style closer to human preferences.

- Tool calls: DeepSeek-R1-0528 supports tool calls (tool calls within the thinking process are not supported). The model's current Tau-Bench scores are 53.5% on airline and 63.9% on retail, comparable to OpenAI o1-high but still behind o3-High and Claude 4 Sonnet.

In addition, DeepSeek-R1-0528's capabilities in areas such as front-end code generation and role-playing have been updated and improved.

API Updates

The API has been updated in sync, and the interface and invocation method remain unchanged. The new R1 API still supports viewing the model's thought process, and support for Function Calling and JsonOutput has been added.
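As a rough illustration of these two additions, the sketch below builds (but does not send) two chat-completion request bodies. It assumes the API follows the OpenAI-compatible request format, that the R1 model is addressed as `deepseek-reasoner`, and that JSON output is requested with `response_format={"type": "json_object"}`. None of these details are stated in the announcement, and `get_weather` is a hypothetical tool, so check the API guide before relying on any field name.

```python
import json

MODEL = "deepseek-reasoner"  # assumed model name for R1, not given in the announcement

# Hypothetical tool, described in the OpenAI-style "tools" schema (an assumption).
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_tool_request(question):
    """Build a request body that lets the model call the hypothetical tool."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": question}],
        "tools": [WEATHER_TOOL],
    }


def build_json_request(question):
    """Build a request body asking for a JSON object as the final answer."""
    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": "Reply with a JSON object."},
            {"role": "user", "content": question},
        ],
        "response_format": {"type": "json_object"},  # assumed field name and value
    }


if __name__ == "__main__":
    # Print the body instead of sending it, so the sketch runs without an API key.
    print(json.dumps(build_tool_request("What is the weather in Hangzhou?"), indent=2))
```

Either dictionary could then be posted to the chat-completions endpoint with any HTTP client or OpenAI-compatible SDK.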

The meaning of the max_tokens parameter in the new R1 API has changed: max_tokens now limits the total length of a single model output (including the thought process). The default is 32K and the maximum is 64K. API users should adjust max_tokens promptly to prevent output from being truncated prematurely.
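To make the new semantics concrete, here is a minimal sketch of choosing a max_tokens value. It assumes that "32K" and "64K" mean 32 × 1024 and 64 × 1024 tokens, and `pick_max_tokens` is a hypothetical helper, not part of any DeepSeek SDK. Because the limit now covers the thought process as well as the final answer, the budget is the sum of both.

```python
DEFAULT_MAX_TOKENS = 32 * 1024  # assumed exact value of the "32K" default
MAX_MAX_TOKENS = 64 * 1024      # assumed exact value of the "64K" ceiling


def pick_max_tokens(reasoning_budget, answer_budget):
    """Return a max_tokens value covering thinking plus the final answer.

    The total is clamped to the ceiling; a non-positive total is rejected.
    """
    total = reasoning_budget + answer_budget
    if total < 1:
        raise ValueError("token budget must be positive")
    return min(total, MAX_MAX_TOKENS)


# Roughly the 23K average thinking length reported for AIME 2025, plus a short answer.
print(pick_max_tokens(23_000, 2_000))   # 25000
# A request above the ceiling is clamped to it.
print(pick_max_tokens(60_000, 10_000))  # 65536
```

A budget sized only for the final answer, as was sufficient under the old meaning, would now cut the model off while it is still thinking.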

For details on using the R1 model, see the API guide:

https://api-docs.deepseek.com/zh-cn/guides/reasoning_model

After this R1 update, the model context length on the official website, mini program, app, and API remains 64K. Users who need a longer context can call the open-source R1-0528 model, which has a 128K context length, through third-party platforms.

Open-source model

DeepSeek-R1-0528 uses the same base model as the previous DeepSeek-R1; only the post-training method has been improved. For private deployments, only the checkpoint and tokenizer_config.json need to be updated (the latter for changes related to tool calls). The model has 685B parameters (of which 14B belong to the MTP layer), and the open-source version has a context length of 128K (the web, app, and API versions provide 64K).

The DeepSeek-R1-0528 model weights can be downloaded from:

ModelScope:

https://modelscope.cn/models/deepseek-ai/DeepSeek-R1-0528

Hugging Face:

https://huggingface.co/deepseek-ai/DeepSeek-R1-0528

As with earlier versions of DeepSeek-R1, this open-source repository (including the model weights) continues to use the MIT License and allows users to train other models with the model's outputs, for example through distillation.