July 7 news: Bilibili's open-source anime video generation model AniSora was updated to AniSora V3 Preview on July 2.

As part of the Index-AniSora project, V3 further improves generation quality, motion smoothness, and style variety, giving anime, manga, and VTuber content creators a more powerful tool.

AniSora supports one-click generation of video footage in a variety of anime styles, including fan-made drama clips, Chinese original animation, manga adaptations, VTuber content, anime PVs, spoof remix videos (guichu/MADs), and more.

AniSora V3 builds on the CogVideoX-5B- and Wan2.1-14B-based models that Bilibili previously open-sourced and adds a reinforcement learning from human feedback (RLHF) framework, which significantly improves the visual quality and motion consistency of the generated videos.

Core upgrades include:

- Spatiotemporal Mask Module Optimization: V3 strengthens spatiotemporal control to support more complex animation tasks, such as fine-grained control of character expressions, dynamic camera movement, and locally image-guided generation. For example, the prompt "Five girls dance as the camera zooms in, raising their left hands above their heads and then lowering them to their knees" produces a smooth dance animation in which the camera and character movements stay naturally in sync.

- Dataset Expansion: V3 is still trained on more than 10 million high-quality anime video clips (extracted from 1 million original videos), and a new data-cleaning pipeline has been added to keep the generated content stylistically consistent and rich in detail.

- Hardware Optimization: V3 adds native support for Huawei's Ascend 910B NPU and was trained entirely on domestic Chinese chips. Inference is about 20% faster, and a 4-second HD video takes just 2-3 minutes to generate.

- Multitask Learning: V3 has stronger multitask capabilities, covering single-frame image-to-video generation, keyframe interpolation, and lip sync, which makes it particularly suitable for manga adaptations and VTuber content creation.
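The article does not say how the spatiotemporal mask is encoded. As a minimal sketch under that uncertainty, assume a per-frame, per-pixel binary mask that marks which positions are taken from a guide image (1) and which the model must generate (0). Under this assumption, single-frame image-to-video, keyframe interpolation, and local image guidance differ only in which frames and regions are marked. `build_guidance_mask` and `count_guided` are hypothetical names for illustration, not part of the Index-AniSora code.

```python
from typing import List, Optional, Sequence, Tuple

Mask = List[List[List[int]]]  # indexed as mask[frame][row][col]


# Hypothetical helper for illustration only; not the Index-AniSora API.
def build_guidance_mask(
    num_frames: int,
    height: int,
    width: int,
    guided_frames: Sequence[int],
    region: Optional[Tuple[int, int, int, int]] = None,
) -> Mask:
    """Return a binary mask: 1 = conditioned on a guide image, 0 = to be generated.

    region is (top, left, bottom, right) with exclusive bottom/right bounds;
    None means the whole frame is guided.
    """
    for t in guided_frames:
        if not 0 <= t < num_frames:
            raise ValueError(f"guided frame {t} is outside 0..{num_frames - 1}")
    if region is None:
        region = (0, 0, height, width)
    top, left, bottom, right = region
    guided = set(guided_frames)

    mask: Mask = []
    for t in range(num_frames):
        frame = []
        for y in range(height):
            row = []
            for x in range(width):
                inside = top <= y < bottom and left <= x < right
                row.append(1 if t in guided and inside else 0)
            frame.append(row)
        mask.append(frame)
    return mask


def count_guided(mask: Mask) -> int:
    """Count the positions supplied by guide images."""
    return sum(sum(sum(row) for row in frame) for frame in mask)


# Image-to-video: only the first frame is given.
i2v = build_guidance_mask(8, 4, 4, guided_frames=[0])
# Keyframe interpolation: the first and last frames are given.
interp = build_guidance_mask(8, 4, 4, guided_frames=[0, 7])
# Local image guidance: only the top-left 2x2 patch of frame 0 is given.
local = build_guidance_mask(8, 4, 4, guided_frames=[0], region=(0, 0, 2, 2))

print(count_guided(i2v), count_guided(interp), count_guided(local))  # 16 32 4
```

Keeping the task definition in a single mask means one model can serve several tasks by changing its input rather than its architecture, which is one plausible reading of how a mask module supports multitask generation.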

In the latest benchmarks, AniSora V3 achieves state-of-the-art (SOTA) character consistency and motion smoothness in both VBench and double-blind subjective tests, performing especially well on complex motion (e.g., exaggerated anime movements that defy the laws of physics).

V3 also introduces the first RLHF framework for anime video generation: the model is fine-tuned with tools such as AnimeReward and GAPO so that its output better matches human aesthetic preferences and the demands of anime styles. The community has already begun developing custom plug-ins based on V3, for example to strengthen generation in specific anime styles such as Ghibli's.

AniSora V3 supports a variety of anime styles, including Japanese anime, Chinese original animation, manga adaptations, VTuber content, and spoof remix videos (guichu), covering 90% of anime video application scenarios. Specific applications include:

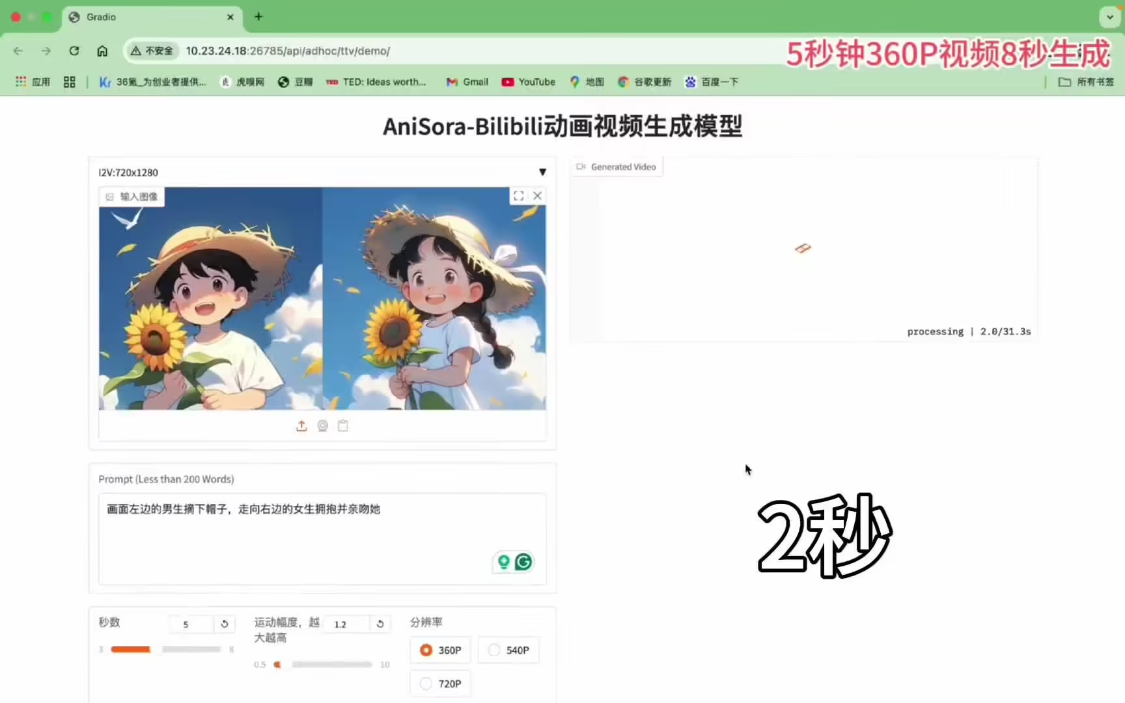

- Single image to video: Users can upload a high-quality anime image together with a text prompt (e.g., "The character waves from a moving car, hair swaying in the wind") to generate a dynamic video that keeps the character's details and style consistent.

- Manga Adaptation: Generate animation from manga frames with lip sync and motion, suitable for quick trailers or short animations.

- VTuber and Games: Support for real-time character animation generation enables indie creators and game developers to quickly test character movement.

- High-resolution output: Generated videos support up to 1080p, ensuring a professional presentation on social media and streaming platforms.

AIbase tests show that when generating complex scenes (e.g., multi-character interactions, dynamic backgrounds), V3 reduces artifacts by about 15% compared with V2 and cuts average generation time to 2.5 minutes (IT House note: for a 4-second video).

Compared with general-purpose video generation models such as OpenAI's Sora or Kuaishou's Kling, AniSora V3 specializes in anime. Compared with ByteDance's EX-4D, AniSora V3 focuses on 2D/2.5D anime styles rather than 4D multi-view generation.

1AI has attached the open-source address:

https://github.com/bilibili/Index-anisora/tree/main