According to the OpenBMB open-source community, ModelBest (面壁智能) officially open-sourced MiniCPM-V 4.0 yesterday. It is the newest generation of the company's compact "small steel cannon" multimodal model.

With 4B parameters, MiniCPM-V 4.0 reportedly achieves state-of-the-art results among models of comparable size on OpenCompass, OCRBench, MathVista, and other leaderboards, and runs stably and smoothly on mobile phones.

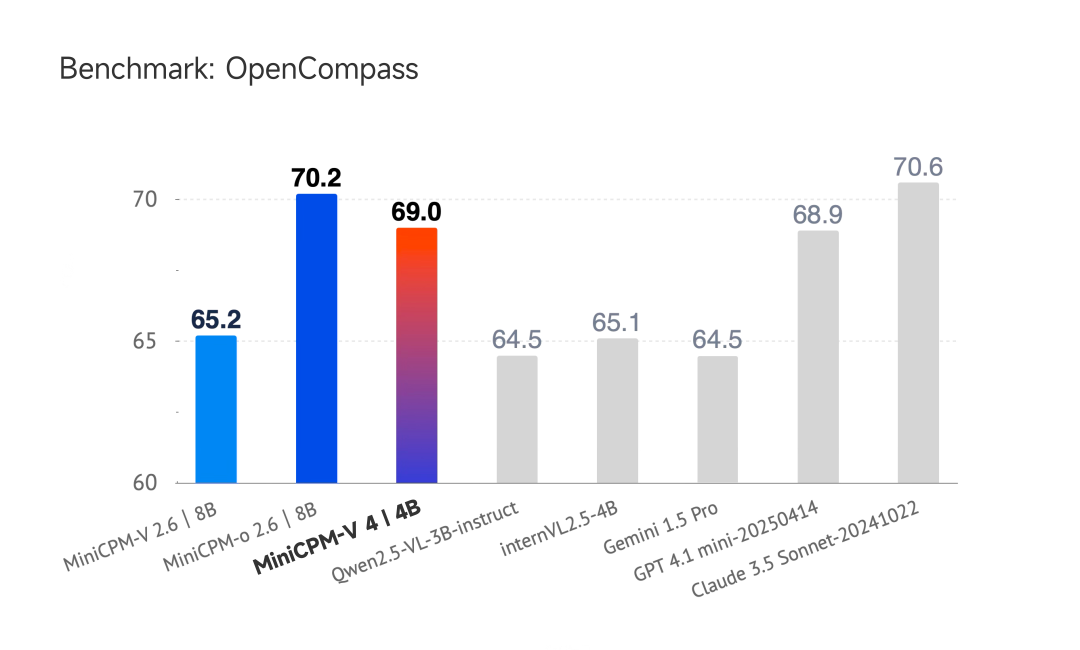

Specifically, MiniCPM-V 4.0 has the best overall performance in its size class on OpenCompass, OCRBench, MathVista, MMVet, MMBench V1.1, MMStar, and other benchmarks. In the OpenCompass evaluation, its overall performance exceeds that of Qwen2.5-VL 3B and InternVL2.5 4B, and even rivals that of GPT-4.1-mini and Claude 3.5 Sonnet.

MiniCPM-V 4.0 uses a distinctive model architecture that gives it the shortest first-response time and lower memory usage among models of the same size. In tests on Apple M4 Metal, the model needs only 3.33 GB of GPU memory in normal operation, less than Qwen2.5-VL 3B and Gemma 3-4B.

In addition, the R&D team, together with the Shanghai Qi Zhi Institute, open-sourced the inference and deployment toolkit MiniCPM-V CookBook for the first time. It provides out-of-the-box lightweight deployment for a variety of scenarios, along with detailed documentation that lowers the barrier to deployment and speeds up real-world adoption.

The iOS app that supports local deployment of MiniCPM-V 4.0 is now open source as well, and developers can download it from the CookBook.

GitHub: https://github.com/OpenBMB/MiniCPM-o

Hugging Face: https://huggingface.co/openbmb/MiniCPM-V-4

ModelScope: https://modelscope.cn/models/OpenBMB/MiniCPM-V-4

CookBook: https://github.com/OpenSQZ/MiniCPM-V-CookBook