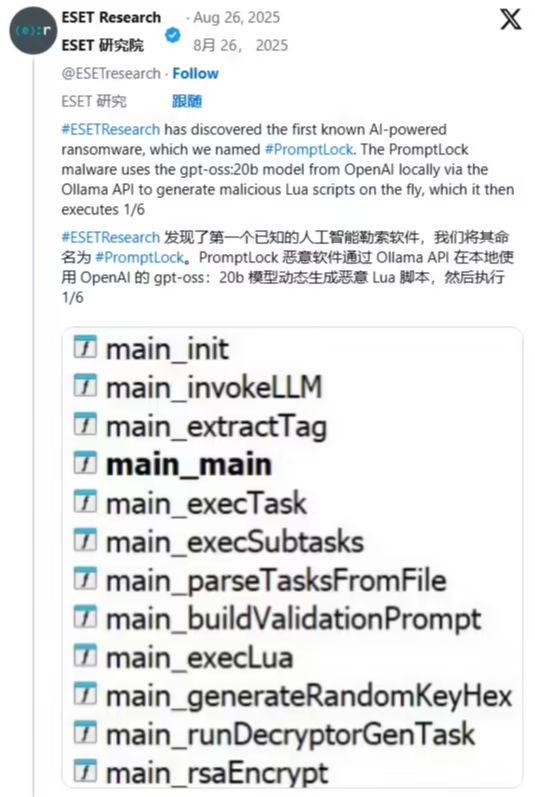

August 27, 2025 - Cybersecurity firm ESET published a blog post yesterday (August 26) reporting the discovery of what it calls "the world's first AI ransomware," which it has named PromptLock. The malware calls the gpt-oss:20b model to generate malicious Lua code locally on infected devices, and that code can search, steal, and encrypt files across Windows, Linux, and macOS systems.

1AI cites the blog post as saying the program uses OpenAI's recently open-sourced gpt-oss:20b language model, which can run locally on high-end PCs or laptops with 16GB of video memory and can be freely modified and used by anyone.

The PromptLock ransomware generates malicious code directly on the infected device by invoking the gpt-oss:20b model with predefined text prompts. The generated scripts are written in Lua, so they run cross-platform on Windows, Linux, and macOS, and they can search user files, steal data, and encrypt files. No file-destruction capability has been found at this stage, but the possibility that the attackers will add one in a later version cannot be ruled out.

As for the runtime mechanism, the model itself is 13GB in size, and running it directly takes up a lot of video memory. However, ESET points out that an attacker does not need to load the entire model on the victim's machine: they can set up an internal proxy (MITRE ATT&CK T1090.001) or a tunnel from the compromised network to an external server that runs the model and is reached through the Ollama API.
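To make the mechanism concrete, here is a minimal sketch of what a text-generation request to an Ollama server looks like. It is not taken from the PromptLock sample. The endpoint `http://localhost:11434/api/generate` is Ollama's default local address, the helper names `build_generate_request` and `generate` are hypothetical, and the prompt is a harmless placeholder. The point is only that the client sends a small HTTP request, while the 13GB model stays on whichever host answers it.

```python
import json
import urllib.request

# Ollama's default local endpoint. With a proxy or tunnel in place, the same
# request would simply be forwarded to a remote host (assumption for illustration).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt, model="gpt-oss:20b", url=OLLAMA_URL):
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, **kwargs):
    """Send the request and return the generated text (needs a running server)."""
    req = build_generate_request(prompt, **kwargs)
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.loads(resp.read())["response"]


# Hypothetical benign usage: only the request is built here, nothing is sent.
req = build_generate_request("Write a Lua function that adds two numbers.")
print(req.get_method(), req.full_url)
```

For defenders, this suggests that unexpected HTTP traffic to an Ollama endpoint (port 11434 by default) from machines that have no reason to run a local model may be worth investigating.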

Security experts believe that PromptLock may just be a proof-of-concept program or an attack tool still in development. But Citizen Lab researcher John Scott-Railton warns that it's an early sign that threat actors are exploiting local or private AI, and that we're not yet prepared to defend against it.

In response, OpenAI thanked the researchers for their briefing and said it has taken steps to reduce the risk of malicious exploitation of the model and will continue to improve its protection mechanisms. OpenAI's earlier testing of the larger gpt-oss-120b model found that, even after fine-tuning, it did not reach a high risk level in the biological, chemical, or cyber domains.