September 1 news: StepFun (Step Star) today open-sourced Step-Audio 2 mini, an end-to-end large speech model that achieves state-of-the-art (SOTA) scores on several international benchmarks. The model is now available on the StepFun Open Platform.

According to the official introduction, as reported by 1AI, the model unifies speech understanding, audio reasoning, and speech generation in a single model. It is also the first to support voice-native tool calling, which enables operations such as web search.

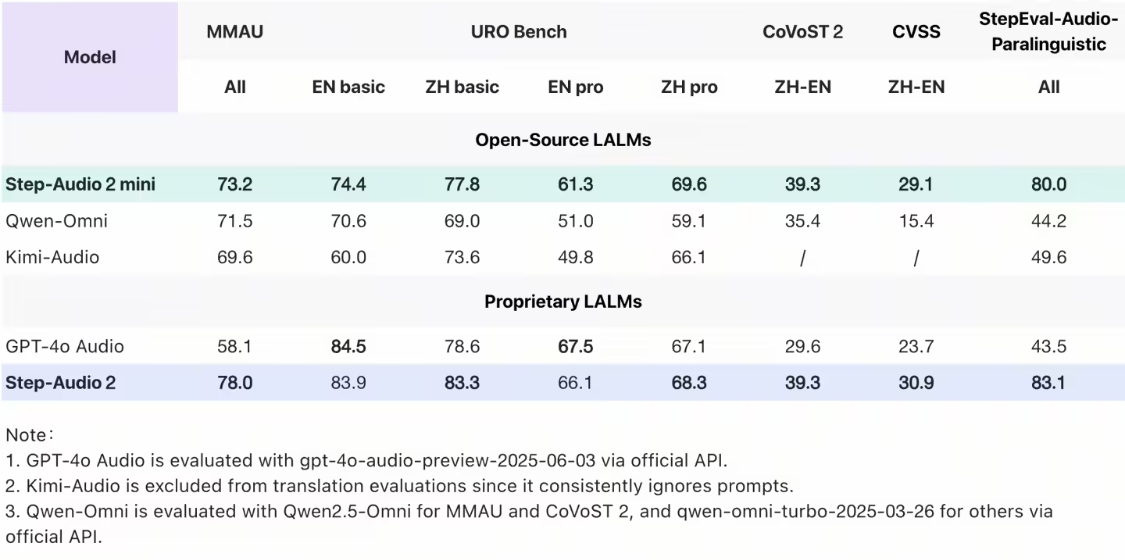

Step-Audio 2 mini achieves SOTA scores on several key benchmarks, excelling in audio understanding, speech recognition, translation, and dialogue. In overall performance it outperforms all open-source end-to-end speech models, including Qwen-Omni and Kimi-Audio, and it beats GPT-4o Audio on most tasks.

- On MMAU, a general multimodal audio understanding benchmark, Step-Audio 2 mini scored 73.2, the top result among open-source end-to-end speech models;

- On URO-Bench, which measures spoken conversation ability, Step-Audio 2 mini scored highest among open-source end-to-end speech models on both the basic and pro tracks, demonstrating strong conversational comprehension and expression;

- On Chinese-English speech translation, Step-Audio 2 mini has a clear advantage, scoring 39.3 on CoVoST 2 and 29.1 on CVSS, well ahead of GPT-4o Audio and other open-source speech models;

- In speech recognition, Step-Audio 2 mini ranks first across multiple languages and dialects. It achieves an average CER (character error rate) of 3.19 on open-source Chinese test sets and an average WER (word error rate) of 3.50 on open-source English test sets, a lead of more than 15% over other open-source models.
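CER and WER, the two recognition metrics cited above, are both edit-distance ratios: the minimum number of substitutions, deletions, and insertions needed to turn the model's transcript into the reference, divided by the reference length (characters for CER, whitespace-separated words for WER). Benchmark figures such as 3.19 and 3.50 are conventionally these ratios expressed as percentages. Below is a minimal sketch of that standard definition. It is not StepFun's evaluation code, the function names are illustrative, and it omits the text normalization (case folding, punctuation removal) that real benchmark pipelines typically apply before scoring.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (characters or words)."""
    # prev[j] holds the distance between the first i-1 items of ref and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: drop r from the reference
                curr[j - 1] + 1,     # insertion: extra h in the hypothesis
                prev[j - 1] + cost,  # substitution (or match when cost == 0)
            )
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word error rate: word-level edits divided by reference word count."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref, hyp) / len(ref)


def cer(reference, hypothesis):
    """Character error rate: character-level edits divided by reference length.

    Whitespace is ignored, which suits Chinese text written without spaces.
    """
    ref = [c for c in reference if not c.isspace()]
    hyp = [c for c in hypothesis if not c.isspace()]
    if not ref:
        raise ValueError("reference must contain at least one character")
    return edit_distance(ref, hyp) / len(ref)


if __name__ == "__main__":
    # One deleted word out of six reference words
    print(f"{wer('the cat sat on the mat', 'the cat sat on mat'):.4f}")  # 0.1667
    # One substituted character out of six reference characters
    print(f"{cer('今天天气很好', '今天天汽很好'):.4f}")  # 0.1667
```

Because the denominator is the reference length, a lower score is better, and a "15% lead" compares these averaged ratios across models rather than raw error counts.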

In the past, AI voice assistants were often criticized for lacking both IQ and EQ. First, they were "ignorant", lacking the knowledge base and reasoning ability of large text models. Second, they were "cold", unable to pick up on subtext, tone of voice, emotion, laughter, and other unspoken cues. Step-Audio 2 mini addresses these shortcomings of earlier speech models through an innovative architectural design.

- True end-to-end multimodal architecture: Step-Audio 2 mini moves beyond the traditional three-stage ASR + LLM + TTS pipeline and maps raw audio input directly to a spoken response. The architecture is simpler, latency is lower, and the model can understand paralinguistic information and non-speech signals.

- CoT reasoning combined with reinforcement learning: Step-Audio 2 mini is the first end-to-end speech model to jointly optimize chain-of-thought (CoT) reasoning and reinforcement learning. This enables fine-grained understanding of, reasoning about, and natural responses to paralinguistic and non-speech signals such as emotion, intonation, and music.

- Audio knowledge enhancement: the model supports external tools such as web search, which helps mitigate hallucination and extends the model to a wider range of scenarios.

GitHub: https://github.com/stepfun-ai/Step-Audio2

Hugging Face: https://huggingface.co/stepfun-ai/Step-Audio-2-mini

ModelScope: https://www.modelscope.cn/models/stepfun-ai/Step-Audio-2-mini