On September 4, Shanghai Artificial Intelligence Laboratory (Shanghai AI Lab) announced the open-source release of its general-purpose multimodal large model Shusheng Wanxiang 3.5 (InternVL3.5), with comprehensive upgrades to its reasoning, deployment efficiency, and generalization capabilities.

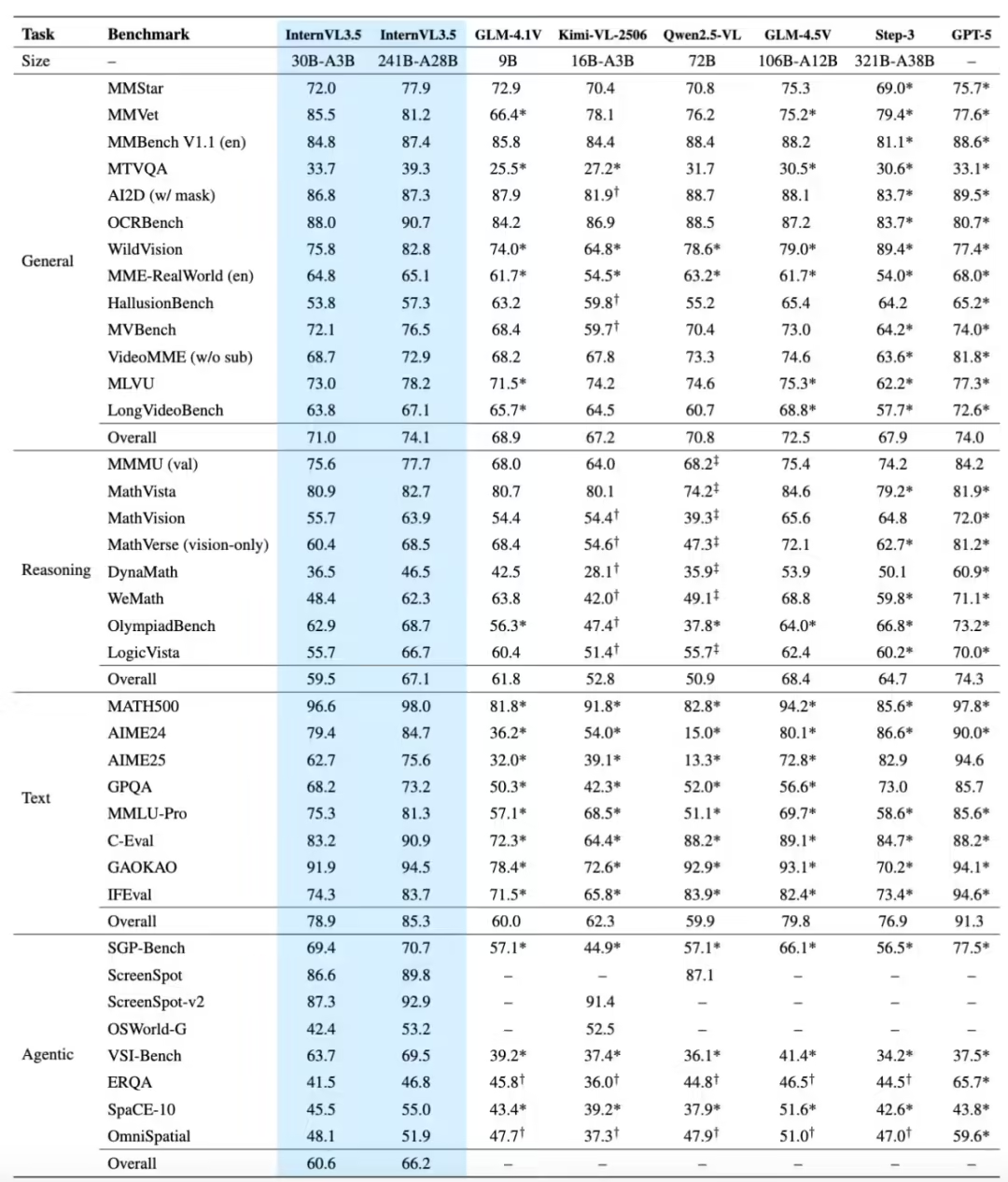

This open-source release of InternVL3.5 includes models in 9 sizes, with parameter counts ranging from 1 billion to 241 billion. The flagship model, InternVL3.5-241B-A28B, scored 77.7 on the multidisciplinary reasoning benchmark MMMU, the highest score among open-source models; its general multimodal perception capability exceeds that of GPT-5, and its text capability leads mainstream open-source multimodal large models.

Compared with InternVL3.0, InternVL3.5 delivers significant improvements on a range of specialized tasks, such as graphical user interface (GUI) agents, embodied spatial awareness, and vector image understanding and generation.

In this upgrade, the Shanghai AI Lab research team focused on strengthening InternVL3.5's agent and text reasoning capabilities for practical applications, achieving a leap from "understanding" to "action" in key scenarios such as GUI interaction, embodied spatial reasoning, and vector graphics processing, as validated across multiple evaluations.

- In GUI interaction, InternVL3.5 outperforms comparable models with a score of 92.9 on the ScreenSpot-v2 element grounding task, supports Windows / Ubuntu automation, and is significantly ahead of Claude-3.7-Sonnet on the WindowsAgentArena task.

- In embodied intelligence tests, InternVL3.5 demonstrates the ability to understand physical spatial relationships and plan navigation paths, outperforming Gemini-2.5-Pro with a score of 69.5 on VSI-Bench.

- In vector graphics understanding and generation, InternVL3.5 sets a new open-source record with a score of 70.7 on SGP-Bench, and outperforms GPT-4o and Claude-3.7-Sonnet on FID for the generation task.

Specifically, InternVL3.5 recognizes interface elements and performs mouse and keyboard operations on its own, automating tasks such as recovering deleted files, exporting PDFs, and adding attachments to emails across platforms including Windows, Mac, Ubuntu, and Android.

InternVL3.5 also has stronger grounding capability and can generalize to a large number of new, complex embodied scenes from only a few samples. Combined with a grasping algorithm, it supports generalizable long-horizon object grasping, helping robots complete object recognition, path planning, and physical interaction more efficiently.

As an important part of Shanghai AI Lab's Shusheng large model system, InternVL focuses on visual model technology. The entire InternVL series has been downloaded more than 23 million times across the web.

1AI has attached the open-source links:

- Link to technical report: https://huggingface.co/papers/2508.18265

- Open-source code / model usage: https://github.com/OpenGVLab/InternVL

- Model address: https://huggingface.co/OpenGVLab/InternVL3_5-241B-A28B

- Link to online demo: https://chat.intern-ai.org.cn/