Early this morning, Alibaba's Tongyi team announced Qwen3-Next, its next-generation foundation model architecture, and open-sourced the Qwen3-Next-80B-A3B models built on it.

According to the official announcement, the team considers context-length scaling and total-parameter scaling to be the two major trends in the future development of large models. To further improve training and inference efficiency with long contexts and large total parameter counts, it designed the new Qwen3-Next model architecture.

Compared with the MoE architecture of Qwen3, Qwen3-Next introduces four core improvements: a hybrid attention mechanism, a high-sparsity MoE structure, a set of optimizations that make training more stable, and a multi-token prediction mechanism that improves inference efficiency.
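To make "high-sparsity MoE" concrete: in a mixture-of-experts layer, a router scores every expert for each token, but only the top-k experts are actually run. The following is a minimal, generic top-k routing sketch in plain Python, not Qwen3-Next's implementation. The expert count, k, the toy scalar "experts", the random router scores, and the helper names (`route_top_k`, `moe_forward`) are all hypothetical and chosen only for illustration.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts and return (index, weight) pairs.

    Weights are a softmax over the selected logits only, which equals the
    full softmax renormalized to the chosen experts.
    """
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, experts, router_logits, k):
    """Weighted sum of the k selected experts' outputs.

    Unselected experts are never called, which is where the compute
    savings of a sparse MoE layer come from.
    """
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))


if __name__ == "__main__":
    NUM_EXPERTS = 64  # hypothetical expert count, for illustration only
    TOP_K = 4         # hypothetical number of experts activated per token
    random.seed(0)
    # Toy "experts": each just scales its scalar input by a different factor.
    experts = [lambda x, s=i + 1: s * x for i in range(NUM_EXPERTS)]
    # Stand-in for router scores; a real router computes these from the
    # token's hidden state.
    logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    chosen = route_top_k(logits, TOP_K)
    print("selected experts:", [i for i, _ in chosen])
    print(f"fraction of experts run: {TOP_K / NUM_EXPERTS:.2%}")
    print("output:", moe_forward(1.0, experts, logits, TOP_K))
```

Only `TOP_K` of the `NUM_EXPERTS` expert functions execute per call. Adding experts while keeping k fixed grows the total parameter count without growing per-token compute, which is the general trade-off a "high-sparsity" MoE design exploits.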

Using the Qwen3-Next architecture, the team trained the Qwen3-Next-80B-A3B-Base model. It has 80 billion total parameters but activates only 3 billion, and it performs on par with, or even slightly better than, the dense Qwen3-32B model.
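The degree of sparsity follows directly from the two parameter counts quoted for the base model. A quick check, assuming (as the "A3B" suffix in the model name suggests) that 3 billion is the activated-parameter count:

```python
# Figures as stated in the article.
TOTAL_PARAMS = 80e9   # total parameters in Qwen3-Next-80B-A3B-Base
ACTIVE_PARAMS = 3e9   # activated parameters (assumed to be the "A3B" in the name)

activation_ratio = ACTIVE_PARAMS / TOTAL_PARAMS
print(f"share of parameters activated: {activation_ratio:.2%}")  # prints "share of parameters activated: 3.75%"
print(f"total / active: {TOTAL_PARAMS / ACTIVE_PARAMS:.1f}x")    # prints "total / active: 26.7x"
```

In other words, fewer than 4% of the weights participate in producing each output.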

Qwen3-Next-80B-A3B-Base costs less than one tenth as much as Qwen3-32B to train (measured in GPU hours), and its inference throughput on contexts longer than 32K tokens is more than ten times that of Qwen3-32B, giving it excellent cost-effectiveness in both training and inference.

At the same time, Tongyi released two models built on Qwen3-Next-80B-A3B-Base: Qwen3-Next-80B-A3B-Instruct and Qwen3-Next-80B-A3B-Thinking.

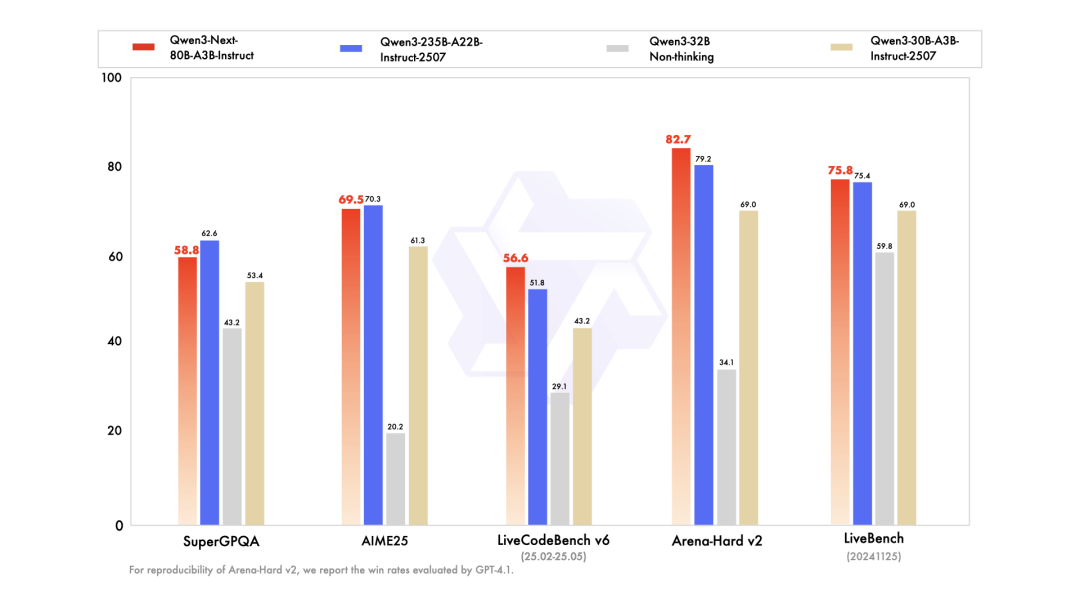

Qwen3-Next-80B-A3B-Instruct performs on par with the flagship Qwen3-235B-A22B-Instruct-2507, while showing a clear advantage on ultra-long contexts of up to 256K tokens.

Qwen3-Next-80B-A3B-Thinking performs strongly on complex reasoning tasks. It outperforms Qwen3-30B-A3B-Thinking-2507 and Qwen3-32B-Thinking, beats the closed-source Gemini-2.5-Flash-Thinking on a number of benchmarks, and comes close to Qwen3-235B-A22B-Thinking-2507 on some key metrics.

The new models are now available.

Free trial: https://chat.qwen.ai/

Qwen3-Next-c314f23bd0264a

HuggingFace: https://huggingface.co/collections/Qwen/qwen3-next-68c25fd6838e585db8eea9d

Alibaba Cloud Model Studio (Bailian): https://help.aliyun.com/zh/model-studio/models#2c9c4628c9yd