September 20: Alibaba's Tongyi Wanxiang has officially open-sourced Wan2.2-Animate, its all-new motion generation model. The model can drive photos of people, anime characters, and animals, and can be applied to short-video creation, dance template generation, animation production, and similar areas.

Wan2.2-Animate is a comprehensive upgrade of Animate Anyone, the model previously open-sourced by Tongyi Wanxiang. It improves significantly on metrics such as character consistency and generation quality, and it supports two modes, motion imitation and role-playing:

- Role imitation: given a character image and a reference video, the model transfers the movements and expressions of the character in the video onto the character in the image, giving the image character a dynamic performance.

- Role-playing: the model can also replace the character in the video with the character in the image while preserving the movements, expressions, and environment of the original video.

For this release, the Tongyi Wanxiang team built a large-scale video dataset of people covering speech, facial expressions, and body movements, and post-trained the model on top of the Tongyi Wanxiang image-to-video model.

Wan2.2-Animate normalizes character information, environment information, and motion into a unified representation format, so that a single model is compatible with both inference modes. For body movements and facial expressions it uses skeleton signals and implicit features, respectively, together with a motion retargeting module, to reproduce movements and expressions precisely. For the replacement mode, the team also designed a separate lighting-fusion LoRA so that the replaced character blends into the lighting of the scene.
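To make the idea of one input format serving both modes concrete, here is a minimal Python sketch. It is not the released code: the names `Mode`, `UnifiedInputs`, and `build_inputs` are hypothetical, and the strings stand in for real tensors. It only mirrors what the article states, namely that both modes share character and motion inputs, while the original video's environment and the lighting LoRA come into play only when replacing a character.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Mode(Enum):
    # Hypothetical labels for the two modes described in the article.
    IMITATION = "imitation"        # move the video's motion onto the image character
    ROLE_PLAYING = "role_playing"  # put the image character into the original video


@dataclass
class UnifiedInputs:
    # Placeholder strings stand in for real image and feature tensors.
    character: str               # reference character image
    body_motion: str             # skeleton signals taken from the driving video
    face_motion: str             # implicit facial features from the driving video
    environment: Optional[str]   # original video scene, kept only when replacing
    use_lighting_lora: bool      # the separate LoRA applies to replacement only


def build_inputs(mode: Mode, character_image: str, driving_video: str) -> UnifiedInputs:
    """Pack both modes into the same structure so one model can consume either."""
    replacing = mode is Mode.ROLE_PLAYING
    return UnifiedInputs(
        character=character_image,
        body_motion=f"skeleton({driving_video})",
        face_motion=f"implicit_face({driving_video})",
        environment=f"scene({driving_video})" if replacing else None,
        use_lighting_lora=replacing,
    )
```

Calling `build_inputs(Mode.IMITATION, "hero.png", "dance.mp4")` leaves `environment` empty, while `Mode.ROLE_PLAYING` fills it in and switches the LoRA flag on; the rest of the structure is identical in both cases.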

Test results show that Wan2.2-Animate surpasses open-source models such as StableAnimator and LivePortrait on key metrics including video generation quality, subject consistency, and perceptual loss, making it by these measures the strongest motion generation model currently available. In human subjective evaluations, Wan2.2-Animate even surpasses closed-source models such as Runway Act-two.

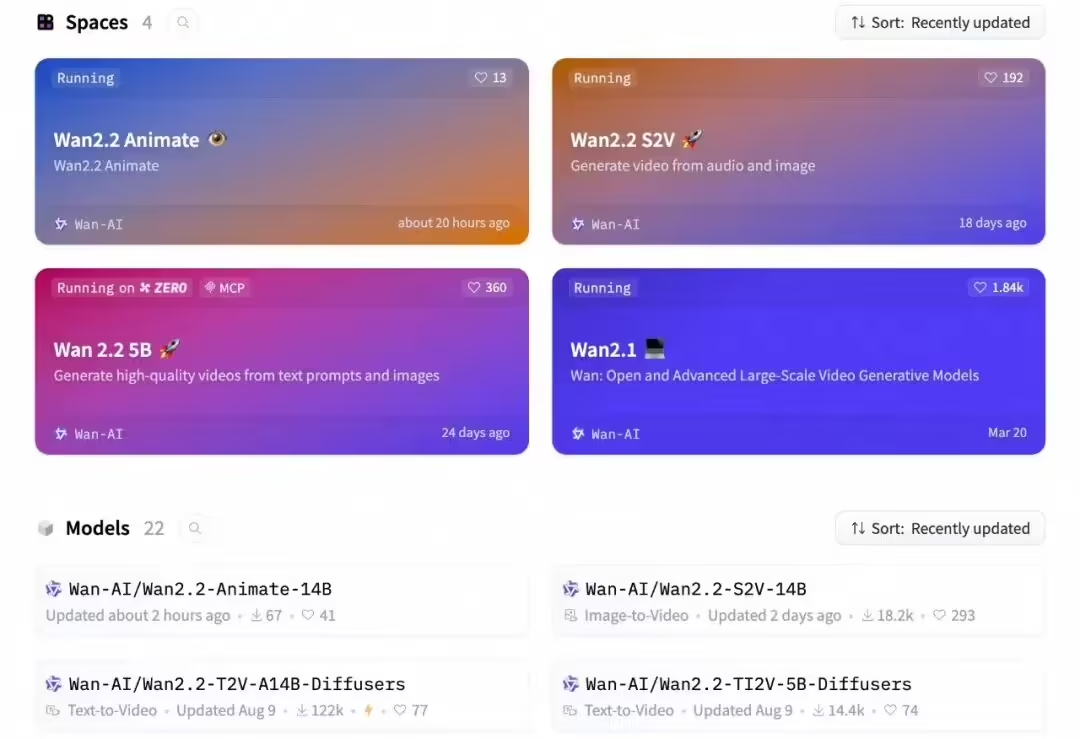

Starting today, users can download the model and code from GitHub, HuggingFace, and the ModelScope community, call the API on Alibaba Cloud's Bailian platform, or try the model directly on the Tongyi Wanxiang official website. 1AI lists the open-source addresses below:

- https://github.com/Wan-Video/Wan2.2

- https://modelscope.cn/models/Wan-AI/Wan2.2-Animate-14B

- https://huggingface.co/Wan-AI/Wan2.2-Animate-14B
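For readers who prefer to fetch the weights from a script, the following is a minimal sketch using the `huggingface_hub` package (assumed to be installed separately with `pip install huggingface_hub`). The repository id comes from the HuggingFace URL above; the `./models` folder and the helper names `default_local_dir` and `download_weights` are illustrative choices, not part of the official instructions.

```python
from pathlib import Path

# Repository id taken from the HuggingFace URL listed above.
REPO_ID = "Wan-AI/Wan2.2-Animate-14B"


def default_local_dir(repo_id: str, root: str = "./models") -> Path:
    """Map 'org/name' to a local folder such as models/Wan2.2-Animate-14B."""
    return Path(root) / repo_id.split("/")[-1]


def download_weights(repo_id: str = REPO_ID, root: str = "./models") -> Path:
    """Download every file in the repository; expect a large transfer."""
    # Imported lazily so the path helper works without the package installed.
    from huggingface_hub import snapshot_download

    target = default_local_dir(repo_id, root)
    snapshot_download(repo_id=repo_id, local_dir=str(target))
    return target
```

Calling `download_weights()` starts the download into `models/Wan2.2-Animate-14B`. Inference itself is run with the code in the GitHub repository, whose README is the place to check for the exact commands.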