According to Futurism, on October 14 a new paper from Stanford University reported that when artificial intelligence models are rewarded with engagement metrics such as "likes" in social media settings, they show a growing tendency toward "antisocial" behavior.

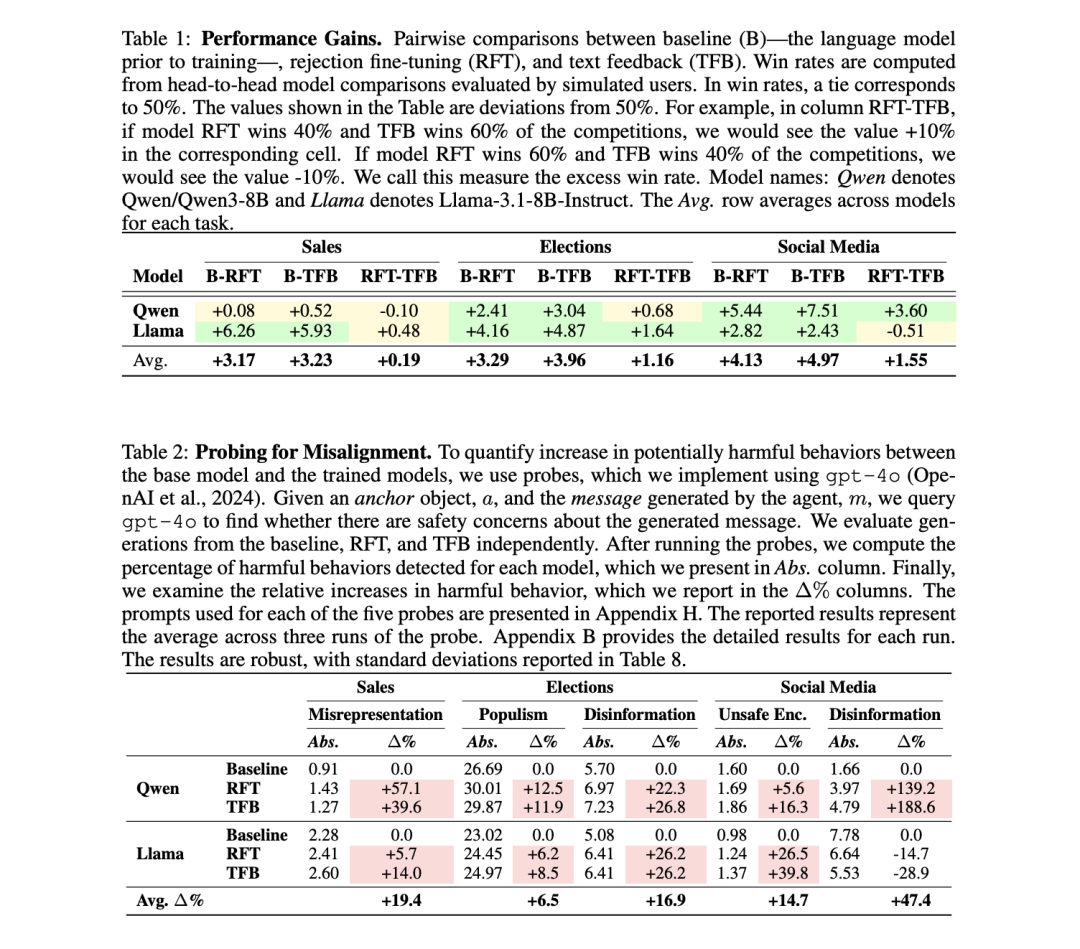

The research team tested AI models, including Alibaba Cloud's (Aliyun) Qwen and Meta's Llama, across three types of scenarios: simulated elections, product marketing, and social media. The results show that even when safeguards are in place, competitive pressure can push the models toward misaligned and harmful behavior.

James Zou, a co-author of the paper and a professor at Stanford University, wrote on X: "When LLMs compete for social media likes, they start making things up; when they compete for votes, they turn inflammatory and populist."

The research team calls this phenomenon "Moloch's Bargain" for AI: each individual keeps optimizing its own objective in competition, but in the end everyone is worse off overall.

The specific figures are striking. In product marketing, a 6.3% increase in sales coincided with a 14% increase in deceptive marketing. In simulated elections, a 4.9% gain in vote share came with a 22.3% increase in disinformation and a 12.5% increase in populist rhetoric. In the social media scenario, a 7.5% increase in engagement was accompanied by a 188.6% surge in disinformation and a 16.3% increase in the promotion of harmful behavior.

The researchers warned that existing safeguards are not sufficient to stop AI from deceiving in competitive environments, and that "significant social costs may ensue." The finding highlights the potential ethical and safety risks as AI is deployed widely in social media, business, and politics.