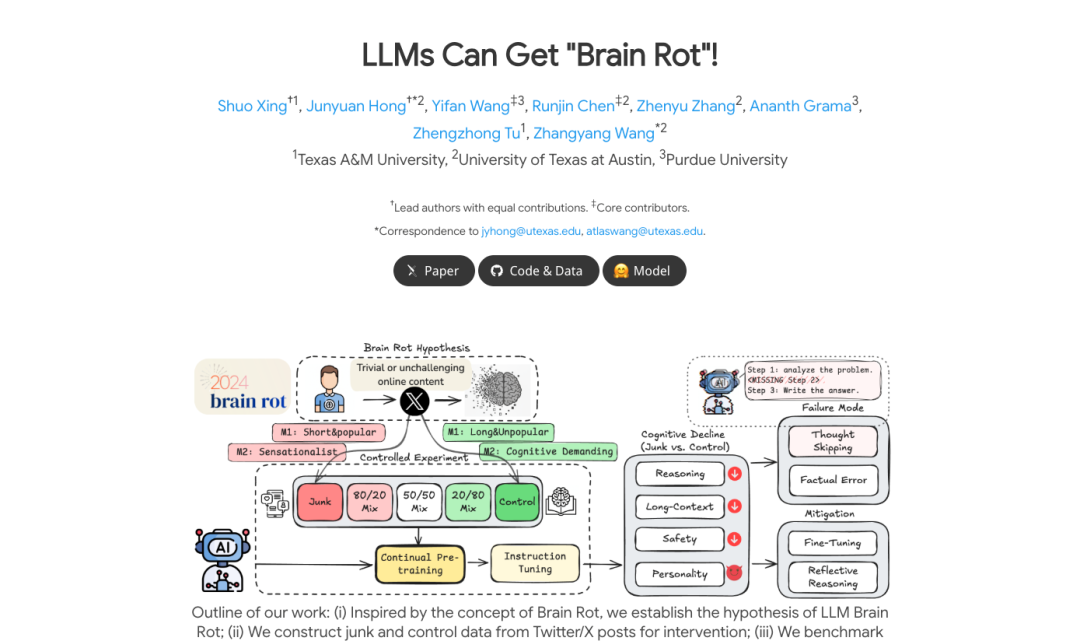

On October 22, news reports highlighted a recent paper titled "LLMs Can Get 'Brain Rot'!", published jointly by researchers from several universities. The research team reports that large language models (LLMs) continuously exposed to low-quality social media text show a form of "cognitive decline" similar to that seen in humans.

The researchers built "junk data" and "control data" sets from posts on the X platform and ran continual pre-training experiments on a range of models.

The results show that models exposed to a high proportion of junk data decline significantly in reasoning, long-context understanding, safety, and personality traits.

Error analysis identifies "thought skipping" as the main failure mode: the models increasingly truncate or skip the reasoning steps needed to solve a problem.

Comparing different types of social media posts, the researchers found that engagement (popularity) is the strongest indicator of toxicity: the more viral and widely shared a post is, the more it tends to degrade the model's cognition.

More worrying still, the decline persists. Even when high-quality data is added later for fine-tuning or further continual pre-training, the models recover only partially and still show signs of drift.

The research team likens this phenomenon to "brain rot" in humans after long-term exposure to fragmented, low-nutrition information, and stresses the key role of data quality in the continual training of large models. The team calls on the industry to include routine "cognitive health checks" in model maintenance to prevent long-term degradation of capabilities.

• Research project: https://llm-brain-rot.github.io/