November 3 news: On September 1, Meituan officially released the LongCat-Flash series of models and has since open-sourced LongCat-Flash-Chat and LongCat-Flash-Thinking, both of which have drawn attention from developers. Today the LongCat-Flash series officially adds a new family member, LongCat-Flash-Omni.

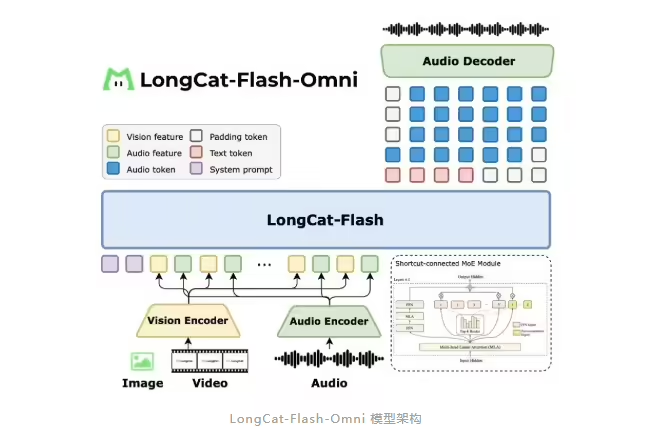

1AI learned from the official introduction that LongCat-Flash-Omni builds on the LongCat-Flash series' efficient architecture (Shortcut-Connected MoE, including zero-computation experts) and integrates efficient multimodal perception modules and a speech reconstruction module. Even at a total of 560 billion parameters (27 billion activated), it still achieves low-latency real-time audio-video interaction, giving developers a more efficient technical option for multimodal application scenarios.
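
To make the idea of zero-computation experts more concrete, here is a minimal sketch of mixture-of-experts routing for a single token. It assumes a zero-computation expert simply passes its input through unchanged, so tokens routed to it skip the feed-forward work. The names `ffn_expert`, `zero_computation_expert`, and `moe_forward`, the toy "scale the input" experts, and the router scores are all hypothetical; this is not LongCat's implementation and it leaves out the shortcut connection entirely.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over router scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def ffn_expert(scale: float) -> Callable[[Vector], Vector]:
    """Toy stand-in for a real feed-forward expert (hypothetical: scales the input)."""
    return lambda x: [scale * v for v in x]


def zero_computation_expert(x: Vector) -> Vector:
    """Assumed behaviour: return the input unchanged, costing no FFN compute."""
    return list(x)


def moe_forward(
    x: Vector,
    router_scores: Sequence[float],
    experts: Sequence[Callable[[Vector], Vector]],
    top_k: int,
) -> Tuple[Vector, int]:
    """Route one token to its top_k experts and mix their outputs.

    Returns the mixed output and how many selected experts did real work.
    """
    probs = softmax(router_scores)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalise over the chosen experts
    out = [0.0] * len(x)
    computed = 0
    for i in chosen:
        y = experts[i](x)
        if experts[i] is not zero_computation_expert:
            computed += 1
        weight = probs[i] / norm
        out = [o + weight * v for o, v in zip(out, y)]
    return out, computed


experts = [ffn_expert(2.0), ffn_expert(3.0), zero_computation_expert, zero_computation_expert]

# An "easy" token whose router prefers the zero-computation experts.
easy_out, easy_cost = moe_forward([1.0, 2.0], [0.0, 0.0, 5.0, 4.0], experts, top_k=2)

# A "hard" token whose router prefers the real feed-forward experts.
hard_out, hard_cost = moe_forward([1.0, 2.0], [5.0, 4.0, 0.0, 0.0], experts, top_k=2)
```

In this toy setup the easy token activates no feed-forward experts and the hard token activates two. That is the general way such a design can let the compute spent per token vary while the total parameter count stays fixed.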

Comprehensive evaluation shows that LongCat-Flash-Omni reaches the open-source state of the art (SOTA) on omni-modal benchmarks and is also highly competitive on key single-modality tasks such as text, image, and video understanding and speech perception and generation. LongCat-Flash-Omni is the industry's first open-source large language model to combine full-modality coverage, an end-to-end architecture, and efficient inference at large parameter scale. For the first time in the open-source space, its omni-modal capabilities match those of closed-source models. Through architectural innovation and engineering optimization, it also shows that a large-parameter model can deliver millisecond-level responses on multimodal tasks, addressing the industry's pain point of inference latency.

- Text: LongCat-Flash-Omni retains the series' strong text capabilities and leads in many areas. Compared with earlier LongCat-Flash models, its text ability has not degraded and has even improved in some areas. This result confirms the effectiveness of the training strategy and highlights the potential synergy between modalities when training an omni-modal model.

- Image understanding: LongCat-Flash-Omni's performance (74.8 on RealWorldQA) is comparable to the closed-source omni-modal model Gemini-2.5-Pro and better than the open-source model Qwen3-Omni. Its advantage is especially pronounced on multi-image tasks, mainly thanks to training on high-quality interleaved image-text, multi-image, and video datasets.

- Audio: The model was evaluated on automatic speech recognition (ASR), text-to-speech (TTS), and speech continuation, with strong results. ASR outperforms Gemini-2.5-Pro on datasets such as LibriSpeech and AISHELL-1; speech-to-text translation (S2TT) is strong on CoVoST2; audio understanding reaches the current best results on TUT2017 and Nonspeech7k; and audio-to-text dialogue performs well on OpenAudioBench and VoiceBench. Its real-time audio-video interaction scores are close to those of closed-source models, and its human-likeness metric is better than GPT-4o's, showing that basic capabilities carry over efficiently into practical interaction.

- Video understanding: LongCat-Flash-Omni achieves the current best results on video-to-text tasks. Its short-video understanding is far ahead of the existing models it was compared against, and its long-video understanding is on par with Gemini-2.5-Pro and Qwen3-VL. This comes from dynamic frame sampling, a hierarchical video-processing strategy, and an efficient backbone network that supports long contexts.

- Cross-modal understanding: Performance is better than Gemini-2.5-Flash (non-thinking mode) and on par with Gemini-2.5-Pro (non-thinking mode). On WorldSense, a benchmark for real-world audio-video understanding, it shows a significant advantage over other open-source omni-modal models, confirming its efficient multimodal fusion capability and making it a current leader in overall capability among open-source models.

- End-to-end interaction: Because the industry does not yet have a mature evaluation framework for real-time multimodal interaction, the LongCat team built its own end-to-end evaluation scheme, combining quantitative user ratings (250 users) with qualitative expert analysis (10 experts, 200 dialogue samples). In the quantitative results, on the naturalness and fluency of end-to-end interaction, LongCat-Flash-Omni shows a clear advantage among open-source models, scoring 0.56 points higher than Qwen3-Omni, the current best open-source model. In the qualitative results, LongCat-Flash-Omni is on par with top-tier models on three dimensions (paralinguistic understanding, relevance, and memory) but still trails on three others (real-time responsiveness, human-likeness, and accuracy), which will be optimized further in future work.
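
The announcement does not say how the dynamic frame sampling mentioned under video understanding works. As a rough illustration only, the sketch below shows one plausible policy: sample at a fixed rate for short clips, and fall back to an evenly spread fixed frame budget for long ones. The function name `sample_frame_times` and the defaults `base_fps=2.0` and `max_frames=64` are hypothetical and are not taken from LongCat-Flash-Omni.

```python
from typing import List


def sample_frame_times(
    duration_s: float,
    base_fps: float = 2.0,   # hypothetical sampling rate for short clips
    max_frames: int = 64,    # hypothetical per-video frame budget
) -> List[float]:
    """Return timestamps (in seconds) of the frames to decode.

    Policy assumed for illustration: sample at base_fps, but if that would
    exceed max_frames, spread max_frames evenly across the whole video.
    """
    if duration_s <= 0:
        return []
    n = max(1, min(max_frames, int(duration_s * base_fps)))
    step = duration_s / n
    # Use the midpoint of each of the n equal-length segments.
    return [(i + 0.5) * step for i in range(n)]


short_clip = sample_frame_times(10.0)    # 10-second clip: sampled at base_fps
long_video = sample_frame_times(3600.0)  # 1-hour video: capped at max_frames
```

Under this policy, the number of visual tokens grows with clip length only up to the budget. That is one way a model could keep long videos within a fixed context window while still sampling short clips densely.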

The model has been open-sourced at the same time:

- Hugging Face: https://huggingface.co/metuan-longcat/LongCat-Flash-Omni

- GitHub: https://github.com/metuan-longcat/LongCat-Flash-Omni