On November 14th, Sina Weibo open-sourced its first large model, VibeThinker-1.5B, billed as proof that “small models can also be intelligent.”

1AI reproduces the official description below:

Now that most of the industry's most powerful models exceed 1T parameters, and even 2T-scale models have appeared, is high intelligence reserved for models with enormous parameter counts? Can only a handful of technology giants build large models?

VibeThinker-1.5B is Weibo AI's answer to this question, and the answer is no: it shows that small models can also be highly intelligent. Building the most powerful models therefore no longer depends primarily on scaling up parameters, as is conventionally thought; it can also be achieved through sophisticated algorithm design.

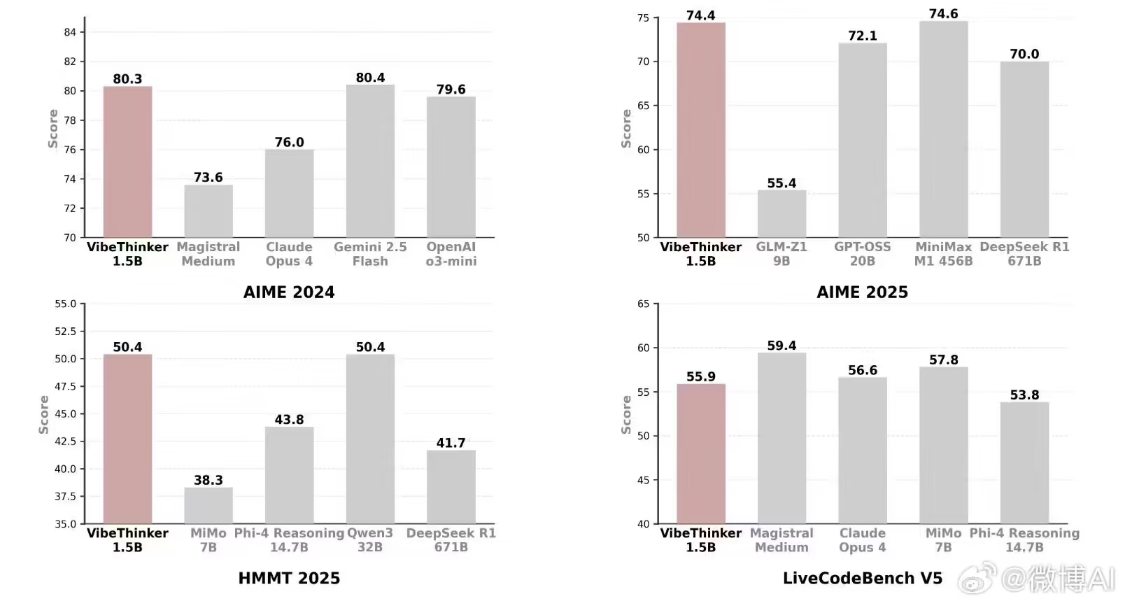

The model has only 1.5B (1.5 billion) parameters, yet after training with the “Spectrum-to-Signal Principle” (SSP) methodology developed by Weibo AI's R&D team, its results are groundbreaking. On three difficult math benchmarks, AIME24, AIME25 and HMMT25, VibeThinker outperforms DeepSeek-R1-0120 (671B parameters), a model with roughly 400 times as many parameters, and comes close to or matches the 456B MiniMax-M1. On LiveCodeBench v6 (a programming benchmark), it keeps pace with models tens of times its size, such as Magistral-Medium-2506, the deep-reasoning model from Mistral AI, Europe's leading AI company.
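The “400 times” figure is a rounded ratio of total parameter counts. A quick check using only the numbers quoted above (the dictionary and function names are illustrative, not taken from any Weibo code):

```python
# Total parameter counts in billions, as quoted in the article.
PARAMS_B = {
    "VibeThinker-1.5B": 1.5,
    "DeepSeek-R1-0120": 671.0,
    "MiniMax-M1": 456.0,
}


def size_ratio(big: str, small: str = "VibeThinker-1.5B") -> float:
    """Return how many times more total parameters `big` has than `small`."""
    return PARAMS_B[big] / PARAMS_B[small]


for name in ("DeepSeek-R1-0120", "MiniMax-M1"):
    print(f"{name}: about {size_ratio(name):.0f}x the parameters of VibeThinker-1.5B")
```

This gives roughly 447x for DeepSeek-R1-0120 and 304x for MiniMax-M1. Both of those are mixture-of-experts models that activate only a fraction of their weights per token, so the ratio compares total, not per-token active, parameters.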

VibeThinker's strength comes not from stacking parameters but from the SSP training concept developed by Weibo researchers. During the learning stage, SSP encourages the model to explore all possible solution paths rather than focusing only on accuracy; it then uses reinforcement learning to optimize the policy efficiently, pinpoint the correct path, and push the model's performance to its limit.
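The article does not say how SSP quantifies “exploring all possible solution paths” versus “accuracy.” One common way to make that contrast concrete, assumed here purely for illustration, is pass@k versus pass@1: pass@1 asks whether a single answer is right, while pass@k asks whether any of k sampled solutions is right. The sketch below uses the standard unbiased pass@k estimator from code-generation evaluation (Chen et al., 2021); the function name and sample counts are hypothetical, and this is not Weibo's training code.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k: probability that at least one of k samples, drawn
    without replacement from n generated samples of which c are correct,
    is correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Too few incorrect samples to fill k slots: a correct one is guaranteed.
        return 1.0
    # 1 minus the chance that all k drawn samples are incorrect.
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical example: 16 sampled solutions to one problem, 4 of them correct.
print(f"pass@1 = {pass_at_k(16, 4, 1):.3f}")  # single-answer accuracy
print(f"pass@8 = {pass_at_k(16, 4, 8):.3f}")  # a correct path among 8 tries?
```

In this made-up case the model answers correctly only a quarter of the time on a single try, yet a correct path appears among 8 samples about 96% of the time. Under this reading, the exploration stage widens that pool of correct paths, and the reinforcement-learning stage tries to turn it into a high single-answer rate.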

A single post-training run of the model cost less than $8,000. By comparison, post-training DeepSeek-R1 and MiniMax-M1 cost $290,000 and $530,000 respectively, a reduction of several dozen times (at least 36x and 66x, respectively).
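Because the article gives VibeThinker's cost only as an upper bound, the reduction factors it implies are lower bounds. A minimal check using the quoted figures (names are illustrative):

```python
# Post-training costs in US dollars, as quoted in the article.
VIBETHINKER_UPPER_BOUND = 8_000  # reported as "less than $8000"
BASELINES = {"DeepSeek-R1": 290_000, "MiniMax-M1": 530_000}


def min_reduction(baseline_cost: float, upper_bound: float = VIBETHINKER_UPPER_BOUND) -> float:
    """Lower bound on the cost-reduction factor: the true VibeThinker cost is
    below upper_bound, so the real factor is strictly larger than this."""
    return baseline_cost / upper_bound


for name, cost in BASELINES.items():
    print(f"{name}: at least {min_reduction(cost):.2f}x more expensive to post-train")
```

The factors come out to 36.25 and 66.25, consistent with the “several dozen times” claim.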

By open-sourcing VibeThinker-1.5B, Weibo aims to offer a new, cost-effective research and development path to medium-sized companies and university research teams around the world with limited computing resources, so that everyone can train cutting-edge large models instead of being left out as before. This is critical to technological progress across the industry.

GitHub: https://github.com/Weiboai/VibeThinker

HuggingFace: https://huggingface.co/Weiboai/VibeThinker-1.5B

arXiv: https://arxiv.org/pdf/2511.06221

ModelScope: https://www.modelscope.cn/models/WeiboAI/VibeThinker-1.5B