December 2 news: DeepSeek has released the official version of DeepSeek V3.2, which strengthens Agent capabilities and integrates thinking and reasoning.

Two official versions of the model were released today: DeepSeek-V3.2 and DeepSeek-V3.2-Speciale.

The official website, app, and API have all been updated to the official DeepSeek-V3.2. The Speciale version is currently offered only as a temporary API service, for community evaluation and research.

The technical report on the new model was released at the same time; 1AI provides the link below:

https://modelscope.cn/models/deepseek-ai/DeepSeek-V3.2/resolve/master/assets/paper.pdf

DeepSeek-V3.2

The goal of DeepSeek-V3.2 is to balance reasoning ability against output length, making it suitable for everyday use such as question answering and general-purpose Agent tasks.

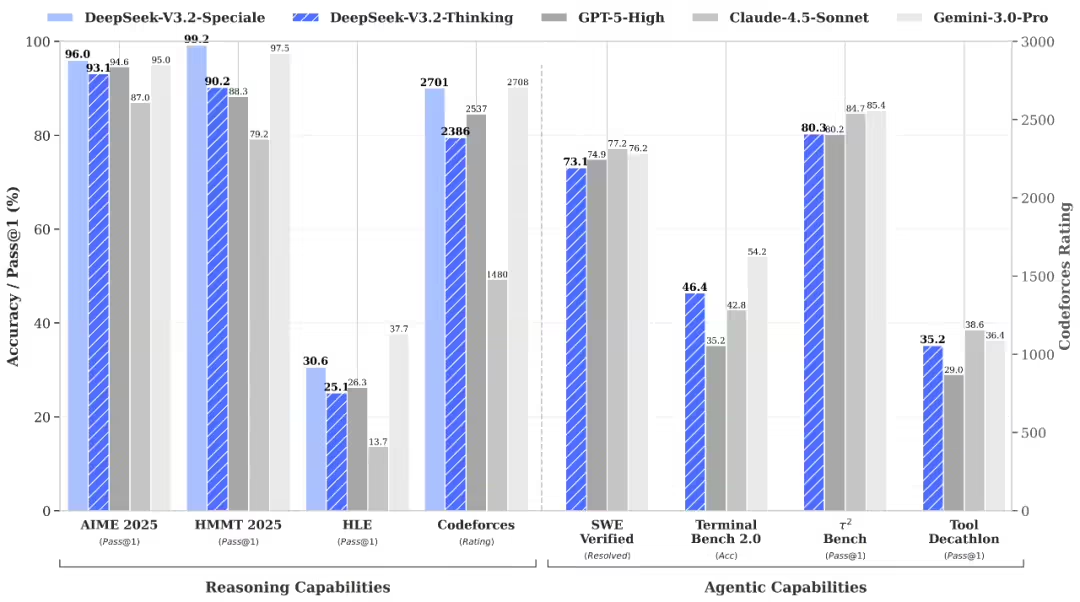

On public reasoning benchmarks, DeepSeek-V3.2 reaches the level of GPT-5 and falls only slightly below Gemini-3.0-Pro. Compared with Kimi-K2-Thinking, V3.2 produces significantly shorter outputs, which substantially reduces compute cost and user waiting time.

DeepSeek-V3.2-Speciale

DeepSeek-V3.2-Speciale aims to push the reasoning ability of open-source models to the extreme and to explore the boundaries of model capability.

V3.2-Speciale is a long-thinking enhanced version of DeepSeek-V3.2 that also incorporates the theorem-proving ability of DeepSeek-Math-V2. The model has strong instruction-following, mathematical proof, and logical verification capabilities, and its performance on mainstream reasoning benchmarks is comparable to Gemini-3.0-Pro.

The V3.2-Speciale model achieved gold-medal results at IMO 2025 (International Mathematical Olympiad), CMO 2025 (Chinese Mathematical Olympiad), ICPC World Finals 2025 (International Collegiate Programming Contest World Finals), and IOI 2025 (International Olympiad in Informatics). Among these, its ICPC and IOI results reached the level of second and tenth place among human contestants, respectively.

DeepSeek officially stated that on highly complex tasks the Speciale model is significantly better than the standard version, but it also consumes significantly more tokens and costs more. At present, DeepSeek-V3.2-Speciale is for research use only, does not support tool calls, and has not been specifically optimized for everyday conversation or writing tasks.

Unlike previous versions, which could not call tools in thinking mode, DeepSeek-V3.2 is DeepSeek's first model to integrate thinking into tool use, and it supports tool use in both thinking and non-thinking modes.
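As a rough illustration of what tool use in thinking mode looks like from the caller's side, the sketch below builds a chat request body that carries a tool definition. It assumes the API accepts the OpenAI-compatible chat-completions format; the `get_weather` tool and the `build_request` helper are hypothetical names introduced here, while `deepseek-reasoner` is the thinking-mode model name mentioned later in this article. No network call is made.

```python
import json

# Hypothetical tool definition in the OpenAI-compatible "function" schema.
# The tool name and its parameters are made up for illustration.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_request(prompt, model, tools=None):
    """Build a chat-completions request body; nothing is sent anywhere."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    if tools:
        # Tools ride along in the same request, whichever mode is selected.
        body["tools"] = list(tools)
    return body


if __name__ == "__main__":
    request = build_request(
        "Is it raining in Hangzhou?",
        model="deepseek-reasoner",  # thinking mode, per the article
        tools=[WEATHER_TOOL],
    )
    print(json.dumps(request, indent=2))
```

The same body with a non-thinking model name would exercise the other mode; only the `model` field changes.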

According to DeepSeek, the team proposed a large-scale method for synthesizing Agent training data and used it to construct a large number of "hard to answer, easy to verify" reinforcement learning tasks (800+ environments, 85,000+ complex instructions), which significantly improved the model's generalization ability.
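To make the "hard to answer, easy to verify" idea concrete, here is a toy sketch that is not taken from DeepSeek's environments: finding two non-trivial factors of a number requires search, while checking a proposed answer takes a single multiplication. The `FactorTask`, `verify`, and `reward` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FactorTask:
    """Toy task: find two integers greater than 1 whose product is `target`."""
    target: int


def verify(task, answer):
    """Cheap check: one multiplication, however hard the search was."""
    if len(answer) != 2:
        return False
    p, q = answer
    return p > 1 and q > 1 and p * q == task.target


def reward(task, answer):
    """Binary reward that an automatic verifier could hand to an RL trainer."""
    return 1.0 if verify(task, answer) else 0.0


if __name__ == "__main__":
    task = FactorTask(target=221)
    print(reward(task, (13, 17)))  # correct factorization
    print(reward(task, (1, 221)))  # trivial factor, rejected
```

Because the check is automatic, tasks of this shape can be generated and scored at scale without human grading.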

In agent evaluations, DeepSeek-V3.2 reached the highest level among current open-source models, significantly narrowing the gap between open-source and closed-source models. Notably, V3.2 received no special training on the tools used in these test sets.

DeepSeek-V3.2's thinking mode also adds support for Claude Code: users can enable it by changing the model name to deepseek-reasoner, or by pressing the Tab key in the Claude Code CLI to turn on thinking mode. Note, however, that thinking mode is not yet fully adapted to components such as Cline and RooCode that use non-standard tool calls, so DeepSeek officially recommends that users keep using non-thinking mode with such components.
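The recommendation above can be written down as a small selection rule. This is a minimal sketch, not an official configuration: `pick_model` is a hypothetical helper, and `deepseek-chat` is assumed here to be the non-thinking model name, which the article itself does not state.

```python
# Components the article says are not fully adapted to thinking mode.
NON_STANDARD_TOOL_CLIENTS = {"cline", "roocode"}


def pick_model(client, want_thinking):
    """Return the model name to configure for a given coding client.

    Assumption: "deepseek-chat" is the non-thinking model name;
    "deepseek-reasoner" is the thinking-mode name given in the article.
    """
    if client.strip().lower() in NON_STANDARD_TOOL_CLIENTS:
        # Stay in non-thinking mode, as officially recommended.
        return "deepseek-chat"
    return "deepseek-reasoner" if want_thinking else "deepseek-chat"


if __name__ == "__main__":
    for name in ("Claude Code", "Cline", "RooCode"):
        print(name, "->", pick_model(name, want_thinking=True))
```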

DeepSeek V3.2 open-source links

DeepSeek-V3.2

- HuggingFace: https://huggingface.co/deepseek-ai/DeepSeek-V3.2

- ModelScope: https://modelscope.cn/models/deepseek-ai/DeepSeek-V3.2

DeepSeek-V3.2-Speciale

- HuggingFace: https://huggingface.co/deepseek-ai/DeepSeek-V3.2-Speciale

- ModelScope: https://modelscope.cn/models/deepseek-ai/DeepSeek-V3.2-Speciale