On December 16, Tongyi Large Model announced through its official public account that two models in its Tongyi Bailing speech model family have been formally open-sourced and that two models have been upgraded. According to the announcement, three seconds of recorded audio are enough for your voice to switch seamlessly between languages, dialects, and emotions: Mandarin, Japanese, English, happy, angry, with 9 languages and 18 dialects all covered.

Upgrades

- Fun-CosyVoice3 model upgrade: first-packet latency reduced by 50%, accuracy on Chinese and English doubled, and support for 9 languages and 18 dialects, cross-lingual voice cloning, and emotion control.

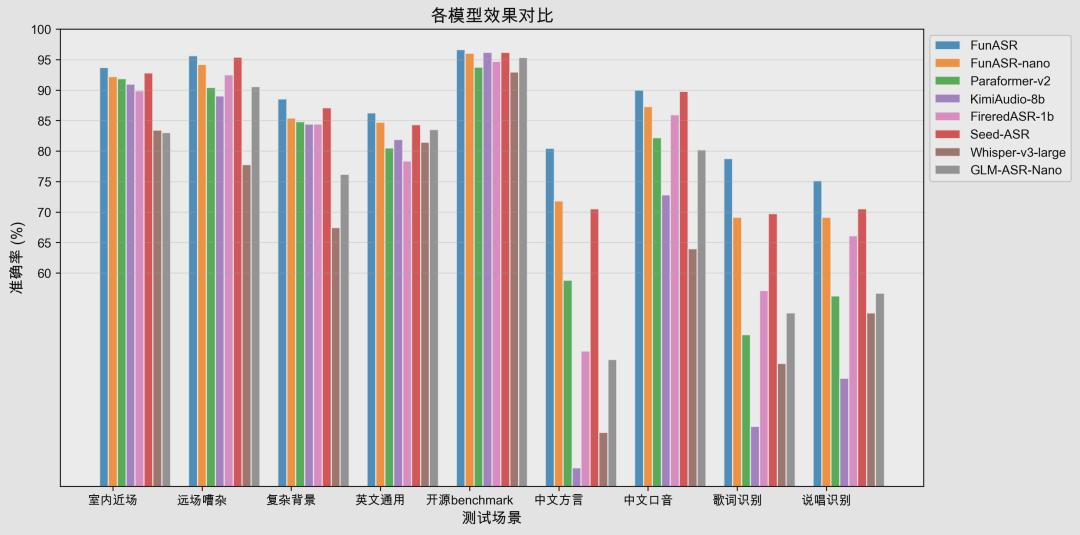

- Fun-ASR model capability enhancements: 93% accuracy in noisy scenes, lyrics and rap recognition, free mixing of 31 languages, coverage of dialects and accents, and first-word latency of the streaming recognition model reduced to 160 ms.

Open Source

- Fun-CosyVoice3 (0.5B) open-sourced: provides zero-shot voice cloning and supports local deployment and further development.

- Fun-ASR-Nano (0.8B) open-sourced: a lightweight version of Fun-ASR with lower inference cost; the open-source model supports local deployment and custom fine-tuning.

According to the official announcement, the Fun-CosyVoice3 large model has received several key upgrades in this release:

- First-packet latency is reduced by 50%, and bidirectional streaming synthesis is supported, so that speech is produced while text is still being input. This targets real-time scenarios such as voice assistants, live-stream voice-over, and accessible reading.

- A 56.4% relative improvement on mixed Chinese-English text: sentences that combine technical terms, measurements, or transliterated words can be pronounced accurately and naturally.

- In zero-shot TTS evaluation, content consistency and voice similarity both improve overall, and the character error rate (CER) on the complex-scene set (test-hard) falls by a relative 26%, approaching the level of human recordings.

- Support for 9 common languages, 18 Chinese dialects, and 9 emotion controls, together with cross-lingual voice cloning: a recording in Mandarin can produce speech in Chinese, Japanese, English, and other languages while the voice stays consistent.
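To make the "relative 26%" CER figure concrete, the sketch below computes a character error rate from an edit distance and then a relative reduction between two error rates. The example strings and the 0.10 and 0.074 error rates are illustrative assumptions, not numbers from the announcement, and the function names are hypothetical.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance between two strings, counted in characters."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # delete a reference character
                            curr[j - 1] + 1,      # insert a hypothesis character
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate: edit distance divided by reference length."""
    if not ref:
        raise ValueError("reference must be non-empty")
    return edit_distance(ref, hyp) / len(ref)


def relative_reduction(old: float, new: float) -> float:
    """Fraction by which `new` is lower than `old`."""
    return (old - new) / old


# Illustrative values only: a CER falling from 10% to 7.4%
# is a relative reduction of about 26%.
print(round(relative_reduction(0.10, 0.074), 2))
```

A relative reduction describes the change in proportion to the earlier error rate, so it is a different quantity from the absolute drop in percentage points.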

The open-source Fun-CosyVoice3-0.5B model provides zero-shot voice cloning: it needs only a reference audio clip of three seconds or more to reproduce the voice and synthesize new speech, and it supports local deployment and further development.
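Before passing a clip to any cloning pipeline, it can help to confirm that the reference audio meets the three-second minimum mentioned above. The following is a minimal sketch using only Python's standard `wave` module; the helper names and the generated silent test file are assumptions for illustration and are not part of the Fun-CosyVoice3 tooling.

```python
import os
import tempfile
import wave


def wav_duration_seconds(path: str) -> float:
    """Duration of a PCM WAV file, computed as frames / sample rate."""
    with wave.open(path, "rb") as wf:
        return wf.getnframes() / wf.getframerate()


def is_long_enough(path: str, min_seconds: float = 3.0) -> bool:
    """True if the clip reaches the three-second minimum cited in the article."""
    return wav_duration_seconds(path) >= min_seconds


def write_silence(path: str, seconds: float, rate: int = 16000) -> None:
    """Hypothetical helper: write a mono 16-bit silent WAV for testing."""
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)   # 2 bytes per sample = 16-bit PCM
        wf.setframerate(rate)
        wf.writeframes(b"\x00\x00" * int(seconds * rate))


if __name__ == "__main__":
    tmp = os.path.join(tempfile.mkdtemp(), "reference.wav")
    write_silence(tmp, 4.0)
    print(wav_duration_seconds(tmp), is_long_enough(tmp))
```

The check covers duration only; it says nothing about whether the clip is clean enough to clone from.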

Fun-ASR, for its part, lets AI "understand" what it hears. It is trained on tens of millions of hours of real speech data and has been deployed at scale in scenarios such as "AI hearing" and videoconferencing. Officially, this release of the model focuses on noisy acoustic environments, free mixing of multiple languages, coverage of Chinese dialects and accents, lyrics recognition, and customization, and it reduces the first-word latency of the streaming recognition model to 160 ms.
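First-word latency figures such as the 160 ms above can be checked on a local deployment by timing how long a streaming recognizer takes to yield its first partial result. The sketch below is generic: `fake_streaming_recognizer` is a hypothetical stand-in that simply sleeps, not the Fun-ASR API, and would be replaced by whatever iterator a real client exposes.

```python
import time
from typing import Iterable, Iterator, Tuple, TypeVar

T = TypeVar("T")


def time_to_first_item(stream: Iterable[T]) -> Tuple[float, T]:
    """Return (seconds elapsed, first item) for any iterable of partial results."""
    start = time.perf_counter()
    first = next(iter(stream))  # raises StopIteration if the stream is empty
    return time.perf_counter() - start, first


def fake_streaming_recognizer(delay_s: float) -> Iterator[str]:
    """Hypothetical stand-in: waits, then yields growing partial transcripts."""
    time.sleep(delay_s)
    yield "hello"
    yield "hello world"


if __name__ == "__main__":
    latency, first = time_to_first_item(fake_streaming_recognizer(0.05))
    print(f"first partial {first!r} after {latency * 1000:.0f} ms")
```

In a real measurement the timer would start when the first audio is sent, and results would be averaged over many utterances.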

Fun-CosyVoice3-0.5B open-source links:

- https://github.com/FunAudioLLM/CosyVoice (GitHub)

- https://funaudiolm.github.io/cosyvoice3/ (GitHub.io)

- https://www.modelscope.cn/studios/FunAudioLLM/Fun-CosyVoice3-0.5B (demo)

- https://modelscope.cn/models/FunAudioLLM/Fun-CosyVoice3-0.5B-2512 (model repository, mainland China)

- https://huggingface.co/FunAudioLLM/Fun-CosyVoice3-0.5B-2512 (model repository, international)

Fun-ASR-Nano-0.8B open-source links:

- https://github.com/FunAudioLLM/Fun-ASR (GitHub)

- https://funaudiolm.github.io/funasr/ (GitHub.io)

- https://modelscope.cn/studios/FunAudioLLM/Fun-ASR-Nano/ (demo, mainland China)

- https://huggingface.co/spaces/FunAudioLLM/Fun-ASR-Nano

- https://modelscope.cn/models/FunAudioLLM/fun-asr-nano-2512 (model repository, mainland China)

- https://huggingface.co/FunAudioLLM/Fun-ASR-Nano-2512 (model repository, international)