On December 17, Xiaomi officially released and open-sourced its new model, MiMo-V2-Flash. Here are the highlights:

MiMo-V2-Flash has 309 billion total parameters, of which 15 billion are active, and uses a Mixture-of-Experts (MoE) architecture. Its performance rivals leading open-source models such as DeepSeek-V3.2 and Kimi-K2.
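The gap between "total" and "active" parameters comes from MoE routing. The minimal Python sketch below shows top-k expert routing. The expert count (8), `k=2`, scalar inputs and all function names are hypothetical illustration choices, not MiMo-V2-Flash's actual configuration or code. A router scores every expert, but only the k selected experts run for a given token, so only their weights count as active.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical illustration of top-k MoE routing; not MiMo-V2-Flash's real code or config.

def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    gates = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, gates))

def moe_forward(x: float, experts: Sequence[Callable[[float], float]],
                router_logits: Sequence[float], k: int) -> float:
    """Weighted sum of the k routed experts' outputs; unselected experts never run."""
    return sum(gate * experts[idx](x) for idx, gate in top_k_route(router_logits, k))

if __name__ == "__main__":
    # 8 toy experts (hypothetical); expert i multiplies its input by i.
    experts = [lambda x, i=i: x * i for i in range(8)]
    logits = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 5.0, 5.0]
    print(top_k_route(logits, k=2))                 # experts 6 and 7, gate 0.5 each
    print(moe_forward(2.0, experts, logits, k=2))   # 0.5*12 + 0.5*14 = 13.0
```

Real MoE layers route vectors rather than scalars and add load-balancing terms during training, but the compute saving comes from the same selection step.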

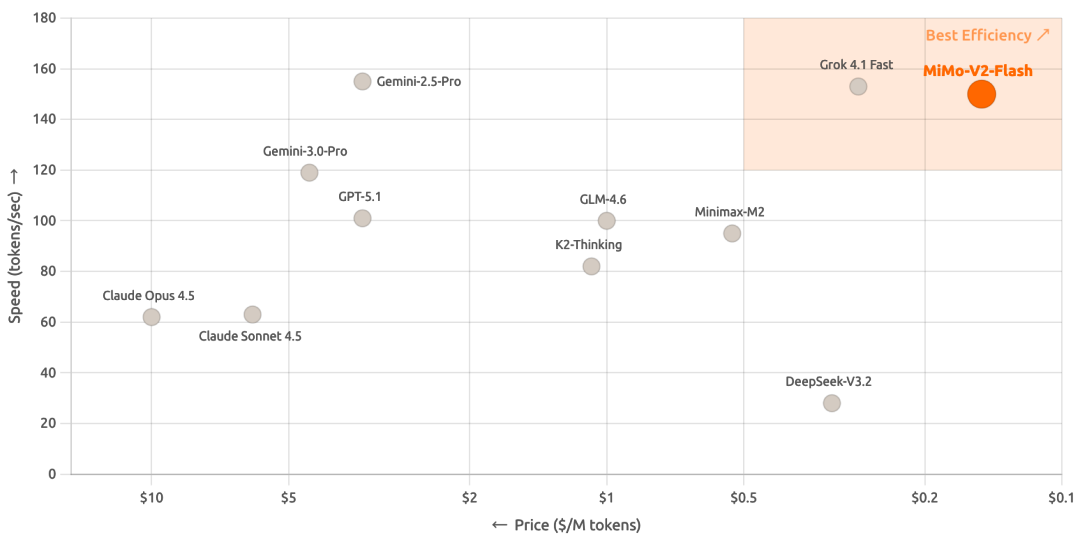

Open-source label aside, MiMo-V2-Flash's real edge is its aggressive architectural innovation, which pushes inference speed to 150 tokens per second. Pricing is $0.1 per million input tokens and $0.3 per million output tokens, which makes it exceptionally cost-effective.
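To make these figures concrete, here is a small Python calculator built only from the numbers quoted above. It assumes the $0.1 figure is the input price and treats 150 tokens/s as pure generation speed, ignoring prompt processing and network latency; the 10,000/2,000-token workload is hypothetical.

```python
# Figures taken from the article; treating $0.1 as the input price is an assumption.
INPUT_USD_PER_MILLION = 0.1
OUTPUT_USD_PER_MILLION = 0.3
DECODE_TOKENS_PER_SECOND = 150

def request_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """API cost of one request at the quoted per-million-token prices."""
    return (input_tokens * INPUT_USD_PER_MILLION
            + output_tokens * OUTPUT_USD_PER_MILLION) / 1_000_000

def decode_seconds(output_tokens: int,
                   tokens_per_second: float = DECODE_TOKENS_PER_SECOND) -> float:
    """Rough generation time, ignoring prompt processing and network latency."""
    return output_tokens / tokens_per_second

if __name__ == "__main__":
    # Hypothetical workload: a 10,000-token prompt producing a 2,000-token answer.
    print(f"cost: ${request_cost_usd(10_000, 2_000):.4f}")   # $0.0016
    print(f"time: {decode_seconds(2_000):.1f} s")            # about 13.3 s
```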

Benchmark results place MiMo-V2-Flash in the top two open-source models on both the AIME 2025 math competition and the GPQA-Diamond science knowledge test. Its coding ability stands out even more: it scores 73.4% on SWE-bench Verified, surpassing all open-source models and approaching GPT-5-High.

On agentic tasks, MiMo-V2-Flash scored 95.3, along with 79.5 on Retail and 66.0 on Airline. On the BrowseComp search-agent benchmark it scored 45.4, rising to 58.3 when context management was enabled.

These results suggest that MiMo-V2-Flash does more than write code: it can follow complex task logic and carry out multi-turn agentic interactions.

Notably, Xiaomi adopted a hybrid sliding-window attention mechanism this time, with an aggressive 5:1 ratio: five sliding-window attention layers alternate with one global attention layer, and each sliding window covers 128 tokens.
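The interleaving described above can be sketched in Python as a layer schedule plus a causal attention rule. This is a minimal illustration, not Xiaomi's implementation. It assumes that the global layer comes last in each group of six and that the 128-token window includes the current token; both are assumptions, and the function names are hypothetical.

```python
from typing import List

WINDOW = 128          # sliding-window size stated in the article
SWA_PER_GLOBAL = 5    # 5 sliding-window layers per 1 global layer

def layer_types(num_layers: int, swa_per_global: int = SWA_PER_GLOBAL) -> List[str]:
    """Label layers 'swa' or 'global' in a repeating 5:1 pattern.
    Assumption: the global layer is the last one in each group."""
    period = swa_per_global + 1
    return ["global" if (i + 1) % period == 0 else "swa" for i in range(num_layers)]

def can_attend(query_pos: int, key_pos: int, layer_type: str, window: int = WINDOW) -> bool:
    """Causal rule: global layers see every earlier token; sliding-window
    layers see only the last `window` tokens (assumed to include the query itself)."""
    if key_pos > query_pos:
        return False
    if layer_type == "global":
        return True
    return query_pos - key_pos < window

def visible_keys(query_pos: int, layer_type: str, window: int = WINDOW) -> int:
    """Number of positions a query at `query_pos` can attend to."""
    return sum(can_attend(query_pos, k, layer_type, window) for k in range(query_pos + 1))

if __name__ == "__main__":
    print(layer_types(12))               # five 'swa', one 'global', repeated twice
    print(visible_keys(1000, "swa"))     # 128
    print(visible_keys(1000, "global"))  # 1001
```

Because a sliding-window layer never looks back further than the window, its key/value cache stays bounded by 128 tokens however long the context grows; only the global layers have to keep the full history.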

On this point, Luo Fuli shared a finding on social media: a window size of 128 is the sweet spot. She also stated that MiMo-V2-Flash is only the second step on Xiaomi's AGI roadmap.

According to the official demo page, MiMo-V2-Flash also supports deep thinking and web search, making it suitable both for everyday chat and for scenarios that require real-time data, the latest news, or fact-checking.

MiMo-V2-Flash is released under the MIT license, and the base model weights have also been published on Hugging Face.

Xiaomi MiMo AI Studio (try it online): http://aistudio.xiaomimo.com

Hugging Face model page: http://hf.co/XiaomiMiMo/MiMo-V2-Flash

Technical report: http://github.com/XiaomiMiMo/MiMo-V2-Flash/blob/main/paper.pdf