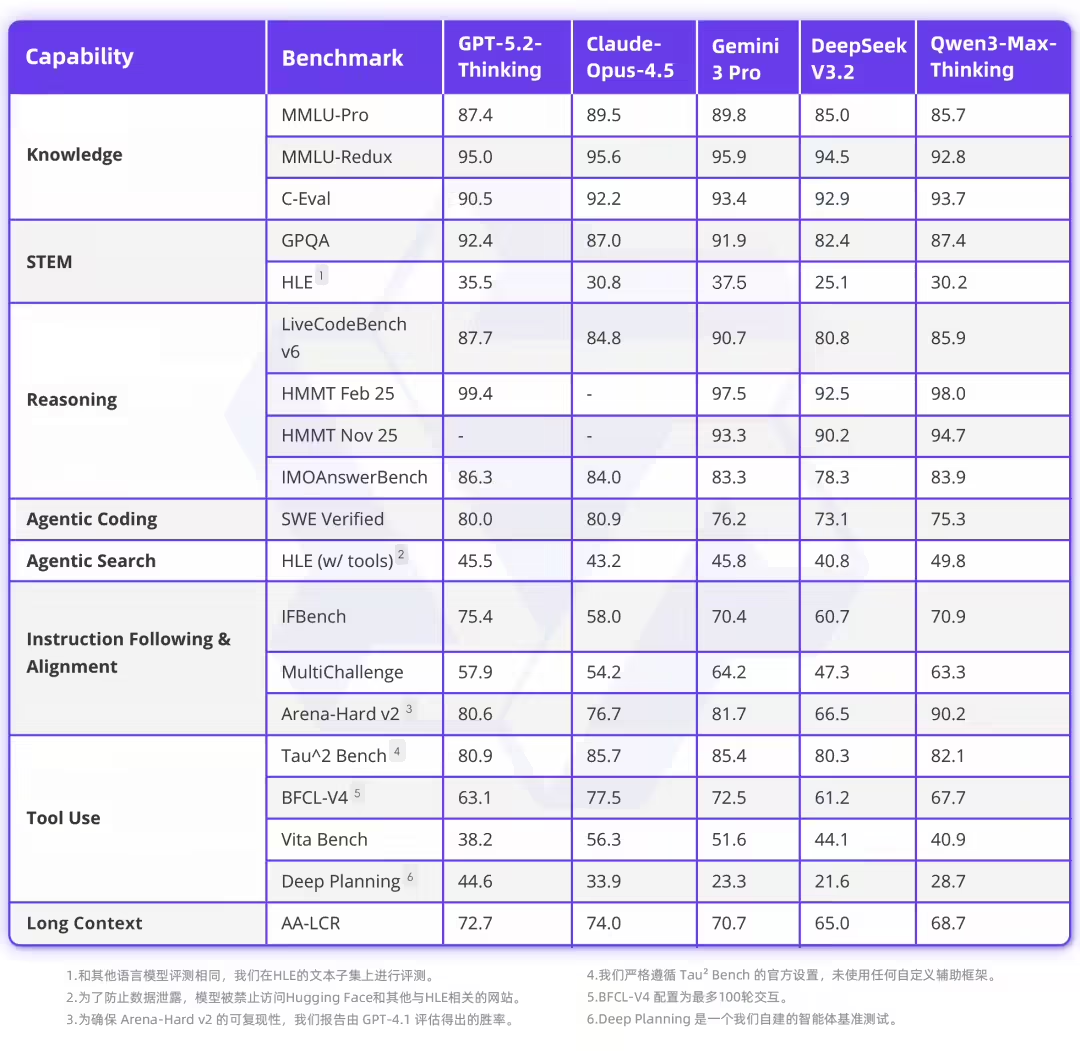

On January 27, Alibaba released Qwen3-Max-Thinking, the flagship reasoning model in its Qwen series. According to the official announcement, it achieves significant improvements in several key dimensions, including factual knowledge, complex reasoning, instruction following, alignment with human preferences, and agent capabilities. Across 19 authoritative benchmarks, its performance is comparable to top models such as GPT-5.2-Thinking, Claude-Opus-4.5, and Gemini 3 Pro.

The new model has more than one trillion total parameters and underwent larger-scale reinforcement learning post-training. Combined with a series of innovations in reasoning techniques, this produced a significant leap in model performance. Qwen3-Max-Thinking has also substantially strengthened its native agent ability to call tools autonomously, as reflected in several key capability benchmarks. It can think like a professional, and its answers are more natural, intelligent, and fluent. At the same time, model hallucinations have been significantly reduced, laying the groundwork for solving real-world, complex tasks.

According to the official announcement, Qwen3-Max-Thinking has set several new state-of-the-art (SOTA) records, reaching an internationally leading level on key benchmarks such as scientific knowledge (GPQA Diamond), mathematical reasoning (IMO-AnswerBench), and coding (LiveCodeBench).

Qwen3-Max-Thinking is now available in Qwen Chat, where users can interact directly with the model and its adaptive tool-calling capability. The API for Qwen3-Max-Thinking (model name qwen3-max-2026-01-23) is also open.

1AI has included links for trying the model:

- Qwen Chat:

- Alibaba Cloud Bailian: https://bailian.console.aliyun.com/cn-beijing/?tab=model#/model-market/detail/qwen3-max-2026-01-23
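For readers who want to try the API, the following is a minimal sketch of how a request might be assembled using only the Python standard library. The model name comes from the announcement. The endpoint URL, the OpenAI-style payload shape, and the helper name `build_chat_request` are assumptions made for illustration, so check the Bailian console for the actual values before relying on them.

```python
import json
import urllib.request

# Assumption: an OpenAI-compatible chat-completions endpoint. The announcement
# does not state the URL; confirm it in the Bailian console.
ASSUMED_ENDPOINT = "https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions"

# Model name as given in the announcement.
MODEL_NAME = "qwen3-max-2026-01-23"


def build_chat_request(prompt: str, api_key: str,
                       endpoint: str = ASSUMED_ENDPOINT) -> urllib.request.Request:
    """Build (but do not send) a chat request for the model.

    Hypothetical helper: the payload follows the common OpenAI-style
    chat format, which is assumed here rather than confirmed by the source.
    """
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

With a valid key, the request could be sent with `urllib.request.urlopen(build_chat_request("Hello", api_key))` and the JSON response decoded from the returned body.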

Qwen3-Max-Thinking is reported to have two core innovations:

- Adaptive tool use: the model calls the search engine and code interpreter on demand. This capability is now live in Qwen Chat.

- Test-time scaling: this significantly improves reasoning performance, surpassing Gemini 3 Pro on key reasoning benchmarks.

The official description is as follows:

- Adaptive tool-use capability

- Qwen3-Max-Thinking autonomously selects and calls its built-in search, memory, and code interpreter capabilities during a conversation, unlike earlier approaches that required users to select tools manually. This capability comes from a specially designed training process: after initial fine-tuning for tool use, the model is further trained on diverse tasks using rule-based and model-based feedback. Experiments show that the search and memory tools effectively mitigate hallucinations, provide access to real-time information, and support more personalized responses. The code interpreter lets users execute code snippets and apply computational reasoning to solve complex problems. Together, these capabilities deliver a fluid and powerful conversational experience.

- Test-time scaling

- Test-time scaling refers to techniques that allocate additional compute at inference time to improve model performance. We propose an experience-accumulating, multi-round test-time scaling strategy. Rather than simply increasing the number of parallel reasoning trajectories N (which often leads to redundant reasoning), we limit N and redirect the saved compute to iterative self-reflection guided by an “experience extraction” mechanism. The mechanism distills key insights from past rounds of reasoning, allowing the model to avoid re-deriving known conclusions and instead focus on unresolved uncertainties. Crucially, compared with directly referencing the raw reasoning trajectories, the mechanism uses context more efficiently and integrates more historical information within the same context window. At roughly the same token consumption, the method consistently outperforms standard parallel sampling and aggregation: GPQA (90.3 → 92.8), HLE (34.1 → 36.5), LiveCodeBench v6 (88.0 → 91.4), IMO-AnswerBench (89.5 → 91.5), and HLE (w/ tools) (55.8 → 58.3).
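The multi-round idea described above can be illustrated with a toy sketch. This is not the official implementation: `Trajectory`, `distill`, `multi_round_scaling`, and `toy_solver` are hypothetical names, and the "model" is a trivial stand-in that guesses candidates and checks its own answer. The sketch only shows the control flow: keep the number of parallel passes N small, distill each round into short notes, and feed those notes into the next round so known results are not re-derived.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Trajectory:
    answer: str
    verified: bool  # toy assumption: each pass can self-check its answer
    trace: str      # raw reasoning text (long in practice, unused after distilling)


# A solver receives the question, the accumulated notes, and its slot index.
Solver = Callable[[str, List[str], int], Trajectory]


def distill(batch: List[Trajectory], experience: List[str]) -> None:
    """Condense raw trajectories into short notes, skipping duplicates."""
    for t in batch:
        note = ("confirmed " if t.verified else "ruled out ") + t.answer
        if note not in experience:
            experience.append(note)


def multi_round_scaling(question: str, solver: Solver, n_parallel: int = 2,
                        max_rounds: int = 4) -> Tuple[Optional[str], int, List[str]]:
    """Run a few parallel passes per round, carrying distilled notes forward."""
    experience: List[str] = []
    for round_idx in range(max_rounds):
        batch = [solver(question, experience, i) for i in range(n_parallel)]
        distill(batch, experience)
        confirmed = [t.answer for t in batch if t.verified]
        if confirmed:
            return confirmed[0], round_idx + 1, experience
    return None, max_rounds, experience


def toy_solver(question: str, experience: List[str], slot: int) -> Trajectory:
    """Stand-in for one reasoning pass: skips candidates already ruled out."""
    ruled_out = {n.split()[-1] for n in experience if n.startswith("ruled out")}
    candidates = [c for c in ["3", "5", "7", "9"] if c not in ruled_out]
    guess = candidates[slot % len(candidates)]
    return Trajectory(guess, int(guess) ** 2 == 49, f"tried {guess} for {question}")
```

Calling `multi_round_scaling("x*x == 49", toy_solver)` finds the answer in the second round because the first round's failures are carried forward as notes. Without the notes, this toy solver would repeat the same two guesses every round, which is the redundancy the description attributes to plain parallel sampling.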