
Trillion-parameter flagship model released, with comprehensive upgrades to efficient reasoning and cross-domain capabilities

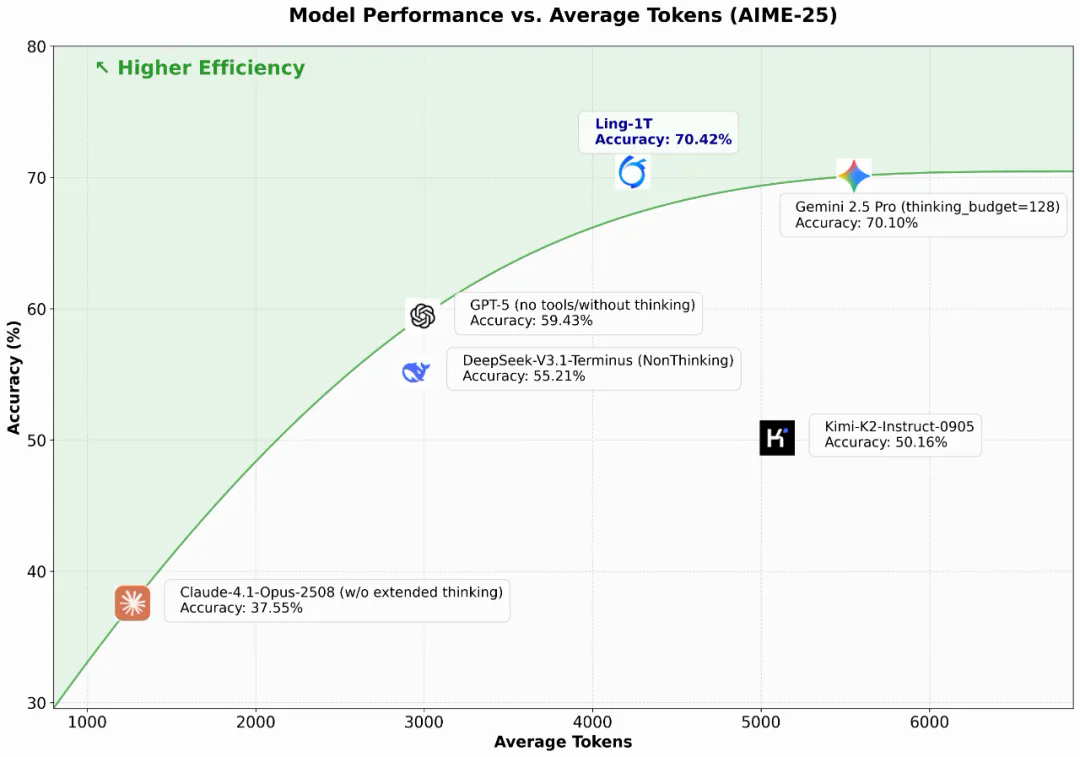

Yesterday, the Bailing large model team officially launched Ling-1T, the first flagship non-thinking model in the Ling 2.0 series. According to the team, the model is built on the Ling 2.0 architecture and has 1T total parameters, of which about 50B are activated for each token. It was pre-trained on more than 20T tokens of high-quality data and supports a context window of up to 128K tokens. The official announcement states that Ling-1T leads on a number of challenging benchmarks covering code generation, software development, competition mathematics, and logical reasoning.
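The headline figures imply a few quick ratios. The sketch below is a back-of-the-envelope calculation under stated assumptions, not anything published by the team. It computes the fraction of parameters active per token, then estimates compute with two common rules of thumb: about 2 FLOPs per active parameter per token for a forward pass, and about 6 FLOPs per active parameter per training token. These approximations and the function names are illustrative assumptions. The 20T token count is treated as a lower bound, since the announcement says "more than 20T".

```python
# Back-of-the-envelope arithmetic for a sparsely activated model.
# Inputs come from the announcement; the 2*N and 6*N*D FLOP rules are
# generic approximations (assumptions), not figures reported for Ling-1T.

TOTAL_PARAMS = 1e12    # ~1T total parameters
ACTIVE_PARAMS = 50e9   # ~50B parameters activated per token
TRAIN_TOKENS = 20e12   # >20T pre-training tokens (used as a lower bound)


def activation_ratio(active: float, total: float) -> float:
    """Fraction of all parameters that participate in processing one token."""
    return active / total


def forward_flops_per_token(active: float) -> float:
    """Approximate forward-pass cost: ~2 FLOPs per active parameter per token."""
    return 2 * active


def training_flops(active: float, tokens: float) -> float:
    """Approximate training cost: ~6 FLOPs per active parameter per token."""
    return 6 * active * tokens


if __name__ == "__main__":
    ratio = activation_ratio(ACTIVE_PARAMS, TOTAL_PARAMS)
    sparse = forward_flops_per_token(ACTIVE_PARAMS)
    dense = forward_flops_per_token(TOTAL_PARAMS)  # hypothetical dense 1T model
    print(f"activation ratio: {ratio:.1%}")                    # 5.0%
    print(f"forward FLOPs/token (sparse): {sparse:.1e}")      # 1.0e+11
    print(f"forward FLOPs/token (dense 1T): {dense:.1e}")     # 2.0e+12
    print(f"dense/sparse cost ratio: {dense / sparse:.0f}x")  # 20x
    print(f"training FLOPs (lower bound): "
          f"{training_flops(ACTIVE_PARAMS, TRAIN_TOKENS):.2e}")  # 6.00e+24
```

Under these assumptions, each token touches about 5% of the weights, so per-token compute is roughly that of a 50B dense model rather than a 1T one, while the full 1T parameters still have to be stored and served.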
